Big Data London 2025

TL;DR: Big Data LDN returned as the UK's largest free data and AI event, drawing 15,000+ professionals and 150+ exhibitors to Olympia in South West London. AI agents dominated this year's event, but the organizations demonstrating real AI success had done the foundational work first. For senior leaders making platform decisions now, the message is clear: the strategic imperative isn't choosing between innovation and governance; it's recognizing that governance enables innovation at scale.

The Industry's Evolution: Five Years in Focus

When I was a CTO, Big Data LDN was exactly the type of conference I would make a point of attending. With most of the major vendors represented, it offers a rare opportunity to bring yourself up to date with the data and AI landscape. CTOs and other senior decision makers are continually making important choices about platforms, architectures, methodologies and skills for the future, and this conference can really help to shape and validate those decisions.

One of the most fascinating aspects of attending Big Data LDN over the last five years has been watching it serve as a barometer of where the industry's collective attention has shifted. Each annual edition captures a different phase in the evolution of the data landscape:

- 2021: The Lakehouse emerged as the solution for bridging data lakes and warehouses. Together with the Medallion Architecture, it became the foundation for unifying analytics and AI workloads.

- 2022: Data Mesh dominated: how do we decentralize data ownership while maintaining coherence? Data Products emerged as the organizing principle. Eli's blog provides deeper insights into the 2022 event.

- 2023: The ChatGPT revolution hit the conference hard. The focus was very much on using Generative AI to spearhead innovation.

- 2024: The focus shifted to how organizations could leverage GenAI while building the proper foundations, with a growing realisation that AI is only as smart as the data it's fed.

- 2025: AI Agents took center stage, promising new use cases and accelerating the democratisation of data and analytics.

The progression tells a story. We've moved up the stack from infrastructure debates (where should data live?) through architectural patterns (how should we organize it?) to operational models (how should we manage it?) and now to the intelligence layer (how should AI interact with it?).

But here's what's crucial: each layer builds on the previous ones. Organizations jumping straight to AI agents without solid infrastructure, governance, and product thinking will struggle.

The success stories at this year's event all shared one characteristic: they had done the foundational work first.

A packed event

Here are some photos to give you a sense of the scale of the event.

The vast exhibition space housed 150+ vendor stands, each competing for attention. Multiple theatre spaces were curtained off but not soundproofed, creating a cacophony in which live demonstrations blended with theatre presentations and hundreds of simultaneous conversations.

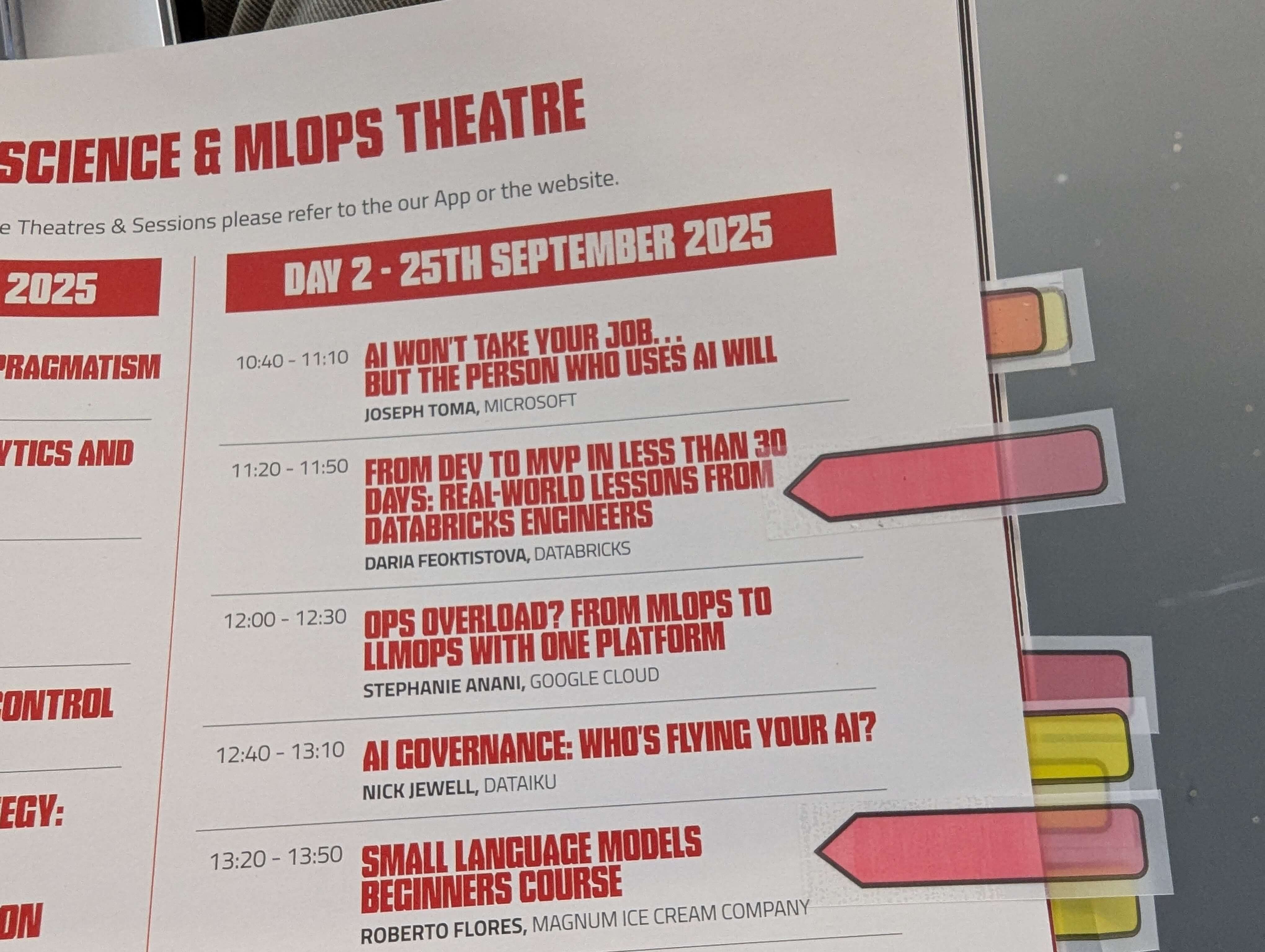

Parallel tracks ran across 17 theatres, so it was sometimes difficult to choose which session to attend! Some theatres provided noise-cancelling headphones to help cut through the din.

Google's stand was very popular: you could design and print your own custom travel tag with the help of AI, and there was also a Lego building competition with live commentary, with the final winner judged by an AI.

Plan ahead: identify the sessions you'd like to attend and the exhibitors you'd like to visit. Popular sessions filled up 30 minutes before they started, so I pivoted to focus on the expo floor, where I could control my experience. The expo floor offers interactions you can't replicate later, whereas the talks will be available on YouTube within two to three weeks. Subscribe to the Big Data LDN YouTube channel to be notified when these videos drop!

Agents everywhere!

If there was one dominant theme threading through Big Data LDN 2025, it was AI agents. But we need to distinguish between two very different approaches that signal fundamentally different bets about the future:

The Integration Play

Most established vendors are following what we might call the "AI augmentation" strategy: integrating generative AI capabilities into existing platforms. The core product remains familiar, but now it has AI-powered assistance for common tasks. This makes perfect sense for products with well-established workflows that are fundamentally sound and just need AI assistance. Why force users to learn entirely new paradigms when you can make their current work more intelligent?

The Agent-First Approach

More intriguing were the handful of new entrants building products that are fundamentally agentic from the ground up. These aren't traditional BI tools with ChatGPT bolted on; they're platforms designed around the assumption that AI agents will be the primary interface between users and data.

Companies like Lynk AI exemplify this approach. Rather than asking "how do we add AI to our existing product?", they're asking "if we put AI agents at the heart of the data value chain, what should the architecture look like?" This agent-first approach assumes AI will fundamentally restructure how we interact with data.

Whether these emerging companies can disrupt the established mega vendors remains to be seen. But it was exciting to see the innovation on display and the focus on capabilities that have historically received less emphasis.

Semantic Layer: The Foundation for Agentic Analytics

This brings us to what might be the most important architectural conversation happening right now: the semantic layer.

The semantic layer sits between your curated data and your AI. It provides context and meaning for that data, helping the AI navigate your organization's knowledge and arrive at the right answer.

Without a robust semantic layer, AI agents are essentially guessing about what your data means. It's like asking someone to translate a document written in a language they don't speak: they might produce something, but it won't be trustworthy.

The semantic layer enables:

- Consistent definitions - What does "customer" mean within and across different domains?

- Business context - How do metrics relate to business outcomes?

- Governed logic - Ensuring AI agents use approved calculation methods, such as how to derive "gross margin".

- Explainable results - Tracing how answers were generated, with supporting lineage and citations back to ground truth.

Companies like Lynk AI are positioning the semantic layer as the critical infrastructure for trustworthy agentic analytics. Based on our work with enterprise clients struggling to implement AI on messy data, this emphasis is spot-on.
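To make the idea more concrete, here is a minimal Python sketch of a semantic layer as a registry of governed metric definitions. It is purely illustrative and assumes nothing about how Lynk AI or any other vendor implements theirs: the `Metric` and `SemanticLayer` classes, the gross margin formula `(revenue - cogs) / revenue`, and the `gold.sales_summary` table name are all hypothetical. It shows three of the properties listed above: different phrasings resolve to one consistent definition, the calculation is fixed rather than improvised by the agent, and every answer carries its inputs and lineage. Unknown terms are refused rather than guessed.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Tuple


@dataclass(frozen=True)
class Metric:
    """A governed metric: one approved meaning and one approved calculation."""
    name: str
    description: str
    inputs: Tuple[str, ...]                      # columns the formula depends on
    formula: Callable[[Dict[str, float]], float]
    source: str                                  # hypothetical lineage pointer to a curated table


class SemanticLayer:
    """Hypothetical minimal registry mapping business terms to governed metrics."""

    def __init__(self) -> None:
        self._metrics: Dict[str, Metric] = {}
        self._synonyms: Dict[str, str] = {}

    def register(self, metric: Metric, synonyms: Iterable[str] = ()) -> None:
        self._metrics[metric.name] = metric
        # Map alternative business phrasings onto the single governed name.
        for term in synonyms:
            self._synonyms[term.lower()] = metric.name

    def resolve(self, term: str) -> Metric:
        key = term.lower()
        name = self._synonyms.get(key, key)
        if name not in self._metrics:
            # Refuse rather than guess when no governed definition exists.
            raise KeyError(f"No governed definition for {term!r}")
        return self._metrics[name]

    def answer(self, term: str, row: Dict[str, float]) -> Dict[str, object]:
        metric = self.resolve(term)
        missing = [col for col in metric.inputs if col not in row]
        if missing:
            raise ValueError(f"{metric.name} needs columns {missing}")
        return {
            "metric": metric.name,
            "value": metric.formula(row),
            # Explainability: which definition, inputs and source produced the value.
            "definition": metric.description,
            "inputs": {col: row[col] for col in metric.inputs},
            "lineage": metric.source,
        }


GROSS_MARGIN = Metric(
    name="gross_margin",
    description="(revenue - cogs) / revenue",
    inputs=("revenue", "cogs"),
    formula=lambda r: (r["revenue"] - r["cogs"]) / r["revenue"],
    source="gold.sales_summary",  # hypothetical table name
)

layer = SemanticLayer()
layer.register(GROSS_MARGIN, synonyms=["gross margin", "GM"])
result = layer.answer("Gross Margin", {"revenue": 200.0, "cogs": 150.0})
print(result["metric"], result["value"], result["lineage"])
```

Asking for "Gross Margin" with revenue of 200 and COGS of 150 resolves to the single `gross_margin` definition and returns 0.25, together with the definition and lineage an agent could cite. A production semantic layer would more typically declare metrics in configuration and compile them to SQL against the warehouse; Python callables are used here only to keep the sketch self-contained.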

Vendors to watch: Innovation Beyond the Mega Platforms

Three of the "big four" mega vendors (Databricks, Snowflake, Google) had large stands that were extremely busy, with enough space to run their own demos and presentations.

I was surprised to find that Microsoft, the fourth of the "big four", had no presence in the exhibition hall. Numerous exhibitors that partner with Microsoft were there. But Microsoft themselves? Absent. This is puzzling.

Microsoft had recently held their own Fabric Conference (FabCon) in Vienna: my colleagues Carmel and Jess attended the event and wrote up a great summary. It's great that FabCon exists, but it is an event for people who are already committed to Fabric: they're willing to pay for the conference and will get a lot out of it. Big Data LDN, by contrast, gives CTOs and other senior decision makers a single (free!) event at which to assess the whole ecosystem, and if you're not in the room, you're not part of the conversation. Given the competitive intensity in the data platform space, with Snowflake, Google and Databricks all having a significant presence and actively supporting their wider partner ecosystems, Microsoft's absence feels like a missed opportunity.

Away from the big four and their respective ecosystems, some of the most intriguing innovation was happening in the "Discovery Zone", where smaller niche vendors could be found. Based on conversations and demonstrations, here are three emerging vendors addressing real pain points with differentiated approaches:

Lynk AI: Semantic Layer for Agentic Analytics

As discussed earlier, the semantic layer provides essential context for AI agents. Lynk AI is building a semantic layer platform specifically designed for AI agents, not as an afterthought to traditional BI. Their focus on creating a "semantic graph" that gives AI context about your data relationships addresses the fundamental trust problem in agentic analytics.

MotherDuck: DuckDB for Collaboration at Scale

DuckDB has become the darling of the data engineering community for its speed and simplicity. See my blog series on DuckDB and DuckLake for more background about this exciting new analytics engine. MotherDuck takes the open-source DuckDB engine and wraps it with the operational capabilities enterprises need: cloud-native scaling, team collaboration, and managed operations. By leveraging DuckDB's cutting-edge in-process analytics engine, the platform can run blazingly fast queries at a TCO significantly lower than that of its competitors. If you're exploring alternatives to traditional data warehouses for analytical workloads, it is worth evaluating.

dltHub: Python-Native Data Loading

dltHub offers a Python library and platform for data loading that's built for the way modern data teams actually work. Instead of configuring low-code tools, you write Python. This resonates with the growing population of Python-first data engineers who find traditional ETL tools too rigid. This focus on DevEx (developer experience) becomes increasingly important as organizations aim to accelerate time to value, avoid vendor lock-in and minimize technical debt.

The Pattern: These three vendors exemplify a broader pattern emerging across the ecosystem: they're not trying to replace your entire platform. They're solving specific, high-value problems that mega vendors either overlook or address as afterthoughts. The strategic question for data leaders: when does the innovation and fit of a specialized vendor outweigh the simplicity of staying entirely within one mega platform's ecosystem?

Based on our experience, the answer is usually "hybrid": use a mega platform for core capabilities, then integrate specialized vendors where they add meaningful value. The key is ensuring your chosen mega platform has a strong commitment to open standards (Delta Lake, Iceberg, Arrow) that enable this flexibility.

Beyond Technology: The Socio-Technical Reality

One of the most encouraging observations from this year's event was the growing recognition that data transformation is a socio-technical endeavour. Success requires alignment of people, processes, and technology.

People: Skills Are the Bottleneck

The prominence of training and education providers reflects the reality that skills, not tools or platforms, constrain progress.

Organizations face a critical choice: pay a premium for scarce external talent, or invest in developing internal capability. Our client engagements consistently demonstrate that building your own data talent yields better long-term results, because these people already understand your business, culture, and challenges.

Career-switchers were also well represented at the event. Treating people capability as a primary concern, rather than an afterthought, is an opportunity for organizations.

Processes: Governance as Enablement

The strong showing of governance vendors reflects painful lessons learned. The 25+ vendors in the Data Governance & Quality category exist because enterprises have learned that data sprawl without governance creates expensive problems: compliance violations, incorrect decisions, duplicated effort, lost trust.

The most effective implementations position governance as enablement: guardrails that let people move faster safely, not gates that stop movement.

Technology: The Amplifier, Not the Solution

With people and processes in place, technology becomes the amplifier rather than the solution. The most compelling vendor demonstrations weren't about technical capabilities; they were about how technology enables better collaboration, faster decision-making, and trusted outcomes.

Evaluate your transformation through all three dimensions simultaneously. If you're investing heavily in technology but not addressing skills development and governance processes, you're setting yourself up for expensive failures.

Regulatory Intelligence: Governance Gets Strategic

The collaborative panel featuring the ICO, FCA, and Ofcom, three major UK regulators discussing their roles in an AI-enabled world, signals an important shift.

Rather than reacting to problems after they occur, the regulators are proactively setting out how to think about AI governance, data protection, and consumer safety. This matters because proactive regulatory alignment is becoming a competitive advantage. While competitors scramble with remediation, organizations that build with compliance from the start move faster and with greater confidence.

The regulators aren't trying to stop innovation; they're trying to ensure it happens responsibly.

Regulatory compliance is increasingly a competitive differentiator. Organizations in highly regulated sectors should prioritize platforms and practices that help them meet these requirements rather than creating additional compliance headaches. Factor regulatory positioning into your platform selection criteria now.

Three Strategic Imperatives from Big Data LDN 2025

Invest in Foundations Before Racing to Agents - The organizations demonstrating real AI success had established semantic layers, governance frameworks, and data product practices first. Don't skip generations of maturity: the excitement around AI agents is justified, but the winners will be those who deploy them on solid foundations.

Solve for People and Process, Not Just Technology - Technology platforms are maturing rapidly, and open standards (Delta Lake, Iceberg) reduce vendor lock-in risks. But people capability remains the constraint. Focus on building internal data literacy and establishing governance as enablement, not bureaucracy.

Focus on Patterns and Practices, Not Specific Tools - Many organizations are in a position to skip generations of technology, jumping from legacy systems to modern cloud-native architectures. But with limited hands-on experience, technology selection feels daunting. By focusing on patterns (data as a product) and practices (DataOps), you maintain flexibility to transition between platforms as needs evolve.

Final Thought

Big Data LDN 2025 demonstrated that the industry continues to evolve at pace. The most valuable discussions weren't about which platform has the fastest query performance or the most connectors. They were about how organizations successfully navigate the socio-technical complexity of data transformation, how they build trustworthy AI on solid foundations, and how they develop the organizational capabilities that technology alone can never provide.

For senior leaders making strategic decisions now: the opportunity is significant, but so is the risk of rushing ahead without proper foundations. The winners will be those who recognize that AI agents represent the next chapter, not the entire story, and that the story requires careful authoring across people, process, and technology.

Ready to assess your organization's readiness for agentic intelligence? Consider where you are across the three imperatives above. The organizations thriving in 2025 started this foundational work in 2022-2023. Don't wait for the next Big Data LDN to realize you're behind.

Note: Big Data LDN records and publishes theatre sessions to YouTube in the weeks following the event. If you missed sessions you prioritized, they'll soon be available to watch at your own pace. Also consider smaller community-driven events like DATA:Scotland, Data Science Festival, and SQLbits (on the Saturday), which offer free attendance at a less overwhelming scale.