Under the Hood: What Makes Polars So Scalable and Fast?

TL;DR: Polars' impressive performance (5-20x faster than Pandas) comes from multiple architectural innovations working together: a Rust foundation provides low-level performance and memory control; a columnar storage model optimizes analytical workloads; lazy evaluation enables a sophisticated query optimizer that can rearrange, combine, and streamline operations; parallel execution automatically distributes work across CPU cores; and vectorized processing maximizes modern CPU capabilities. By bringing database optimization techniques to DataFrame operations, Polars delivers exceptional performance while maintaining an elegant API.

In our previous article, we introduced Polars as a next-generation DataFrame library that's taking the Python data ecosystem by storm. We explored its origin story, key features, and how it fits into the broader data landscape.

Now, let's look under the hood to understand how Polars achieves its remarkable performance. This isn't just an academic exercise - understanding these mechanisms will help you write more efficient code, debug performance issues, and make informed decisions about when and how to use Polars in your projects.

Ritchie Vink, Polars' creator, often emphasizes that Polars' speed comes from multiple factors working together rather than a single performance trick. This philosophy mirrors the "aggregation of marginal gains" strategy championed by Sir Dave Brailsford, who led the British Olympic cycling team to world dominance. Just as Brailsford believed that a 1% improvement in many small areas would accumulate into a significant competitive advantage, Polars achieves its blazing speed not through a single breakthrough, but by meticulously optimizing a multitude of small details. Let's examine each of these factors in detail.

The Origin Story: From Performance Challenge to DataFrame Revolution

Every successful open-source project has an origin story, and Polars' begins with Ritchie Vink.

In late 2019, while learning Rust (then an emerging systems programming language), Vink faced a practical problem: he needed to join two CSV files efficiently. Rather than setting up a database for this seemingly simple task, he decided to implement his own join algorithm in Rust.

When he benchmarked his implementation against the popular Python package Pandas, the results were disappointing: his code was slower. For most developers, this might have been the end of the experiment. For Vink, it was the beginning of a journey.

"This unsatisfying result planted the seed of what would later become Polars," Vink explains1. The challenge sparked his curiosity: why was Pandas faster, and how could he improve his implementation?

As he dove deeper into database engines, algorithms, and performance optimization, his goals evolved. What began as a simple join algorithm grew into a DataFrame package for Rust, and eventually into a high-performance query engine designed to rival industry standards in the Python ecosystem.

The name "Polars" itself carries a playful significance - the polar bear representing something stronger than a panda (a nod to the incumbent Pandas library), with the "rs" suffix reflecting its Rust foundation.

In March 2021, Vink released Polars on PyPI, initially as a research project. Its exceptional performance quickly gained attention, and by 2023, Polars had grown into its own company, with Vink at the helm as its creator and CEO.

Designed for Analytical Workloads

Polars is specifically designed for analytical processing (OLAP) rather than transactional workloads (OLTP). This means it excels at operations common in data analysis:

- Aggregations across large datasets

- Complex joins between tables

- Filter operations that reduce large datasets

- Transformations that reshape or derive new columns

- Time series operations

This analytical focus drives decisions throughout Polars' design, from its columnar storage (optimal for reading subsets of columns) to its execution model (optimized for scanning and processing large volumes of data).

Built on Database Research

Unlike many DataFrame libraries that evolved organically from array manipulation libraries (Pandas is built on NumPy), Polars applies decades of database research to DataFrame operations. This brings sophisticated query optimization techniques, columnar processing, and other database innovations directly to the Python data ecosystem.

Vink emphasizes this distinction: "Polars respects decades of relational database research"2. This isn't just marketing - it's reflected in Polars' architecture, from its query optimizer to its columnar storage to its expression system.

The Rust Foundation: Performance from First Principles

At the core of Polars' performance advantage is its implementation in Rust, a systems programming language that offers several key advantages for a high-performance DataFrame library:

- Zero-cost abstractions - Rust's compiler generates machine code that's as efficient as hand-written C, without the safety risks

- Fine-grained memory control - direct control over memory allocation and layout

- No garbage collection - predictable performance without GC pauses

- Memory safety guarantees - protection against common bugs like buffer overflows and use-after-free

- Fearless concurrency - safe parallelism without data races

Unlike Pandas, which is built on a mix of Python, Cython, and C through NumPy, Polars is written entirely in Rust from the ground up. This means every performance-critical component - from memory management to algorithm implementation - can be optimized with low-level control.

Python Bindings: Best of Both Worlds

While Polars' core is Rust, it exposes a carefully designed Python API through bindings. This gives users the convenience and familiarity of Python with the performance of Rust:

# What you write in Python
result = df.select(
    pl.col("value").sum()
)

What actually happens:

- The Python code builds an abstract query plan

- This plan is passed to the Rust engine (via bindings, within the same OS process)

- The Rust engine optimizes the plan

- The Rust engine executes the plan, releasing Python's Global Interpreter Lock (GIL) so it can run multi-threaded without Python involvement

- Results are returned to Python as Arrow-formatted memory buffers: Python receives a pointer to Rust-managed memory, not a copy of the data

The last stage above is important: because Arrow is a specification, different tools that conform to it can share data without serialization:

As Vink explains: "if you know that a process can deal with Arrow data you can say 'I have some memory laying around here, it's laid out according to the Arrow specification' - at that point you can say to another process 'this is the specification and this is the pointer to where the data is'. If you read this according to this specification you can use this data as-is without needing to serialize any data"1

This is the zero-copy benefit. When Polars returns results to Python there's no conversion - just a pointer to Arrow-formatted memory.

This architecture minimizes the "Python tax": performance-critical computation happens in Rust-managed memory and threads, while Python remains a thin orchestration layer. This is why using Python lambdas via apply (renamed map_elements in recent Polars releases) is discouraged: every call back into Python forces Polars to acquire the GIL, blocking parallel execution.

Columnar Architecture: Designed for Analytical Workloads

Polars stores data in columnar format, conforming to the Apache Arrow specification. This architecture provides the potential for significant performance gains - but realising that potential requires an engine built to exploit it.

What the columnar format enables:

- Better compression: Homogeneous data types stored contiguously compress more efficiently (see below)

- Selective I/O: Column-oriented storage makes it possible to read only needed columns

- Cache-friendly access: Contiguous memory layout allows efficient CPU cache utilisation

- SIMD potential: Homogeneous data can be processed with vectorised CPU instructions

What Polars adds on top:

These benefits don't materialise automatically - they require an engine purpose-built to exploit them. As Vink emphasises: "We've written Polars from scratch - every compute is from scratch."2 The Arrow specification provides the memory layout; Polars provides the query engine that makes it fast.

Let's compare row-based and columnar storage visually:

Row-based storage (like traditional databases):

[Row 1: float1, string1, date1] -> [Row 2: float2, string2, date2] -> [Row 3: float3, string3, date3]

Columnar storage (like Polars):

value: [float1, float2, float3]

name: [string1, string2, string3]

date: [date1, date2, date3]

When computing something like SUM(value), a columnar system only needs to load the value array, while a row-based system loads all data, including unused columns.
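The difference can be sketched in plain Python. This is only an illustration of the two layouts using lists and tuples with made-up values, not how Polars or Arrow store data internally.

```python
# Row-oriented layout: one tuple per record.
rows = [
    (1.5, "alpha", "2024-01-01"),
    (2.5, "beta", "2024-01-02"),
    (4.0, "gamma", "2024-01-03"),
]

# Column-oriented layout: one list per field.
columns = {
    "value": [1.5, 2.5, 4.0],
    "name": ["alpha", "beta", "gamma"],
    "date": ["2024-01-01", "2024-01-02", "2024-01-03"],
}

# SUM(value) over rows walks every record, pulling name and date along too.
row_total = sum(record[0] for record in rows)

# SUM(value) over columns reads a single contiguous list and nothing else.
column_total = sum(columns["value"])

print(row_total, column_total)
```

Both totals are identical; what changes is how much unrelated data has to be touched to compute them.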

Compression Benefits with Columnar Storage

Columnar formats, both Apache Arrow in memory and Parquet on disk, offer exceptional compression opportunities, particularly for columns with low cardinality (few unique values):

Dictionary encoding: For low-cardinality columns (like categories, countries, or status codes), values can be replaced with integer indexes into a dictionary of unique values:

Original: ["USA", "Canada", "USA", "Mexico", "Canada", "USA"]
Dictionary: ["USA"(0), "Canada"(1), "Mexico"(2)]
Encoded: [0, 1, 0, 2, 1, 0] (much smaller than storing the strings)

Run-length encoding (RLE): For columns with repeated consecutive values, store the value and count:

Original: [5, 5, 5, 5, 5, 7, 7, 7, 8, 8, 8, 8]
Encoded: [(5,5), (7,3), (8,4)] (value, count)

Delta encoding: For monotonically increasing values (like timestamps or IDs), store differences:

Original: [1000, 1005, 1010, 1015, 1020]
Encoded: [1000, 5, 5, 5, 5] (first value, then differences)

These compression techniques are particularly effective because each column contains homogeneous data types. In row-based storage, compression across different data types is much less efficient.
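The three encodings are simple enough to sketch in a few lines of plain Python. The function names below are illustrative only; real columnar formats implement these schemes at the byte level with far more care.

```python
from itertools import groupby


def dictionary_encode(values):
    """Replace each value with an index into a list of unique values."""
    dictionary = list(dict.fromkeys(values))  # unique values, first-seen order
    index = {value: i for i, value in enumerate(dictionary)}
    return dictionary, [index[value] for value in values]


def run_length_encode(values):
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


def delta_encode(values):
    """Keep the first value, then store successive differences."""
    return values[:1] + [b - a for a, b in zip(values, values[1:])]


dictionary, codes = dictionary_encode(
    ["USA", "Canada", "USA", "Mexico", "Canada", "USA"]
)
runs = run_length_encode([5, 5, 5, 5, 5, 7, 7, 7, 8, 8, 8, 8])
deltas = delta_encode([1000, 1005, 1010, 1015, 1020])

print(dictionary, codes)
print(runs)
print(deltas)
```

Each function reproduces the corresponding worked example above.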

Metadata and Statistics for Query Optimization

File formats like Parquet (frequently used with Polars) use columnar storage and additionally store column-level statistics that enable powerful optimization when reading data:

Min/max statistics - each chunk of column data stores minimum and maximum values, allowing for predicate pushdown:

# If a data chunk has max_value=50 and your query is:
query = pl.scan_parquet("data.parquet").filter(pl.col("value") > 100)
# The entire chunk can be skipped without reading any data

Null counts and positions - track where NULL values appear, allowing for more efficient processing

Value distribution information - some formats store approximate histograms or count distinct estimates

Row groups and column chunks - data is organized into row groups with separate column chunks, making it possible to read only relevant portions

When scanning multiple Parquet files (a common Big Data scenario), these statistics become even more powerful. For example, when scanning a directory of parquet files such as the example below:

query = pl.scan_parquet("data/*.parquet").filter(pl.col("date") > pl.date(2023, 1, 1))

Polars can use file-level statistics to skip entire files without opening them if they don't contain relevant data.
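The skipping logic itself is straightforward. The sketch below models it in plain Python with hypothetical per-chunk statistics held in dictionaries; it stands in for the idea only, and is not how Polars or Parquet readers are implemented.

```python
# Hypothetical per-chunk statistics, similar in spirit to the min/max values
# Parquet keeps for each column chunk.
chunks = [
    {"min": 1, "max": 50, "values": [1, 20, 50]},
    {"min": 60, "max": 140, "values": [60, 101, 140]},
    {"min": 150, "max": 300, "values": [150, 300]},
]


def scan_greater_than(chunks, threshold):
    """Return matching values and the number of chunks actually read."""
    matches, chunks_read = [], 0
    for chunk in chunks:
        if chunk["max"] <= threshold:
            continue  # no value can satisfy `value > threshold`: skip it
        chunks_read += 1
        matches.extend(v for v in chunk["values"] if v > threshold)
    return matches, chunks_read


matches, chunks_read = scan_greater_than(chunks, 100)
print(matches, chunks_read)
```

Only two of the three chunks are read: the first is ruled out by its maximum alone.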

This metadata-driven optimization is crucial for performance when working with large datasets spread across many files. It is particularly impactful on cloud storage platforms such as Amazon S3 or Microsoft OneLake which implement data lake or lakehouse architectures where data tends to be written in Parquet or Delta format. This is a key advantage of columnar formats that Polars fully leverages and we'll illustrate this in action in our next blog.

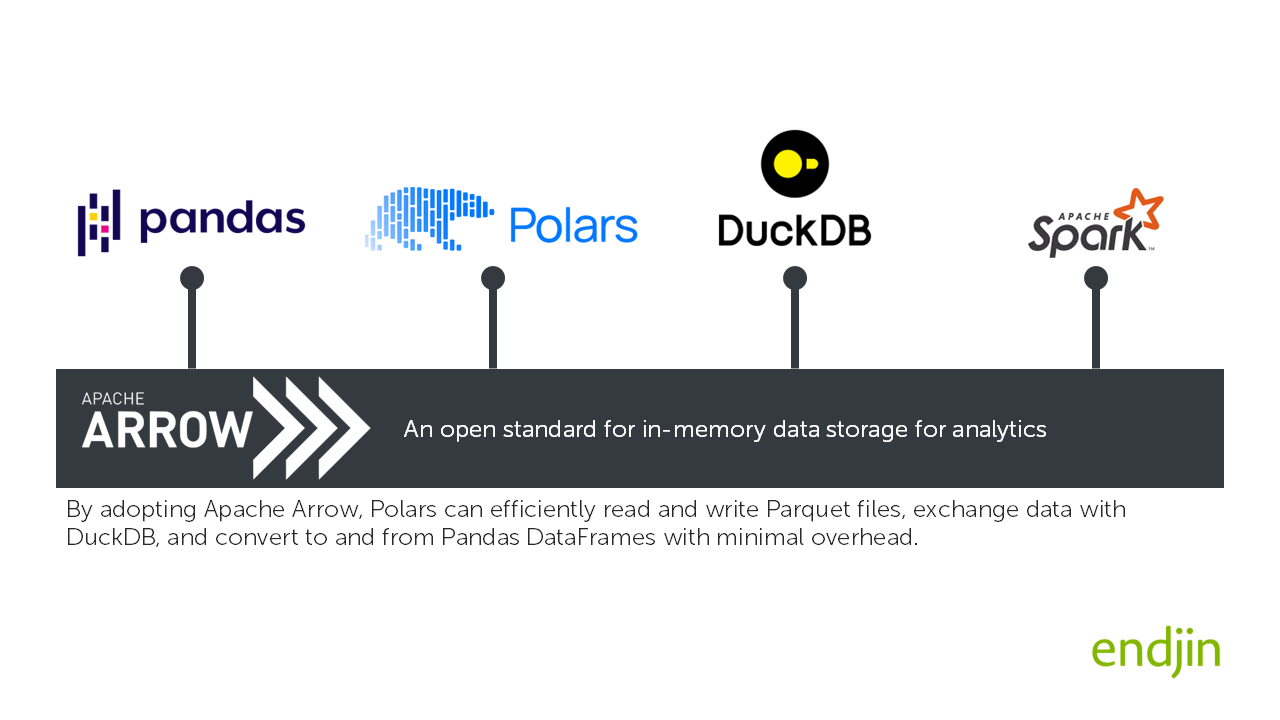

Apache Arrow Memory Model

Polars implements columnar storage using the Apache Arrow specification for in-memory analytical data storage, enabling seamless integration with a growing ecosystem of popular data tools which have adopted the same standard. This includes Apache Spark, pandas and DuckDB.

Apache Arrow is particularly popular for its ability to handle large datasets efficiently and its support for zero-copy data sharing between different tools which may have been written in different languages - this eliminates the need for a serialization and deserialization overhead. This feature is crucial for applications that require low-latency data access and processing, such as machine learning pipelines, data streaming systems, high-performance computing and data engineering, where you are often integrating multiple tools to deliver the solution.

Polars implements its own query engine while adhering to the Arrow specification for memory layout. This foundation allows Polars to efficiently process data without the overhead of converting between different memory representations.

This approach delivers two key benefits:

- Zero-copy data sharing between processes and tools that understand Arrow.

- Ecosystem compatibility with the growing universe of Arrow-enabled tools.

For example, because it adopts Apache Arrow, Polars can efficiently read and write Parquet files, exchange data with DuckDB, and convert to and from Pandas DataFrames with minimal overhead. This inter-operability helps with migration as it allows incremental adoption of Polars alongside legacy technology. This is illustrated below:

Composable Expression System

Polars' expression system represents perhaps its most elegant innovation from a user perspective. Expressions in Polars are more readable and give the optimizer visibility of the logic you want to apply.

Vink is emphatic about the importance of expressions: "we see the requirement of a Lambda... as sort of a failure of our API."1 This philosophy drives continuous improvement of the expression system to make it increasingly flexible.

Here's an example of such a Polars expression from Part 3 of this series. We'll provide more background there, but hopefully you will see similarities with the PySpark SQL and DataFrame API and functional programming:

countries = (
    countries
    .filter(~pl.col("region").is_in(["Aggregates"]))
    .select(["country_code", "country_name", "region", "capital_city", "longitude", "latitude"])
    .sort(["country_name"])
)

This composable expression system is a domain specific language (DSL) which provides the foundation for further optimisations we set out below.

Lazy Evaluation and Query Optimization

One of Polars' most distinctive features is its query optimizer, which draws directly from database technology. In Polars' lazy execution mode, operations aren't performed immediately; instead they are collected into a query plan. Before execution, Polars analyzes this plan and applies optimizations that can yield order-of-magnitude performance improvements, and users get them automatically without changing their code.

While Pandas executes operations immediately, Polars can defer execution to build and optimize a complete query plan.

This is similar to how LINQ Expression Trees work in .NET, and to reactive queries in Rx and Reaqtor, where execution of the query is deferred until a result is needed, adopting the futures and promises design pattern.

How Lazy Evaluation Works

When using Polars' lazy API, operations don't execute immediately but instead build a logical query plan:

By using pl.scan_csv() in place of pl.read_csv() in the code below, the data is not loaded or processed. Instead it returns a polars.LazyFrame object which allows Polars to build a query plan.

plan = (
    pl.scan_csv("large_file.csv")
    .filter(pl.col("value") > 100)
    .group_by("category")
    .agg(pl.col("value").mean().alias("avg_value"))
)

It means that subsequent operations we may wish to add simply get added to the query plan, for example:

plan = (
    plan
    .filter(pl.col("category").is_in(["Category X", "Category Y", "Category Z"]))
    .sort("avg_value", descending=True)
)

Execution happens only when you call collect():

result = plan.collect()

By separating the stages of building, optimising and executing the plan, Polars can analyze the entire operation chain and apply optimizations.

The Query Optimizer

Polars' optimizer applies various transformations to the logical plan:

- Predicate pushdown - move filters earlier to reduce data volume

- Projection pushdown - only read necessary columns from source

- Join optimization - select efficient join strategies based on data properties

- Common subexpression elimination - compute repeated expressions only once

- Function simplification - replace complex operations with simpler equivalents

We show this in action in our next blog.

In benchmark tests, these optimizations alone can provide 5-10x performance improvements over naively executed queries.
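To see why reordering matters, consider a toy model of predicate pushdown in plain Python. The `expensive_transform` function and its call counter are hypothetical stand-ins for real work; the point is simply that filtering first means less data reaches the costly step.

```python
def expensive_transform(row, counter):
    """Stand-in for a costly per-row computation; counts how often it runs."""
    counter["calls"] += 1
    return {**row, "score": row["value"] ** 2}


rows = [
    {"category": c, "value": v}
    for c, v in [("A", 1), ("B", 2), ("A", 3), ("C", 4), ("A", 5)]
]

# Naive order: transform every row, then filter.
naive_counter = {"calls": 0}
naive = [
    r
    for r in (expensive_transform(row, naive_counter) for row in rows)
    if r["category"] == "A"
]

# Pushed-down order: filter first, so the transform only sees matching rows.
pushed_counter = {"calls": 0}
pushed = [
    expensive_transform(row, pushed_counter)
    for row in rows
    if row["category"] == "A"
]

print(naive_counter["calls"], pushed_counter["calls"])
```

The rewrite is safe here because the filter depends only on `category`, which the transform does not change. A query optimizer has to establish that kind of independence before moving a predicate.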

Beyond Basic Optimizations

Polars' optimizer goes beyond simple rule-based transformations to apply more sophisticated optimizations:

- Query rewriting - replace operation sequences with more efficient alternatives

- Specialized algorithms - use purpose-built implementations for common patterns

- Meta-optimizations - decide whether certain optimizations are worthwhile based on data characteristics

For example, if you write df.sort().head(10), Polars might replace this with a top-k algorithm that's much more efficient than sorting the entire dataset.
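The same trade-off can be demonstrated with the Python standard library. This is an analogy using `heapq`, not the algorithm Polars uses internally.

```python
import heapq
import random

random.seed(0)
values = [random.randint(0, 1_000_000) for _ in range(10_000)]

# Full sort, then take the first 10: O(n log n).
via_sort = sorted(values)[:10]

# Top-k selection with a bounded heap: O(n log k), no full ordering needed.
via_top_k = heapq.nsmallest(10, values)

print(via_sort == via_top_k)
```

Both approaches return the same ten values, but the top-k version never orders the remaining 9,990.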

Parallel Execution: Using All Your Cores

Polars automatically parallelizes operations across all available cores. This isn't something users need to configure or enable; it happens transparently. In an era of 8, 16, or even more CPU cores on standard laptops (I have 20 on my Microsoft Surface Studio laptop! 💪😎), this automatic parallelization represents a massive performance advantage without requiring any special coding patterns.

Unlike Pandas, which primarily operates on a single CPU core, Polars automatically parallelizes operations across all available cores by leveraging its Rust foundations. This parallelization happens in two complementary ways:

Parallel-Aware Query Nodes

Major operations like joins, group-bys, filters, and sorts know how to divide work across threads. Each node in the query plan can implement its own parallelization strategy based on the specific operation and data characteristics.

Expression Thread Pool

For expression evaluation, Polars uses a work-stealing thread pool:

- Work is divided into manageable chunks

- Chunks are distributed across a pool of worker threads

- When a thread finishes its work, it "steals" pending work from other threads

- This continues until all work is complete

This approach maximizes CPU utilization while avoiding the overhead of excessive thread creation and context switching.
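The chunk-and-distribute part of this pattern can be sketched with Python's standard library. Note the assumptions: `ThreadPoolExecutor` hands out work from a shared queue rather than by work stealing, and the GIL means this pure-Python version will not actually run faster. It only illustrates the shape of the approach, with hypothetical function names.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, chunk_size):
    """Divide the input into manageable, equally sized chunks."""
    return [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]


def parallel_sum(values, chunk_size=1_000, workers=4):
    """Sum each chunk on a worker thread, then combine the partial results."""
    chunks = split_into_chunks(values, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum, chunks))
    return sum(partial_sums)


data = list(range(10_000))
total = parallel_sum(data)
print(total)
```

In Polars the equivalent work runs on Rust threads outside the GIL, which is where the real speed-up comes from.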

The beauty of Polars' parallelism is that it's completely transparent. You don't need to explicitly parallelize your code or manage threads yourself.

The command pl.thread_pool_size() will return the number of threads that Polars is using. It is set automatically by the Polars engine and will generally equal the number of cores on your CPU. It can be overridden by setting the POLARS_MAX_THREADS environment variable before the process starts.

Vectorized Execution: Batch Processing for Performance

Polars uses vectorized execution to process data efficiently by leveraging modern hardware and SIMD (see below). Rather than processing one value at a time (like traditional loops) or entire columns at once (which can exhaust memory), Polars processes data in optimally-sized batches.

The Goldilocks Zone: Vector Sizing

Polars processes data in vectors of 1024-2048 items. This size is carefully chosen to:

- Fit in CPU L1 cache - typically 32-128KB per core on modern processors

- Amortize function call overhead - processing batches reduces per-item overhead

- Enable compiler optimizations - predictable sizes allow better code generation

- Balance memory pressure - not too large to cause cache misses, not too small to waste cycles

This "Goldilocks" approach to batch sizing delivers significant performance benefits over both row-by-row and whole-column processing.
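As a rough illustration of batch-at-a-time processing, the sketch below walks a column in fixed-size slices and keeps only a running total. The batch size of 1024 is taken from the range quoted above; the function names are hypothetical, and plain Python will not show the cache effects that make this worthwhile in compiled code.

```python
BATCH_SIZE = 1024  # within the 1024-2048 range quoted above


def iter_batches(values, batch_size=BATCH_SIZE):
    """Yield consecutive fixed-size slices of a column."""
    for start in range(0, len(values), batch_size):
        yield values[start:start + batch_size]


def batched_sum_of_squares(values):
    """Process one batch at a time, keeping only a running total."""
    total = 0
    for batch in iter_batches(values):
        total += sum(v * v for v in batch)
    return total


column = list(range(5_000))
batches = list(iter_batches(column))
result = batched_sum_of_squares(column)
print(len(batches), result)
```

A 5,000-item column is split into four full batches and one partial batch, and at no point does an intermediate result the size of the whole column need to exist.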

SIMD Instructions: One Instruction, Multiple Data

Modern CPUs include special registers that can process multiple values with a single instruction - known as SIMD (Single Instruction, Multiple Data). The specific capabilities vary by hardware:

| Instruction Set | Register Width | Values per Operation (32-bit) | Platform |

|---|---|---|---|

| SSE2 | 128-bit | 4 | Intel/AMD (baseline since ~2001) |

| AVX2 | 256-bit | 8 | Intel/AMD (since ~2013) |

| AVX-512 | 512-bit | 16 | Intel Xeon, some consumer chips |

| NEON | 128-bit | 4 | ARM (including Apple Silicon) |

Without SIMD:

Instruction 1: a[0] + b[0]

Instruction 2: a[1] + b[1]

Instruction 3: a[2] + b[2]

Instruction 4: a[3] + b[3]

With SIMD (one instruction does the work of four):

Instruction 1: [a[0],a[1],a[2],a[3]] + [b[0],b[1],b[2],b[3]]

Historically, exploiting SIMD required writing platform-specific assembly or intrinsics. This presented a significant portability challenge. However, modern compilers (GCC, Clang, and the Rust compiler via its LLVM backend) can now auto-vectorize code written in a certain style: tight loops, minimal branching, and predictable memory access patterns.

Polars is written to exploit SIMD instructions, through a combination of compiler auto-vectorization and, where necessary, manual implementation. The details vary by operation, but the result is transparent to the user: significant performance gains on modern hardware without platform-specific configuration.

Memory Management: Efficiency from the Ground Up

Polars' architecture includes careful attention to memory management:

Efficient Data Types and Memory Layout

Polars has adopted the Apache Arrow memory specification and therefore its data types are based on that specification. The advantage of this approach is that data in memory is optimized for both memory usage and processing speed:

- Primitive types - stored as packed arrays of values, offered at different bit sizes: 8, 16, 32 and 64.

- Decimal - 128 bit type, can exactly represent 38 significant digits.

- String data - uses a string cache for repeated values

- Categorical - optimal for encoding string based categorical columns which have low cardinality

- Enum - similar to Categorical but the categories are fixed and must be defined prior to data being loaded

- Temporal types - represented as efficient integers internally, Int32 for Date (days since Unix epoch) and Int64 for Datetime (ns, us, or ms since Unix epoch), Duration and Time (ns since midnight).

- Nested - enable complex data structures to be modelled via Array (fixed length), List (any length) and Struct (key value pairs).

- Missing values - uses "validity bitmaps" rather than sentinel values

Each of these choices reduces memory consumption compared to Pandas' approach.
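Two of the ideas in this list, dates stored as integers and validity bitmaps for missing values, can be sketched together in plain Python. This is a simplified model with hypothetical function names: the bitmap is held in a single Python integer rather than a packed byte buffer.

```python
from datetime import date

EPOCH = date(1970, 1, 1)


def encode_dates(values):
    """Return (days_since_epoch, validity_bitmap) for a list of optional dates.

    Bit i of the bitmap is 1 when slot i holds a value and 0 when it is null.
    """
    days, bitmap = [], 0
    for i, value in enumerate(values):
        if value is None:
            days.append(0)  # placeholder; the bitmap marks this slot as null
        else:
            days.append((value - EPOCH).days)
            bitmap |= 1 << i
    return days, bitmap


def is_valid(bitmap, i):
    """Check whether slot i holds a real value."""
    return bool((bitmap >> i) & 1)


days, bitmap = encode_dates([date(1970, 1, 2), None, date(2000, 1, 1)])
print(days, bin(bitmap))
```

Because nulls live in the bitmap, the placeholder `0` is never mistaken for 1 January 1970, and no sentinel value has to be reserved in the data itself.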

Zero-Copy Operations Where Possible

Polars uses zero-copy operations whenever feasible:

Selecting columns is a zero-copy operation:

subset = df.select("a", "b", "c") # No data is copied

Filtering can also be highly efficient:

filtered = df.filter(pl.col("a") > 0) # Minimal memory overhead

This approach minimizes memory usage and improves performance by avoiding unnecessary data copying.

Spill to Disk for Large Workloads

For operations that don't fit in memory, Polars can transparently spill to disk:

- Process data in manageable chunks

- Write intermediate results to disk when memory pressure is high

- Read back as needed for final results

This capability allows Polars to handle datasets larger than RAM, particularly with its streaming engine. But it comes at a performance penalty due to file IO generally being an order of magnitude slower than RAM IO.
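The chunk, spill and read-back cycle is the same pattern as a classic external merge sort, which is used here as a stand-in. This is not Polars' implementation: the function name is hypothetical, and the input list is held in memory purely to keep the sketch short.

```python
import heapq
import os
import tempfile


def external_sort(values, chunk_size):
    """Sort integers by spilling sorted chunks to disk and merging them back.

    Returns the sorted list and the number of chunks spilled.
    """
    paths = []
    with tempfile.TemporaryDirectory() as spill_dir:
        # Phase 1: sort manageable chunks and write each one to disk.
        for start in range(0, len(values), chunk_size):
            chunk = sorted(values[start:start + chunk_size])
            path = os.path.join(spill_dir, f"chunk_{len(paths)}.txt")
            with open(path, "w") as handle:
                handle.writelines(f"{v}\n" for v in chunk)
            paths.append(path)

        # Phase 2: stream the sorted runs back and merge them lazily.
        handles = [open(path) for path in paths]
        try:
            runs = [(int(line) for line in handle) for handle in handles]
            return list(heapq.merge(*runs)), len(paths)
        finally:
            for handle in handles:
                handle.close()


result, spilled = external_sort([5, 3, 9, 1, 7, 2, 8], chunk_size=3)
print(result, spilled)
```

During the merge only one line per chunk needs to be in memory at a time, which is what lets the pattern scale beyond RAM at the cost of extra file I/O.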

Putting it all together: Polars architecture overview

At this stage we've covered all of the small incremental gains that Polars achieves, but it is also worth stressing that the architecture as a whole applies robust computer science:

The following diagram consolidates the end to end architecture we've described above:

The Polars expressions you write in Python form a Domain Specific Language (DSL) that allows you to describe operations declaratively.

This DSL is translated into an Intermediate Representation (IR) - what Vink describes as similar to "an AST in Python"1. You can inspect this using .explain() or .show_graph() on a LazyFrame.

The IR captures not just the chain of operations, but also the schema at each node. As Vink explains: "Polars knows the schema on any point in the lazy frame - on any node you can ask what the schema is."2 This enables Polars to detect type mismatches and other errors before execution begins.

The plan is then passed to the optimizer, which seeks opportunities to reduce computation and data volume - projection pushdown, predicate pushdown, common subexpression elimination, and more.

Finally, the optimised plan is passed to the appropriate execution engine. As Vink notes: "you can have different engines, different backends for different data sizes because you have the distinction between the front end and the back end"1 - whether that's the in-memory engine, streaming engine, or GPU-accelerated execution via NVIDIA RAPIDS.

The engine executes and the result is either returned to Python via Arrow-formatted memory (enabling zero-copy access), or written directly to storage formats like Parquet without materialising the full result in memory.

Performance in Practice: Research and Real-World Results

While theoretical advantages are important, what matters is real-world performance. Independent research and testing consistently show significant performance advantages for Polars across a wide range of operations.

Academic and Industry Research

A 2024 study by Felix Nahrstedt et al., published in the proceedings of the International Conference on Evaluation and Assessment in Software Engineering (EASE), compared the energy efficiency and performance of various Python data processing libraries. Their findings confirmed that Polars significantly outperforms Pandas:

- Polars consumed approximately 8 times less energy than Pandas in synthetic data analysis tasks with large dataframes

- For TPC-H benchmarks (an industry-standard decision support benchmark), Polars was ~40% more efficient than Pandas for large dataframes

More recently, Mimoune Djouallah published a benchmark on LinkedIn that involved processing 150 GB of CSV files and writing them to the Fabric lakehouse in Delta format. The benchmark was run on the smallest size of Fabric notebook, with only 2 cores and 16GB of RAM. The results placed Polars ahead of DuckDB and chDB.

Real-World Adoption

Beyond benchmarks, real-world adoption provides evidence of Polars' performance advantages. Organizations that have switched from Pandas to Polars frequently report:

- Batch processing jobs completing in minutes instead of hours

- Ability to process larger datasets without upgrading hardware

- Reduced cloud computing costs (and energy consumption) for data processing pipelines

- Faster inner dev loop for developers, reducing time to value

The growing adoption of Polars in production environments across various industries provides perhaps the strongest evidence of its performance benefits.

At endjin, our default choice for dataframe-driven pipelines is Polars. We only revert to Apache Spark for datasets that are genuinely "Big Data" in scale. We find that Polars is optimal for at least 90% of workloads.

Is there a commercial case?

We have not yet quantified the benefits, therefore we can't give you a concrete RoI.

However, we can say the positive impact of migrating to Polars is immediately apparent. Moving from Spark to Polars in production will typically reduce runtime and therefore the ongoing cost of running pipelines. But perhaps what is more apparent is that Polars unlocks the ability to develop and test locally: developers can use their laptops rather than spinning up extra capacity for development, which can often incur significant costs on Big Data platforms such as Databricks, Synapse and Microsoft Fabric. Developers are also more productive, because test suites run in seconds, not minutes. For example, when we migrated one workload from Spark to Polars, our test suite for a specific use case reduced from 60 minutes to 9 seconds. This allowed us to extend our test suite and run tests more frequently. The result was more confidence in releases, and reduced time to value.

Conclusion: Performance by Design

Polars' exceptional performance is the result of deliberate architectural choices and careful implementation, applying best practices in computer science and decades of database research. By combining a Rust foundation, columnar storage, query optimization, parallel execution, vectorized processing, and support for modern hardware features such as SIMD, Polars delivers dramatic performance improvements over traditional DataFrame libraries.

What makes Polars particularly remarkable is how it achieves this performance while maintaining an elegant, user-friendly API. The complexity of the underlying engine is hidden behind a clean interface that focuses on expressing what you want to do rather than how to do it efficiently.

For data practitioners working with datasets that fit on a single machine, Polars represents a significant advancement in processing capability. It brings many of the optimization techniques previously found only in sophisticated database systems directly to the Python ecosystem, packaged in a form that's accessible to data scientists and analysts. It's like giving a data engineer superpowers!

Data practitioners who have traditionally worked with Apache Spark on platforms like Databricks, Azure Synapse Analytics, or Microsoft Fabric are discovering that workloads they've historically run on distributed Spark clusters can be handled efficiently by Polars on a single machine.

The advantages of adopting Polars in place of the PySpark SQL and DataFrame API are compelling: simpler architecture without cluster management overhead, faster iteration cycles during development, lower infrastructure costs, and the ability to run complex data processing pipelines on commodity hardware or even locally. While Spark remains essential for truly massive datasets that require distributed processing, Polars' combination of performance and simplicity makes it an excellent choice for a substantial portion of analytical workloads.

This is Part 2 of our Adventures in Polars series:

- Part 1: Why Polars Matters - The Decision Makers Guide for Polars.

- Part 3: Code Examples for Everyday Data Tasks - Hands-on examples showing Polars in action.

- Part 4: Polars Workloads on Microsoft Fabric - Running Polars on Fabric with OneLake integration.

In our next article in this series, we'll show Polars in action.

Have you experienced performance improvements with Polars in your projects? What operations have you found particularly faster? Share your experiences in the comments below!