Polars: Faster Pipelines, Simpler Infrastructure, Happier Engineers

TLDR; Polars is a DataFrame library written in Rust with Python bindings. We've migrated our own IP and several of our customers from both Pandas and Spark to Polars, and the benefits extend beyond raw speed: faster test suites, lower platform costs, and an API that developers actually enjoy using. It's open source, has zero dependencies, and can be deployed on a broad range of infrastructure options. If you're still defaulting to Pandas or reaching for Spark when datasets grow, it's worth understanding what this new generation of tooling can offer.

Why We're Writing This

Over the past eighteen months, we've been migrating our core data engineering IP - and helping a number of our customers do the same - from PySpark- and Pandas-based solutions to Polars. The results have been compelling enough that we felt it was time to share what we've learned.

At endjin, Polars is our default choice for DataFrame-driven pipelines. We reach for Apache Spark on the few occasions when data volumes genuinely require distributed compute.

This is a practitioner's perspective on a tool we've bet on, deployed to production, and would choose again.

What We've Seen in Practice

The headline benefits are significant. Here's what changed when we migrated from PySpark to Polars:

| Metric | Before | After |
|---|---|---|
| Test suite execution | ~60 minutes (Spark spin-up) | ~30 seconds |
| Developer iteration cycle | Deploy to cluster, wait, check logs | Run locally, iterate, commit |
| Infrastructure model | Distributed compute on PaaS (e.g. Databricks) | Single-node commodity hardware |
| Monthly compute costs | Baseline | >50% reduction (one customer) |

Beyond the numbers, there's a qualitative shift that's harder to measure but equally important: developers spend less time fighting their tools and more time solving business problems.

The intuitive API and strict type system mean fewer runtime surprises. The lightweight footprint means local development works, giving developers access to modern IDE tooling and coding agents: a complete contrast to remote Spark environments and the frustrations of working in a web-browser-based UI. And because Polars is open source with zero dependencies, there's no vendor lock-in, no licensing complexity, and no heavyweight runtime to manage.

Independent research has measured the environmental impact too: Polars uses approximately 8x less energy than equivalent Pandas operations - something that matters increasingly to our clients with sustainability commitments.

It's time for organisations to seriously evaluate this new generation of data tooling. The technology has matured, the ecosystem is growing, and an increasing number of organisations are seeing order-of-magnitude gains from making the transition.

This blog aims to give you the information you need to determine whether deeper evaluation is merited for your organisation.

What is Polars?

As Polars creator Ritchie Vink puts it:

"Polars is a query engine with a DataFrame front end... it respects decades of relational database research."1

This is a subtle but important distinction. Describing Polars merely as a "DataFrame library" undersells it: the description above highlights the database-inspired query optimisation at its heart. At its core, Polars is designed to provide:

- Lightning-fast performance - 5-20x faster than Pandas for most operations, with some users reporting up to 100x speedups in specific scenarios

- Memory efficiency - dramatically reduced memory usage compared to Pandas

- An expressive, consistent API - a thoughtfully designed interface that balances power with readability

- Scalability on a single machine - making the most of modern hardware through parallelisation and efficient algorithms

Where Polars Fits

To understand where Polars adds value, it helps to see the landscape it sits within.

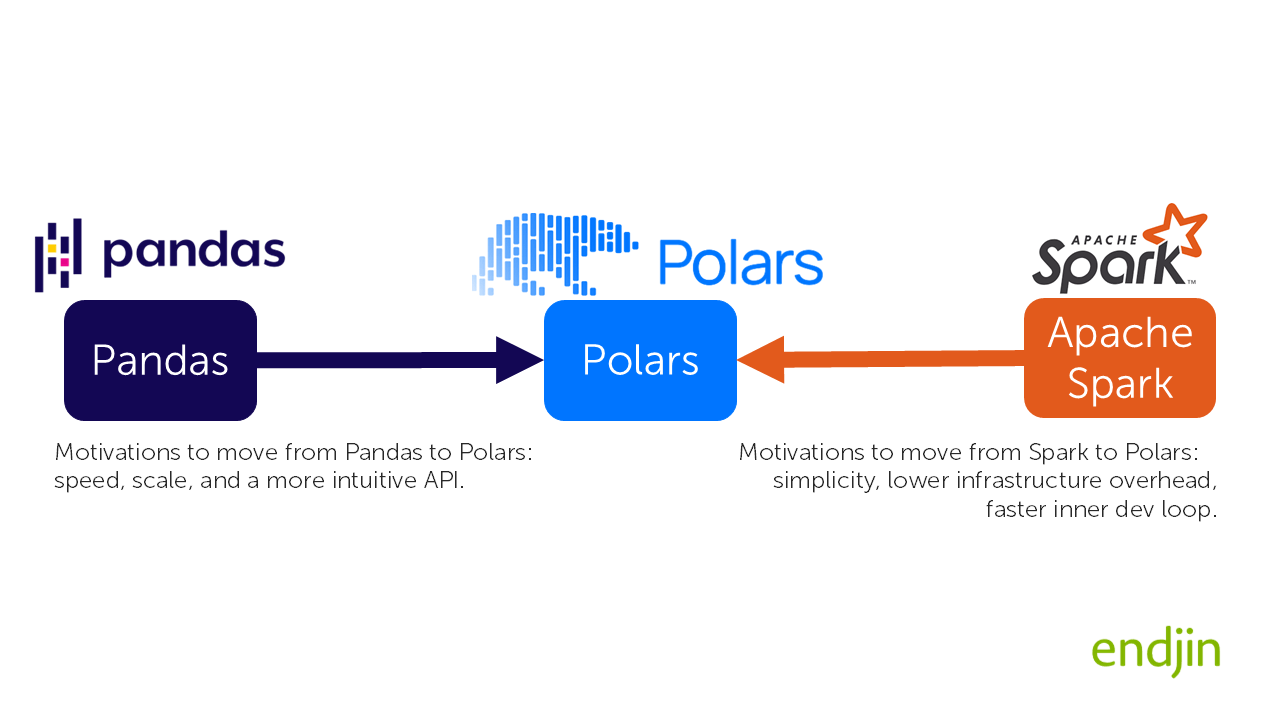

The Two Migration Paths

We see organisations coming to Polars from two directions:

From Pandas: Teams hitting scaling limits. Datasets that used to fit comfortably in memory now cause out-of-memory errors. Operations that used to be fast enough now take minutes. The reflexive answer is "we need Spark" - but that's often overkill.

From Spark: Teams realising they're over-engineered. They're paying for distributed compute to process datasets that would fit on a laptop or a single small commodity compute node. They're waiting for clusters to spin up to run tests. The infrastructure complexity is slowing them down rather than enabling them.

Polars sits in the middle: powerful enough to handle datasets that break Pandas, simple enough that you don't need a platform team to run it.

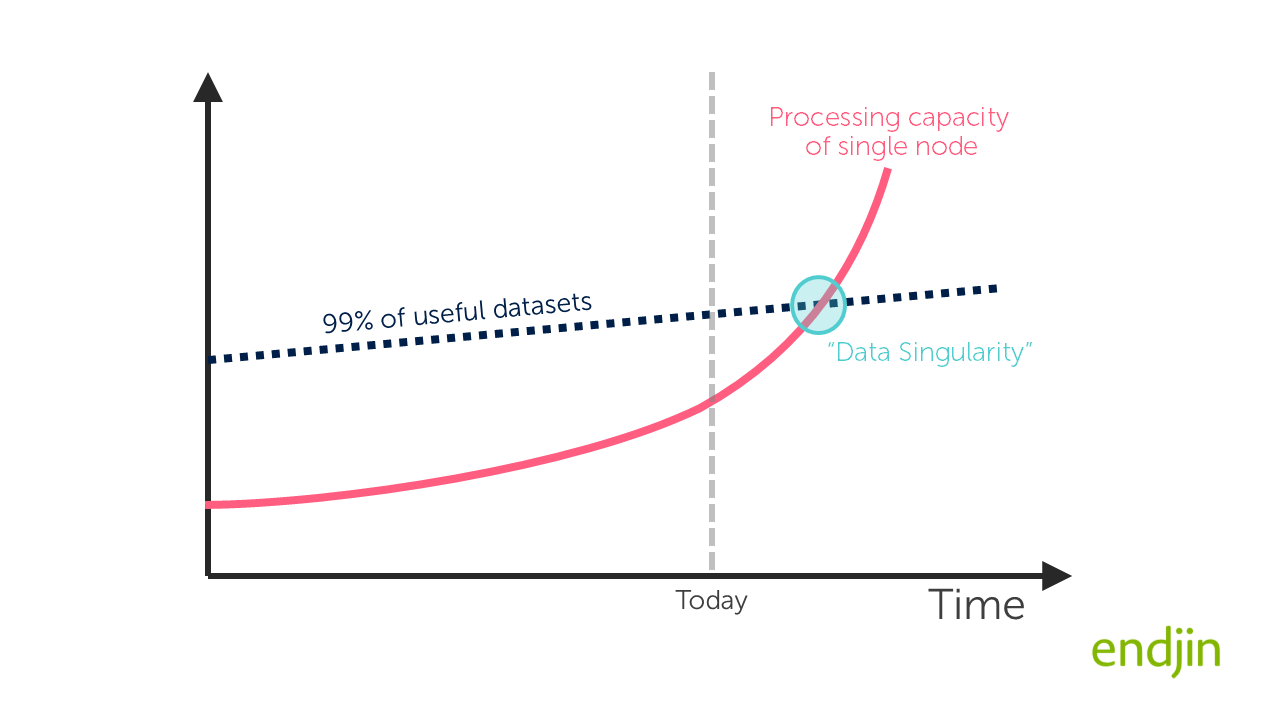

The Data Singularity

There's a broader trend at play here. Hannes Mühleisen (co-creator of DuckDB, co-founder and CEO of DuckDB Labs, and Professor of Data Engineering at Radboud University Nijmegen) describes what he calls the "data singularity":

"We are approaching a point where the processing power of mainstream single-node machines, [including laptops], will surpass the requirements of the vast majority of analytical workloads."

CPU core counts have increased dramatically. RAM is plentiful. NVMe storage offers throughput that would have seemed impossible a decade ago. But most data tools were designed before this shift, and they don't take advantage of it.

However, Polars does. It's designed from the ground up to exploit modern hardware: automatic parallelisation across all cores, efficient memory use, and algorithms optimised for contemporary CPU architectures.

This means datasets that "required" Spark five years ago can now run on a single node, provided you adopt the right tooling: in-process engines such as Polars or DuckDB.

What Makes This Possible

You don't need to understand the internals to benefit from Polars, but it helps to know why it's fast. Here's the short version:

Built for analytics - Polars is designed specifically for analytical workloads (aggregations, joins, transformations) rather than trying to be general-purpose. This focus drives design decisions throughout.

Automatic optimisation - when you write a Polars query, you're describing what you want, not how to compute it. Polars analyses your query and figures out the most efficient execution plan - reordering operations, eliminating redundant work, pushing filters down. You get database-grade query optimisation without writing SQL.

Parallelism by default - Polars automatically uses all available CPU cores. No configuration, no special coding patterns. On a 16-core laptop, that's a potential 16x speedup over single-threaded tools - and you get it for free.

Rust foundation - written in Rust (a systems programming language with C++-level performance), Polars has full control over memory layout and execution. There's no Python interpreter overhead in the hot path.

The practical upshot: you write readable, declarative code, and Polars makes it fast. As Polars creator Ritchie Vink puts it: "Write readable idiomatic queries which explain your intent, and we will figure out how to make it fast."

For those who want to go deeper, we explore the technical details in Part 2: What Makes Polars So Scalable and Fast?

Common Questions We Get

When we recommend Polars to clients, certain questions come up repeatedly. Here's how we answer them:

"Isn't Pandas 2.0 with PyArrow good enough now?"

Pandas has adopted Arrow for storage, which is a step forward. But the execution model is unchanged - Pandas still processes data single-threaded without query optimisation.

As Ritchie Vink has noted: "Pandas is using PyArrow kernels for compute... those are totally different implementations."2 Adopting Arrow for storage doesn't give you Polars' query optimiser or automatic parallelisation.

Our benchmarks show Polars still outperforming Pandas 2.x by 5-10x on typical analytical workloads - and often more on complex queries where optimisation matters most.

Furthermore, because Pandas operates only in "eager" execution mode, we tend to hit out-of-memory limits. Switching to Polars and leveraging its "lazy" execution model will often overcome that limitation, allowing you to get more mileage out of existing infrastructure.

"Why not just optimise our Spark jobs?"

You can, and sometimes you should. But ask yourself: do you actually need distributed compute?

We've seen teams running Spark clusters to process datasets that fit comfortably in memory on a single node. The overhead of cluster management, network serialisation, and distributed coordination often exceeds the benefit.

If your data fits on one machine, Polars will almost certainly be faster and simpler. If it doesn't, Spark (or Polars Cloud) makes sense. The key is being honest about which category you're in - and many teams overestimate.

"What's the migration risk?"

Lower than you might expect, with caveats.

The main prerequisite is good test coverage. If you have comprehensive tests for your data pipelines, migration becomes a matter of swapping out the implementation and verifying the outputs match.

When migrating from Pandas, we have found that Polars' stricter type system often catches bugs that Pandas was silently propagating. The APIs share conceptual similarities, especially if you're already using method chaining in Pandas. The mental model shift is less about syntax and more about embracing lazy evaluation and expressions over imperative loops and .apply().

Migration from the PySpark DataFrame API is more straightforward: the APIs are closer, and PySpark also uses lazy evaluation, so the mental model carries over.

We've migrated multiple production systems successfully. The pattern that works: migrate incrementally, run both implementations in parallel initially, validate outputs match, then cut over.

"Is this mature enough for production?"

Yes. Polars has been in production use since 2021. There's now a company (Polars Inc.) providing commercial support and building enterprise features. The ecosystem has reached critical mass.

Perhaps more tellingly, Microsoft has included Polars in the default build for Fabric Python Notebooks - they're not betting on immature technology.

"What if we need to scale beyond a single machine?"

Polars has a streaming engine for larger-than-RAM datasets on a single node. For true horizontal scaling, Polars Inc. offers Polars Cloud, which distributes queries across multiple machines while maintaining the same API.

And because Polars is built on Apache Arrow, it interoperates cleanly with Spark if you need to hand off to distributed compute for specific workloads.

"Can we use AI agents to do the migration?"

Yes, with appropriate guardrails. AI agents such as GitHub Copilot or Claude Code could be used for the heavy lifting.

An AI-assisted migration will be more effective if your legacy code base is well structured and documented. You will also likely need to document or provide examples of patterns that you want the AI agent to apply in the migrated code base. A suite of tests and "human in the loop" code reviews are essential.

We recommend doing the initial proof of value manually to understand the nuances, then capturing the learnings so they can be carried forward by the AI agent. For example, an AI agent is likely to carry imperative loops and .apply() from Pandas into a Polars version of the same code, which is suboptimal. By adding coding standards and examples into the AI agent's context, you will encourage it to use Polars' rich expression language and therefore take full advantage of its Rust-based engine.

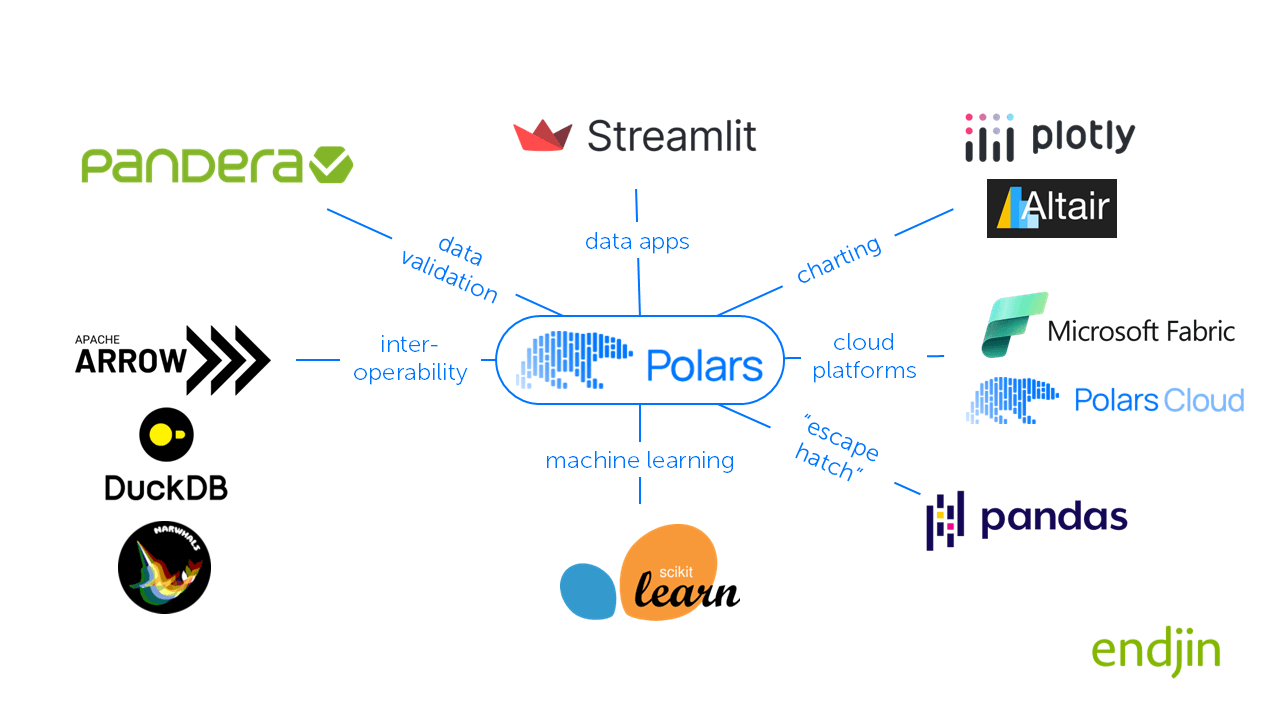

Our Stack

Polars doesn't exist in isolation. A key part of our evaluation was whether it integrates with the tools our clients already use.

Here's what we've found works well in practice:

Visualisation

- Plotly - Plotly works cleanly with Polars. While Python charting libraries often expect Pandas DataFrames, Polars' zero-copy conversion to Arrow makes passing data to plotting libraries efficient.

- Altair - a declarative statistical visualisation library that has excellent native support for Polars.

Data Validation

- Pandera - a statistical data validation toolkit. Pandera allows you to define DataFrame schemas (including checks for data types and value ranges) and validate your Polars DataFrames at runtime to ensure data quality.

Interoperability

- Apache Arrow - Polars is built on top of the Arrow specification. This allows zero-copy data exchange with other Arrow-based tools: you can pass a Polars DataFrame to pyarrow or other Arrow consumers without duplicating the data in memory.

- DuckDB + Polars - we often use these together. DuckDB can query Polars DataFrames directly via SQL without copying data. This lets us mix SQL (for complex window functions or ad-hoc exploration) with Polars expressions (for transformation pipelines) in the same workflow. See our DuckDB blog series for more details.

- Narwhals - a DataFrame-agnostic API that lets library maintainers write code once and support Polars, Pandas, and other DataFrame libraries automatically. Pass in a Polars DataFrame, get a Polars DataFrame back. This is how libraries like Altair added Polars support without taking on Polars as a dependency, keeping them lightweight and broadly compatible.

Machine Learning

- scikit-learn - Polars integrates well with the standard ML stack. You can pass Polars DataFrames directly to many scikit-learn models, or efficiently convert them to the required format for training.

Web Applications

- Streamlit - a popular framework for building interactive data apps. Streamlit has added native support for Polars, meaning you can pass pl.DataFrame objects directly to functions like st.dataframe() and st.line_chart() without manual conversion. We love Streamlit and have a series of videos on this topic, such as Getting Started with Python & Streamlit.

The "Pandas Escape Hatch"

- Pandas - the reality is that the Python ecosystem is vast, and you may find libraries that strictly require Pandas input. You can bridge this gap using .to_pandas().

Health Warning ⚠️: this converts your data into the Pandas format. This is an expensive operation that copies data in memory and forces eager execution. Doing this routinely undermines the performance and memory-efficiency benefits you chose Polars for in the first place! Use it only when strictly necessary.

Cloud platform

- Polars Cloud - the commercial offering from Polars Inc. extends the open-source engine with serverless execution, horizontal scaling for partitioned data, and fault tolerance. Polars Cloud lets you take local Polars queries and run them at scale without managing infrastructure, using the same open-source engine under the hood.

- Microsoft Fabric - Polars is part of the default build for Fabric Python Notebooks, providing an out-of-the-box experience for running fast analytics on the platform, with the flexibility to revert to Spark (available in Fabric PySpark Notebooks) for larger workloads.

- Azure Container Apps - enables containerised deployment of Polars workloads on Azure. These can be triggered by Data Factory, Synapse or Fabric pipelines and connect to ADLS Gen2 or OneLake storage.

Development environment

- Visual Studio Code - we love VS Code! Polars is well suited to local development and works well with VS Code features such as Dev Containers and Data Wrangler, creating a developer experience that feels like mainstream software engineering.

Getting Started with Polars

Ready to try Polars? The official documentation provides comprehensive guides, examples and an API reference.

The Road Ahead

Polars is actively developed, with a clear trajectory:

Streaming Engine: Now available, this handles datasets larger than RAM by processing in batches and spilling to disk when needed - extending Polars' reach without requiring distributed compute.

Polars Cloud: The commercial offering from Polars Inc. adds horizontal scaling, fault tolerance, and serverless execution while maintaining the same API. Write queries locally, run them at scale.

GPU Support: Integration with NVIDIA RAPIDS for GPU-accelerated processing on supported operations.

Growing Ecosystem: The plugin system allows custom extensions, and the community continues to build integrations with specialised tools.

We get a sense of an organically growing ecosystem with solid foundations - one that can scale across multiple dimensions (scale up, scale out, batch and streaming) as needs evolve.

Our Position

After eighteen months and multiple production migrations, Polars is our default choice for DataFrame-driven pipelines. We revert to Spark only when data volumes genuinely require distributed compute, which, in our experience, applies to less than 5% of use cases.

The technology has matured. The ecosystem is production-ready. The benefits compound over time as your team builds fluency with the expression API and lazy evaluation model.

Should you evaluate Polars? Consider your situation:

Strong fit:

- You're hitting performance or scaling limits with Pandas

- You're using Spark but suspect it's overkill for your data volumes

- You value developer experience and fast iteration cycles

- You want to reduce infrastructure costs and complexity

- You're building new pipelines and want to start with modern tooling

Weaker fit:

- Your existing pipelines are genuinely fast enough

- You lack test coverage to validate migration correctness

- You have deep investment in Spark-specific features (MLlib, GraphX, etc.)

- Your data volumes genuinely require distributed compute

For most data teams, we believe Polars represents a significant opportunity to simplify infrastructure, accelerate development, and reduce costs - without sacrificing capability.

If you're still defaulting to Pandas out of habit, or spinning up Spark clusters for datasets that fit in memory, it's time to reconsider.

What's Next

This is Part 1 of our Adventures in Polars series:

- Part 2: What Makes Polars So Scalable and Fast? - The technical deep-dive: lazy evaluation, query optimisation, parallelism, and the Rust foundation.

- Part 3: Code Examples for Everyday Data Tasks - Hands-on examples showing Polars in action.

- Part 4: Polars Workloads on Microsoft Fabric - Running Polars on Fabric with OneLake integration.

What's your experience with Polars? Are you evaluating it, migrating to it, or already using it in production? We'd be interested to hear - share your thoughts in the comments below.