Spark dev containers: running Spark locally

In this post, I'll show the steps required to set up a container running an instance of Spark. As I explained in the introduction, there are a couple of reasons for doing this. One is that it can speed up your inner dev loop, and the other is that it can make it more practical to run automated tests. In short, it's all about improving feedback loops, which ultimately lets us create better systems.

Note that this is just a first step. There's more work required to get tests working, and there are further steps you can take to improve the inner dev loop. I'll talk more about this later in this series.

Choose your base image

The basic idea of a dev container is to provide a precise definition of the development environment, so that all developers are working in the same world. Docker will provide that environment, so a dev container always describes the Docker image to use. There are many pre-defined images available, and some dev containers just use one of these, but I want to customize the container by installing Spark. That means I need to write my own Dockerfile.

As with any Dockerfile I'll start by choosing a base image. I'll want to use Python, so I'll pick one of Microsoft's Python dev container images.

These use Debian as the base OS, and Microsoft currently makes two sets of images available, built on two different versions of Debian: Bullseye and Bookworm. Bookworm is the newer of the two. It became the stable release in June 2023, at which point Bullseye was designated the oldstable release. Bullseye long term support (LTS) ends in August 2026, while Bookworm will remain in LTS until June 2028, so I'll go with that.

I also need to pick a version of Python. As I write this, images for up to Python 3.13 are available. I happen to have been doing some work recently where a library didn't actually work with anything after Python 3.11, so I'm slightly leery of going for the latest available version here. Then again, I was running a machine learning library on acceleration hardware, and those kinds of things tend to be very finicky about machine configuration; in all likelihood you'd be fine just going for the latest Python version. But I'll use 3.11 here.

So I have the first line of the Dockerfile:

FROM mcr.microsoft.com/devcontainers/python:3.11-bookworm

Install Java

Spark is written in Scala, which relies on the Java runtime, so I need to install Java. Recent versions of Spark support Java versions 8, 11, and 17.

According to this Red Hat article on OpenJDK Life Cycle, OpenJDK 11 went out of support at the end of October 2024. The oldest, version 8, is still in support, but that's because there's a lot of friction in moving from Java 8 to a post-8 world, and I really don't want to be starting on the wrong end of that transition. So that seems to pretty much leave version 17.

However, nothing is ever that simple in the world of open source software. You will want to take into account the Java version that will be available in whatever cloud-hosted Spark service you will use in production. Synapse offers runtime environments for Spark 3.3 and Spark 3.4. These use Java 8 and Java 11 respectively. Microsoft Fabric offers runtimes supporting Spark 3.3, 3.4, and 3.5 (through Fabric's runtime versions 1.1, 1.2, and 1.3), and these use Java 8, Java 11, and Java 11 respectively.

When I get to the part of this series where I deploy code to a hosted environment, I'll be using Microsoft Fabric's 1.3 environment, which means Spark 3.5 and Java 11. So now I have a dilemma: using a Java version that matches the one Fabric uses (Java 11) means using an unsupported OpenJDK version; using a supported OpenJDK version means either being stuck on Java 8, or using an SDK designed for a more recent Java version (17) than Fabric offers.

The compromise I've chosen is to go with OpenJDK 17. I use the same Spark distributables as I would for 11 or 8, and it should behave in the same way. If I were writing any custom Java code, this would create a risk that I might write something that relies on a new Java feature, meaning it might work locally but fail in the cloud. But I won't be writing any Java, so my view is that the risk of using Java 17 is low, and I don't want to use an unsupported SDK.

So I can add these lines to install the Java Runtime Environment (JRE) from OpenJDK 17:

RUN apt-get update && export DEBIAN_FRONTEND=noninteractive \
    && apt-get -y install --no-install-recommends \
        openjdk-17-jre \
    && apt-get autoremove -y && apt-get clean -y && rm -rf /var/lib/apt/lists/*

ENV JAVA_HOME=/usr/lib/jvm/java-17-openjdk-amd64

This assumes you're not planning to compile any of your own Java code in this container. If you want the Java compiler, you would need the JDK instead of the JRE.
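Since so much of this section hinges on which Java version ends up in the container, it can be handy to confirm it once the image is built. The following is a minimal sketch in Python rather than part of the setup itself. The helper name java_major_version is made up for this illustration, and it assumes the usual `java -version` output format, in which Java 8 reports itself as 1.8.x while later releases report 11.x, 17.x, and so on.

```python
import re
import shutil
import subprocess


def java_major_version(version_output: str) -> int:
    """Extract the major Java version from `java -version` output.

    Assumes the first quoted string is the version, e.g. "17.0.13" or
    "1.8.0_392" (Java 8 and earlier use the legacy "1.x" scheme).
    """
    match = re.search(r'"(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("could not find a version string")
    first, second = match.group(1), match.group(2)
    if first == "1" and second is not None:
        return int(second)  # legacy scheme: 1.8 means Java 8
    return int(first)


if __name__ == "__main__":
    if shutil.which("java") is None:
        print("java is not on the PATH")
    else:
        # `java -version` writes its report to stderr, not stdout.
        result = subprocess.run(
            ["java", "-version"], capture_output=True, text=True
        )
        print("Java major version:", java_major_version(result.stderr))
```

Run inside the container built from this Dockerfile, this would be expected to report 17; a different number would suggest that another Java installation is taking precedence on the PATH.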

Install Spark

Now that I've got a Java runtime, I can install Spark. I need to pick a version. Remember, the reason I'm doing this is to enable local development of code that will eventually run in a hosted Spark environment in the cloud. So I need to pick a version that matches that target cloud environment.

For Microsoft Fabric, there's a page describing the Spark runtime you get, and it depends on how you've configured Fabric. They recommend you use the latest available Fabric runtime settings, which at the time I write this means Fabric Runtime 1.3, which is based on Apache Spark 3.5.0.

So I'll add this to the Dockerfile to install Spark 3.5.0:

RUN cd /tmp && \
    wget https://archive.apache.org/dist/spark/spark-3.5.0/spark-3.5.0-bin-hadoop3.tgz && \
    cd / && \
    tar -xzf /tmp/spark-3.5.0-bin-hadoop3.tgz && \
    mv /spark-3.5.0-bin-hadoop3 /spark && \
    rm -rf /tmp/spark-3.5.0-bin-hadoop3.tgz

ENV SPARK_HOME=/spark
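If the download or the rename step goes wrong, the image can still build while SPARK_HOME points at an empty or incomplete folder. As a rough sanity check, here is a small Python sketch that looks for the launcher scripts a Spark binary distribution normally provides. The function name missing_spark_files and the particular list of scripts are choices made for this illustration, not anything Spark itself defines.

```python
import os
from pathlib import Path


def missing_spark_files(spark_home: Path) -> list[str]:
    """Return the expected launcher scripts that are absent from spark_home."""
    expected = ["bin/spark-shell", "bin/pyspark", "bin/spark-submit"]
    return [name for name in expected if not (spark_home / name).is_file()]


if __name__ == "__main__":
    # Falls back to /spark, the location used in the Dockerfile above.
    home = Path(os.environ.get("SPARK_HOME", "/spark"))
    missing = missing_spark_files(home)
    if missing:
        print(f"{home} is missing: {', '.join(missing)}")
    else:
        print(f"{home} looks like a Spark installation")
```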

Alternative option: don't install Spark

If you are using Spark purely from Python, you don't technically need to install Spark yourself. If you use Python's pip tool to install PySpark (which I'll show towards the end of this blog) it actually includes its own copy of Spark! So it's not strictly necessary to fetch and expand the Spark redistributables as I've just done.

However, there are a few reasons to install Spark yourself. You have more options on how to launch Spark, and as we will be discussing in forthcoming blogs, this can have an impact on how quickly your tests run. Also, if you want to customize your Spark setup with additional .jar files, you'll want to install Spark as I've shown here.

Trying it

It's time to test the Dockerfile. (If you've installed the Docker extension for VS Code, you could use that to perform these tests. I like to see the details of what's going on, so I'll be showing how to do this from the command line.)

Build and run

First, I need to build the image that the Dockerfile describes:

docker build . -t testspark

I've used testspark as the tag name, but it can be anything. I'm not going to push this to any container registries, so this is just a local name to refer to this image.

Next, I can run a self-destructing container. (The --rm tells it to delete itself on shutdown.) The Python devcontainer images are set up so that when you start the container, it runs Python by default. I will want to run some shell commands, so I'll tell it to launch bash instead:

docker run --rm -it testspark bash

The -it option makes this interactive: when the container starts, the bash prompt will be right there in whatever console window I ran that docker command from:

root ➜ / $

Talk to Spark

I should now be able to ask Spark to do things for me. I'll test that by connecting to it with the spark-shell command:

/spark/bin/spark-shell

Note that because I've not given this a URL, it will launch Spark automatically in local mode for me. This runs Spark in a single process. There are other ways we could do it: we could pre-launch a standalone Spark cluster and then connect to its address. There are pros and cons to each option, which we'll discuss in another blog series, but for now I'm using the simplest approach.
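The difference between those two options comes down to the master URL that Spark is given. As a sketch of how that choice might look from Python (which this post turns to shortly), the helper below builds either a local-mode URL or one for a standalone master. The names master_url and create_session, the app name, and the default host are all made up for this illustration; 7077 is the port a standalone master listens on by default.

```python
def master_url(mode: str, host: str = "localhost", port: int = 7077) -> str:
    """Build the value passed to Spark as the master URL.

    "local" runs Spark in a single JVM using all available cores;
    "standalone" points at an already-running standalone master.
    """
    if mode == "local":
        return "local[*]"
    if mode == "standalone":
        return f"spark://{host}:{port}"
    raise ValueError(f"unknown mode: {mode!r}")


def create_session(mode: str = "local"):
    """Create a SparkSession for the chosen mode (requires PySpark)."""
    # Imported here so the helper above can be used without PySpark installed.
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder
        .master(master_url(mode))
        .appName("master-url-sketch")
        .getOrCreate()
    )
```

The same URLs can be passed to the shell with the --master option, for example /spark/bin/spark-shell --master local[*], which makes explicit what the command above does by default.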

Here's what I see:

Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
24/11/29 08:16:51 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Spark context Web UI available at http://22d590831dfc:4040
Spark context available as 'sc' (master = local[*], app id = local-1733820580547).
Spark session available as 'spark'.
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 3.5.0
      /_/

Using Scala version 2.12.18 (OpenJDK 64-Bit Server VM, Java 17.0.13)
Type in expressions to have them evaluated.
Type :help for more information.

scala>

Looks good. Let's try creating a dataframe from some values, and then asking Spark to count the rows of the resulting dataframe:

scala> val values = List(1,2,3,4,5)
values: List[Int] = List(1, 2, 3, 4, 5)

scala> val dataFrame = values.toDF()
dataFrame: org.apache.spark.sql.DataFrame = [value: int]

scala> dataFrame.count()
res1: Long = 5

Looking good. But I wanted to use Python. So I'll type Ctrl+D to quit the Spark shell, and then I can try some Python.

Talk to Spark from Python

I can get a Python prompt set up for PySpark with this command:

/spark/bin/pyspark

I see this output:

Python 3.11.10 (main, Sep 27 2024, 06:09:18) [GCC 12.2.0] on linux
Type "help", "copyright", "credits" or "license" for more information.
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
24/11/29 08:31:16 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 3.5.0
      /_/

Using Python version 3.11.10 (main, Sep 27 2024 06:09:18)
Spark context Web UI available at http://826ae8b43781:4040
Spark context available as 'sc' (master = local[*], app id = local-1732869076783).
SparkSession available as 'spark'.
>>>

OK, let's try creating a dataframe:

>>> df = spark.createDataFrame([[1],[2],[3],[4],[5]])

>>> df.count()

5

>>> df.show()

+---+
| _1|
+---+
|  1|
|  2|
|  3|
|  4|
|  5|
+---+

So it looks like I can control Spark from Python. Great! Again, pressing Ctrl+D leaves this prompt.

In fact, I'm done with this test session so at the bash prompt I press Ctrl+D again. This causes the shell to exit, at which point Docker will tear down the container for me.

To save you from having to piece all the bits together, here's the whole Dockerfile:

FROM mcr.microsoft.com/devcontainers/python:3.11-bookworm

RUN apt-get update && export DEBIAN_FRONTEND=noninteractive \
    && apt-get -y install --no-install-recommends \
        openjdk-17-jre \
    && apt-get autoremove -y && apt-get clean -y && rm -rf /var/lib/apt/lists/*

ENV JAVA_HOME=/usr/lib/jvm/java-17-openjdk-amd64

RUN cd /tmp && \
    wget https://archive.apache.org/dist/spark/spark-3.5.0/spark-3.5.0-bin-hadoop3.tgz && \
    cd / && \
    tar -xzf /tmp/spark-3.5.0-bin-hadoop3.tgz && \
    mv /spark-3.5.0-bin-hadoop3 /spark && \
    rm -rf /tmp/spark-3.5.0-bin-hadoop3.tgz

ENV SPARK_HOME=/spark

Make it a dev container

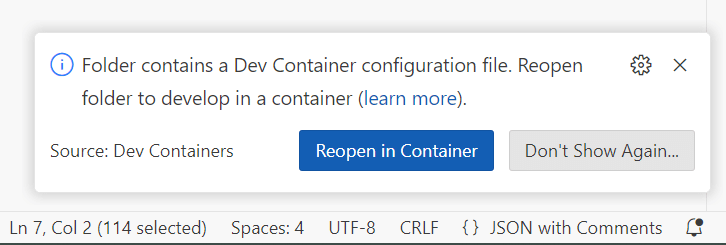

Now that I've shown that the Dockerfile works, I want to package it up as a dev container. The convention is to create a .devcontainer folder at the root of the project. This will typically be the root of some git repository, but VS Code doesn't actually require source control. You can just create a folder anywhere on your filesystem, and if that folder contains a .devcontainer subfolder with a devcontainer.json file in it, VS Code will offer to use that.

The devcontainer.json file doesn't need to be complex. I can just point it to the Dockerfile:

{
    "name": "Local Spark",
    "build": {
        "dockerfile": "Dockerfile",
        "context": ".."
    }
}

For that to work, you'll also need to move your Dockerfile from wherever you wrote it into that .devcontainer folder.

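Now if I open the parent folder (the one containing the .devcontainer subfolder) in VS Code, then after a few seconds it shows a notification offering to reopen the folder in a container (see the screenshot at the start of this section).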

If I click the "Reopen in container" button, VS Code closes and then reopens in remote mode. It starts up an instance of the container based on the Dockerfile, and then installs the VS Code remoting components in that container. Once it's ready, I can start Spark using the same commands as before. (It would be possible to put those commands into the devcontainer.json file so that it starts automatically. However, there are several different ways to launch Spark, and I'm not fixing it to just one here because we've found that for some testing scenarios, some ways work faster than others. But that's a topic for a different blog series.)

From a bash terminal in VS Code, you could repeat the earlier experiments with the Spark shell and the PySpark prompt if you want to verify that everything still works now that I'm using the dev container tools.

But what I really want to do is just write Python files as normal in VS Code. So I can create a file called try_pyspark.py:

from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

df = spark.createDataFrame([[1],[2],[3],[4],[5]])

print(df.count())

df.show()

Visual Studio Code will most likely show squiggly lines on the first line, because it can't find the pyspark library. Although that was available in the Python session I launched with the /spark/bin/pyspark command, VS Code can't see it.

But that's easily fixed by running this command:

pip install pyspark==3.5.0

After this, I can run this .py file in the usual way inside VS Code, and it talks successfully to Spark:

vscode ➜ /workspaces/SparkContainerExample $ /usr/local/bin/python /workspaces/SparkContainerExample/try_pyspark.py
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
24/11/29 09:43:04 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
5
+---+
| _1|
+---+
|  1|
|  2|
|  3|
|  4|
|  5|
+---+
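Because the pip install step is not part of the Dockerfile, it has to be repeated whenever the container is rebuilt. One possible way around that is the postCreateCommand property of devcontainer.json, which runs a command after the container has been created. The sketch below adds that property to an existing configuration from Python. The function name with_post_create is made up for this illustration, and the script assumes the file is plain JSON without comments, like the one shown earlier.

```python
import json
from pathlib import Path


def with_post_create(config: dict, command: str) -> dict:
    """Return a copy of a devcontainer.json config with postCreateCommand set."""
    updated = dict(config)
    updated["postCreateCommand"] = command
    return updated


if __name__ == "__main__":
    path = Path(".devcontainer/devcontainer.json")
    if path.is_file():
        # Assumes plain JSON: json.loads would reject a file with comments.
        config = json.loads(path.read_text())
        config = with_post_create(config, "pip install pyspark==3.5.0")
        path.write_text(json.dumps(config, indent=4) + "\n")
        print(json.dumps(config, indent=4))
    else:
        print(f"{path} not found; run this from the project root")
```

Editing the file by hand to add the same property works just as well. Adding the pip install line to the Dockerfile would be another option.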

Some of your data probably resides in files. Creating dataframes on the fly from Python lists is not how most applications use Spark, so you might also want to try this:

df = spark.read.option("header", True).csv('data.csv')

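Assuming you've got a CSV file called data.csv in the same folder as the Python file, and you run the script from that folder (the relative path is resolved against the current working directory), this should also work.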
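If you don't have a suitable file to hand, the following sketch writes a tiny one and reads it back. The column names, the sample rows, and the two function names are invented for this illustration. The inferSchema option is an addition to the one-liner above: without it, Spark treats every CSV column as a string.

```python
import csv
from pathlib import Path


def write_sample_csv(path: Path) -> None:
    """Write a small CSV file with a header row and three data rows."""
    rows = [("id", "name"), (1, "alpha"), (2, "beta"), (3, "gamma")]
    with path.open("w", newline="") as f:
        csv.writer(f).writerows(rows)


def read_with_spark(path: Path):
    """Load the CSV into a DataFrame (requires PySpark and Java)."""
    # Imported here so write_sample_csv can be used without PySpark installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.getOrCreate()
    # header=True uses the first row for column names; inferSchema=True asks
    # Spark to guess column types instead of treating everything as strings.
    return spark.read.csv(str(path), header=True, inferSchema=True)
```

Calling write_sample_csv(Path("data.csv")) and then read_with_spark(Path("data.csv")).show() inside the dev container should display the three rows under the id and name headings.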

Earlier I mentioned that PySpark brings its own copy of Spark. So if it's able to use that, is the copy we installed even doing anything? In fact, PySpark looks for the SPARK_HOME environment variable, and if that is set, it will use that Spark installation instead of its own copy. So it is using the copy I installed, even though it's capable of using its own.
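This is also why it makes sense to pin the pip package to the same version as the installed Spark. Here is a rough sketch of how the two might be compared. It relies on an assumption worth checking against your own installation: that the Spark binary distribution contains a RELEASE file whose first line begins with "Spark" followed by the version number. The function names are made up for this illustration.

```python
import os
import re
from importlib import metadata
from pathlib import Path
from typing import Optional


def spark_home_version(spark_home: Path) -> Optional[str]:
    """Read the Spark version from the RELEASE file, if there is one.

    Assumption: binary distributions ship a RELEASE file whose first line
    starts with "Spark <version>".
    """
    release = spark_home / "RELEASE"
    if not release.is_file():
        return None
    match = re.match(r"Spark (\S+)", release.read_text())
    return match.group(1) if match else None


def installed_pyspark_version() -> Optional[str]:
    """Return the pip-installed PySpark version, or None if it is absent."""
    try:
        return metadata.version("pyspark")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    home = os.environ.get("SPARK_HOME")
    if home is None:
        print("SPARK_HOME is not set; PySpark would use its bundled Spark")
    else:
        print("SPARK_HOME:", home)
        print("Spark version there:", spark_home_version(Path(home)))
    print("pip-installed PySpark:", installed_pyspark_version())
```

In the container described in this post, both versions would be expected to come out as 3.5.0.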

Conclusion

With a relatively simple Dockerfile and devcontainer.json I can get an instance of Spark running locally, and access it from Python.

In the next blog in this series, I'll show how you can write a unit test for some Spark-based code, run that test locally, and then deploy the code to a hosted Spark environment such as Azure Synapse.