How .NET 6.0 boosted Ais.Net performance by 20%

At endjin, we maintain Ais.Net, an open source high-performance library for parsing AIS messages (the radio messages that ships broadcast to report their location, speed, etc.). Naturally, when .NET 6.0 came out recently, we tested Ais.Net on it. We've made extensive use of performance-oriented techniques such as Span and System.IO.Pipelines, and we wondered whether we'd need to tweak any of this to work better on the latest runtime.

We were delighted to discover that .NET 6.0 has given us a free lunch. Without making any changes whatsoever to our code, our benchmarks improved by roughly 20% simply by running the code on .NET 6.0 instead of .NET Core 3.1. We've not had to release a new version—the existing version published on NuGet (which targets netstandard2.0 and netstandard2.1) sees these performance gains the moment you use it in a .NET 6.0 application.

We also saw a 70% reduction in memory usage! As it happens, our amortized allocation cost per record is already 0 bytes, so this was a reduction in the startup memory costs, which were also fairly low to start with, but it's all good.

Benchmark results

Our benchmarks perform two kinds of test. The first looks at the maximum possible rate of processing messages: we perform the bare minimum work that would be required when inspecting any message. This reports the upper bound on the rate at which an application can process AIS messages on one thread. The second tests a slightly more realistic workload, retrieving several properties from each message. Each of these benchmarks runs against a file containing one million AIS records.

On .NET Core 3.1, I saw the following results running the benchmarks on my desktop. They correspond to an upper bound of 3.03 million messages per second, and a processing rate of 2.60 million messages per second for the slightly more realistic example. (My desktop is about 3 years old, and it has an Intel Core i9-9900K CPU.)

| Method | Mean | Error | StdDev | Allocated |
|------------------------------------ |---------:|--------:|--------:|----------:|
| InspectMessageTypesFromNorwayFile1M | 329.2 ms | 3.16 ms | 2.80 ms | 26 KB |
| ReadPositionsFromNorwayFile1M | 384.2 ms | 3.93 ms | 3.68 ms | 25 KB |

Here are the results for the same benchmarks running on .NET 6.0:

| Method | Mean | Error | StdDev | Allocated |
|------------------------------------ |---------:|--------:|--------:|----------:|
| InspectMessageTypesFromNorwayFile1M | 262.9 ms | 3.21 ms | 2.84 ms | 5 KB |
| ReadPositionsFromNorwayFile1M | 322.8 ms | 4.50 ms | 3.99 ms | 7 KB |

This shows that on .NET 6.0, our upper bound moves up to 3.82 million messages per second, while the processing rate for the more realistic example goes up to 3.12 million messages per second. Those are improvements of 26% and 20% respectively. (The first figure sounds more impressive, obviously, but I put the 20% figure in the opening paragraph because that benchmark better represents what a real application might do.)
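For anyone who wants to redo this arithmetic, here is a minimal sketch of how a mean run time turns into a messages-per-second rate and a percentage gain. It assumes exactly one million messages per run and uses the rounded mean times from the tables above, so its output lands close to the figures quoted here but may not match them to the last digit. The names (`MEAN_MS`, `messages_per_second`, `throughput_gain_percent`) are made up for this illustration and are not part of Ais.Net or its benchmark project.

```python
# Mean run times in milliseconds, copied from the two tables above.
MEAN_MS = {
    "InspectMessageTypesFromNorwayFile1M": {"netcoreapp3.1": 329.2, "net6.0": 262.9},
    "ReadPositionsFromNorwayFile1M": {"netcoreapp3.1": 384.2, "net6.0": 322.8},
}

# Assumption: each benchmark run processes exactly one million AIS records.
MESSAGES_PER_RUN = 1_000_000


def messages_per_second(mean_ms: float) -> float:
    """Convert a mean run time in milliseconds into messages per second."""
    return MESSAGES_PER_RUN / (mean_ms / 1000.0)


def throughput_gain_percent(old_ms: float, new_ms: float) -> float:
    """Percentage increase in throughput when the run time drops from old_ms to new_ms."""
    return (old_ms / new_ms - 1.0) * 100.0


for name, ms in MEAN_MS.items():
    old, new = ms["netcoreapp3.1"], ms["net6.0"]
    print(
        f"{name}: "
        f"{messages_per_second(old) / 1e6:.2f}M -> {messages_per_second(new) / 1e6:.2f}M msg/s "
        f"({throughput_gain_percent(old, new):.0f}% faster)"
    )
```

Note that the gain is computed as a ratio of throughputs (old time divided by new time), not as the percentage drop in run time, which would give a smaller number for the same measurements.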

You can also see the significant reduction in the Allocated column. These allocation counts are measured only in kilobytes, and not megabytes, even though each benchmark processes a million messages. That's down to our amortized per-message allocation cost being 0. So this suggests that .NET 6.0 is now allocating a lot less memory when initializing the mechanisms required to run our code. Whether you'd be able to measure any practical impact on performance from that one change in a real application using this library is doubtful, but it is significant in the bigger picture. Changes like this are typical of .NET 6.0: everything is more efficient. Even if individual gains are small, they add up.
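To put the Allocated column in perspective, the following sketch spreads each run's total allocation across its messages. It assumes one million messages per run and that the benchmark tool reports 1 KB as 1024 bytes. The helper names are hypothetical, chosen only for this example.

```python
# Total managed allocation per benchmark run in KB, copied from the tables above.
ALLOCATED_KB = {
    "InspectMessageTypesFromNorwayFile1M": {"netcoreapp3.1": 26, "net6.0": 5},
    "ReadPositionsFromNorwayFile1M": {"netcoreapp3.1": 25, "net6.0": 7},
}

MESSAGES_PER_RUN = 1_000_000  # assumption: exactly one million records per run
BYTES_PER_KB = 1024           # assumption: the tool reports 1 KB as 1024 bytes


def bytes_per_message(allocated_kb: float) -> float:
    """Average allocation per message if the run's total were spread evenly."""
    return allocated_kb * BYTES_PER_KB / MESSAGES_PER_RUN


def reduction_percent(old_kb: float, new_kb: float) -> float:
    """Percentage drop in allocation going from old_kb to new_kb."""
    return (1.0 - new_kb / old_kb) * 100.0


for name, kb in ALLOCATED_KB.items():
    old, new = kb["netcoreapp3.1"], kb["net6.0"]
    print(
        f"{name}: {bytes_per_message(old):.4f} -> {bytes_per_message(new):.4f} "
        f"bytes/message ({reduction_percent(old, new):.0f}% less allocated)"
    )
```

Even on .NET Core 3.1 the average works out to a small fraction of a byte per message, which is only possible if the allocations are a fixed startup cost rather than something paid per message.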

The bottom line is that moving your application onto .NET 6.0 may well give you an instant performance boost with no real effort on your part.

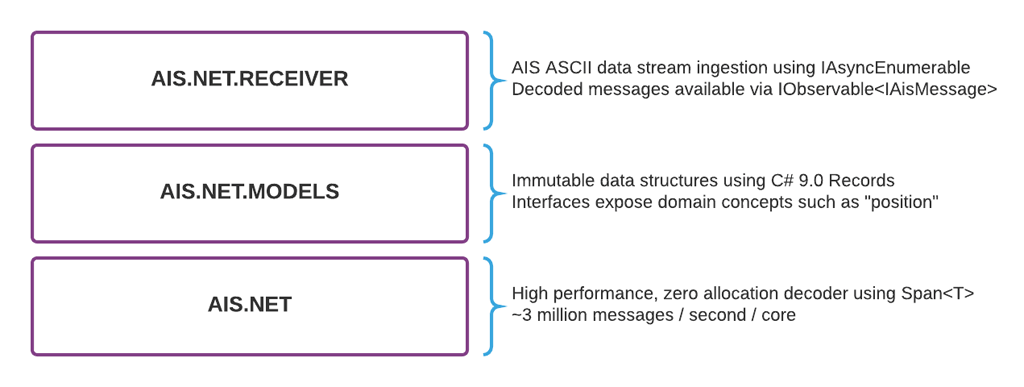

You can learn more about our Ais.Net library at the GitHub repo, http://github.com/ais-dotnet/Ais.Net/ and in the same ais-dotnet GitHub organisation you'll also find some other layers, as illustrated in this diagram:

(Note that Ais.Net.Models is currently inside the Ais.Net.Receiver repository.)