Garbage collection, a memorandum on memory in C#

Not coming from a background in computer science, the inner workings of a computer have always been somewhat of a mystery to me. This is something I've always meant to remedy but never quite gotten around to. When I transitioned from C++ to C# my main thought surrounding memory management was "well thank god I don't have to think about that anymore". However, in the last few weeks I have been really drilling down into the inner workings of the language (a big thank you to Ian Griffiths for his book Programming C# 5.0). In doing this I have realised that memory management is not only something that does require some consideration, but also something that I find quite interesting.

I've always had a vague idea what the difference between the stack and heap was, but it was good to get it fully defined:

The stack uses static memory allocation. This makes it very fast. The memory is allocated at compile time and freeing it up just involves adjusting one pointer.The stack is allocated on a LIFO (last in first out) basis. This means that it's easy to keep track of, and freeing up memory just involves adjusting one pointer.- The heap uses dynamic memory allocation. The CLR manages the heap at run-time and it is constantly changing and being updated. This means it is more complex and slower, but the size is only limited by the size of virtual memory. Keeping track of the heap can prove difficult… Enter the garbage collector.

The garbage collector

I've now built up a little mental picture of the garbage collector.

In my mind's eye this little guy runs around the computer memory, cleaning up unreachable references. The top hat I think signifying that he knows what he's doing. As you can tell, my background is also not as an artist. (I'm even more embarrassed by this now that our designer Paul has created the beautiful graphic above ^)

However, from a more technical perspective…

When a program starts, and the first memory is allocated, it is allocated as a block, with each new piece of memory adjacent to the last.

Now, at some point (and I'll discuss when later) the garbage collector is triggered. It goes through all of memory that is currently in use and checks whether it is reachable via any references. (The GC is also not tripped up by circular references - if two things reference each other they are still not necessarily reachable. I told you he knew what he was doing.) If memory is found to be unreachable, then it is no longer needed and can be reclaimed. This leads to the heap looking something like this:

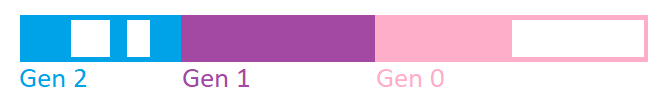

Objects that are then created are unlikely to fit exactly into the gaps left by the newly freed memory and finding appropriate blocks could be expensive. Therefore, the garbage collector needs to get the free memory back into a continuous state. However, the movement of existing memory comes with a performance cost as it must be copied as part of the relocation. To minimize this cost the heap is organised into generations. When an object is initialised, its memory is allocated, and it is a part of "generation 0". If that object then survives a first garbage collection, it moves to generation 1. If a second GC is survived then the object is moved up to the final generation, generation 2.

We can see that if an object has been around for longer, it will be more expensive to clean it up. This is because more memory will have to be moved in order to get the heap back into a continuous state.

Generations 0 and 1 are known as the ephemeral generations. They contain short lived objects, which are generally in the CPU's cache and compaction is not particularly expensive. However, compaction of generation 2 objects is often deferred as reclaiming them can be very expensive. Not only this, but objects that have been around for that long may not have been used for some time so may no longer be in the CPUs cache. This means that copying them is even more expensive. After a garbage collection, the heap will look something like this:

Do not keep objects around for longer than necessary

When a certain memory threshold is reached for each generation, a garbage collection is triggered. These thresholds are adjusted for efficiency throughout the running of a program. This means that we cannot predict when a garbage collection will happen. Therefore, if objects are kept around for a long time they could be moved up into generation 2 at any point. This could end up having a large impact on the program's performance.

Larger objects also cause the CPU to work harder. Not only do they put a strain on memory, but they cause more garbage collections to be triggered as the generational thresholds are reached more quickly. This could also have an impact on performance as garbage collection is a relatively expensive process. This is assuming they are not large enough to be stored in the "Large Object Heap". This is reserved for objects larger than 85,000 bytes. The LOH is not compacted (though adjacent empty memory is combined in an optimization process) and instead memory allocation is done based on the size of the blocks available.

Defeating the garbage collector

The garbage collector can only tell when a program can access memory, not whether it will. For example, if you add objects to collections and keep those collections reachable, even if the individual objects aren't used again (e.g. if you keep collection around just to count the number of objects), then the memory for each individual object will still not be reclaimed. This is something to keep in mind when designing a program; do you need a collection of those objects, or could you just store the count in a variable?

Pinning of objects

Sometimes memory needs to be "pinned" in place. For example, if data was being read from a disk then a reference will be passed to the disk to tell it where to write to. This memory cannot then be moved before the write has happened. To do this explicitly, the "fixed" keyword is used. However, sometimes pinning will be done for you. For example, stream often pins memory. This can be an issue if memory in the ephemeral generations is pinned and the GC needs to move memory blocks around.

There are a couple of ways to get around this. If you only pin objects that are larger than 85,000 bytes, then only objects in the LOH will be pinned. As the LOH is not compacted this will not impact your performance. However, the threshold for the LOH is somewhat arbitrary so cannot be guaranteed to remain constant. The other option is to:

Use a pool of objects allocated at the start of your program

This means that the objects will likely be generation 2, so cause fewer issues when pinned.

In conclusion...

None of these may have been massive revelations, but I feel I now have a far better understanding of how the CLR works under the hood. In understanding the fundamentals of the language, I have a better base from which to build on my knowledge. Also, it is good to keep in mind that although for the most part memory is managed for us, in certain situations (for example when designing a caching policy) some consideration needs to be given to the ways in which that management is carried out.