Benchmarking the Cloud against on-premise data centres

Barry Smart was the CTO at Hymans Robertson, the largest independent firm of consultants and actuaries in the UK, between 2012 and 2019. He was responsible for leading the firm's technology strategy, modernising the 100-year-old organisation, and transforming it through the adoption of Microsoft's Azure Cloud platform so that it could innovate faster and produce new digital products and services.

Since 2013, endjin has been helping Hymans Robertson with their Azure adoption process. As a Financial Services organisation, they are regulated by the FCA, and as early adopters of Azure, they were at the bleeding edge of storing Personally Identifiable Information (PII) in the cloud. This meant that they were ahead of the regulator, were breaking new ground, and needed an evidence-based approach to demonstrate to the regulator that they could identify and mitigate the risks of moving to the Cloud.

Since developing this approach, we (endjin) have used it with many different customers, from global enterprises to early-stage start-ups, in many different sectors. It's a useful strategic tool to help you understand the gotchas of moving to the cloud, and how you can put people, process and technical barriers in place to prevent the worst outcomes from ever happening.

In this three-part series, Barry Smart describes the risk & mitigations analysis process we came up with, and explains how you can use the same process to understand the risk of your own cloud journey.

- Part 1 - Cloud Adoption: Risks & Mitigations Analysis

- Part 2 - A Deep Dive into the Swiss Cheese Model

- Part 3 - Benchmarking the Cloud against on-premise data centres

This is the third and final post in the series about a risk-driven approach to embracing Public Cloud technology.

Part 1 described the process I adopted to engage a wide range of stakeholders to bring them on board with adopting the Cloud. I used a risk analysis technique called the Swiss Cheese Model as a structured way of exploring the risks, mapping out the key controls and consolidating our due diligence. I then used the one-page summary of this model as a key artefact when engaging with stakeholders.

In the second instalment, I walked through the Swiss Cheese Model in more detail, in the order that we constructed it: beginning with the end (undesired outcomes), then flipping to the start (threats) before completing the middle (barriers).

In this final blog post, I will describe the conclusions of the due diligence process, which aimed to benchmark the risk of our on-premise data centres against the risk of Public Cloud. It sounds daunting, but the Swiss Cheese Model I've described in my two previous blog posts made this quite a straightforward process.

Benchmarking approach

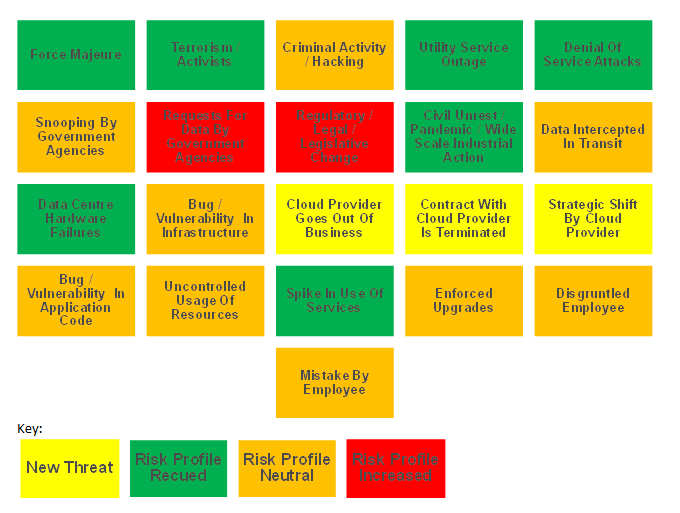

We cycled through each of the 21 threats in turn and evaluated:

- Was this a new threat specific to the Cloud? We found 3;

- Of the remaining 18 threats, we considered whether the risk profile changed between on-premise and Public Cloud by considering the nature of the threat, the barriers associated with it and how the strength of those barriers varied between our on-premise capability and the barriers put in place by the Cloud provider:

  - We identified 7 where we felt the risk profile reduced;

  - We identified 9 where the risk profile was neutral between on-premise and Cloud;

  - Finally, we found 2 where we felt the risk profile increased.
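The classification step above can be sketched as a simple comparison per threat. This is a minimal, hypothetical sketch: our assessment was qualitative, so the 1-5 residual-risk scores below are illustrative assumptions rather than figures from the due diligence, and `Threat` and `classify` are invented names. The four example threats are ones discussed later in this post, placed in the category we assigned them.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Threat:
    name: str
    # Hypothetical 1-5 residual-risk score once barriers are taken into account.
    # None means the threat does not exist on premise at all.
    on_premise: Optional[int]
    cloud: int


def classify(threat: Threat) -> str:
    """Place a threat in one of the four benchmarking categories."""
    if threat.on_premise is None:
        return "new"
    if threat.cloud < threat.on_premise:
        return "reduced"
    if threat.cloud > threat.on_premise:
        return "increased"
    return "neutral"


# Illustrative scores only; the category for each threat matches the assessment.
register = [
    Threat("Cloud provider goes out of business", None, 2),
    Threat("Breach of physical data centre security", 3, 1),
    Threat("Bug / vulnerability in application code", 3, 3),
    Threat("Requests for data by government agencies", 2, 3),
]

print(Counter(classify(t) for t in register))
```

Comparing residual risk (after barriers) rather than raw threat level is what allows a threat that grows in the Cloud to still come out neutral when the provider's barriers are correspondingly stronger.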

New threats

We identified 3 new threats that were not present with our on-premise data centres:

- Cloud provider goes out of business - the risk that the Cloud provider retires the service or is liquidated, with the resulting impact being a forced migration back on premise or to an alternative Cloud provider;

- Strategic shift by Cloud provider - we were also concerned that the Cloud provider might make a strategic shift that could impact us in some way, such as ceasing support for specific services that we chose to consume;

- Contract with Cloud provider is terminated - finally there was also a risk that the contract with the Cloud provider would be terminated. For example, through a significant hike in prices or a failure by either party to adhere to key terms and conditions in the contract.

These threats are often at the forefront of the minds of organisations considering the use of Public Cloud technology, under the general theme of "vendor lock-in". Lock-in itself is not unique to Public Cloud (it applies to any critical technology supplier), but these threats need to be considered carefully. One common approach is to hedge this risk by engaging with multiple Cloud providers. However, this adds complexity and cost, and it limits the options to adopt PaaS or SaaS services, which tend to be specific to a Cloud provider.

Areas where the risk profile reduces

We identified 7 threats which presented a reduced risk profile, given the "industrial grade" capabilities that Cloud providers were able to put in place based on the sheer scale of their operations. Good examples of this included the level of physical security around Public Cloud facilities and the level of redundancy put in place, such as dual connections to the power grid. These were capabilities that we could only dream of having in our on-premise data centres!

Areas where the risk profile is neutral

For 9 of the threats, we felt that the risk profile was neutral. In some cases, whilst we determined that the threat increased with Public Cloud, we also recognised that the greater strength of the barriers adopted by the Cloud provider compensated for this, neutralising the increased threat. For example, the threat of criminal activity / hacking is higher given the multi-tenanted nature of the Public Cloud; however, Cloud providers invest significant effort in mitigating this threat, such as sophisticated tools to defend against DDoS attacks and strict logical segregation between tenant subscriptions.

In other cases we felt that the threat was neutral because it was not dependent on the underlying infrastructure - for example, the risk of a bug / vulnerability in application code is the same whether you deploy on premise or onto a Public Cloud platform.

Areas where the risk profile increases

Finally there were a small number of threats where we felt there was an increase in risk:

- Requests for data by government agencies - at the time of completing this due diligence we were at the height of the Snowden scandal, and there was also a case in the USA where the Department of Justice was requesting access to data held in Microsoft's Dublin data centre;

- Regulatory / legal / legislative change - we were also concerned about future regulatory change that could render the use of Public Cloud technology untenable. At the time of completing this assessment, we were concerned specifically about the new General Data Protection Regulation (GDPR), which was due to come into effect in 2018.

I am glad to note that the profile of both threats above is generally reducing, for example:

- The test case concerned with access to personal data in Microsoft's Dublin data centre seems to be reaching a favourable outcome: reference BBC article "Major win for Microsoft in 'free for all' data case";

- There has been a cautious shift from regulators such as the FCA towards supporting the adoption of Cloud technology.

However, uncertainty still surrounds both areas, in particular given the shifting political landscape in both the UK and USA.

Summary Of Risk Assessment

The results are summarised in the diagram below.

Conclusions

Our overall conclusion was that, on balance, the risk profile associated with Public Cloud was reduced in comparison with our on premise data centres.

When we took this risk-based view and combined it with the wider benefits of our PaaS-focused strategy, the business case for adopting Public Cloud was compelling: the risks were lower and the commercial benefits offered by PaaS were highly attractive.

What's next?

We've been using our Swiss Cheese analysis as a means of taking our clients through the risks associated with adoption of Public Cloud, and have been successful in helping them to become comfortable with adopting this technology. A key trend I have noticed is that we are increasingly not the first to introduce our clients to Public Cloud technology: many have their own programmes well under way or have adopted Cloud-hosted SaaS from other parties.

We are keen to take the Swiss Cheese Model approach further. The hypothesis behind applying this model to health and safety is that major catastrophic accidents only occur when multiple barriers fail, enabling a trajectory from the threat, through the holes in the barriers, to the undesired outcome.

On this basis, the principle is that major catastrophic events can be prevented by putting in place leading indicators, which are used to constantly monitor the health of each barrier and proactively maintain a minimum level of performance that will prevent holes developing.

Where the barriers are entirely under the ownership of the Cloud provider, this will be difficult to do. For example, we would not be in a position to proactively monitor the health of the physical perimeter security around the Cloud provider's data centre; we need to trust them to put sufficient monitoring in place. However, where the barrier is the responsibility of the tenant, it is good practice to establish leading indicators.
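For tenant-owned barriers, the leading-indicator idea can be sketched in a few lines. This is a minimal, hypothetical sketch: `Barrier`, the 0.0-1.0 health scale, the minimum thresholds and the barrier names are all illustrative assumptions, not part of our analysis. It encodes the Swiss Cheese hypothesis that the undesired outcome is only reached when every barrier on the path has a hole, and flags individual barriers as soon as their indicator drops below its minimum.

```python
from dataclasses import dataclass


@dataclass
class Barrier:
    name: str
    # Hypothetical leading indicator scaled 0.0-1.0 (e.g. share of builds passing tests).
    health: float
    # Hypothetical minimum acceptable level for this indicator.
    minimum: float

    def has_hole(self) -> bool:
        """Treat the barrier as holed once its indicator falls below the minimum."""
        return self.health < self.minimum


def trajectory_exists(barriers: list[Barrier]) -> bool:
    """The threat reaches the undesired outcome only if every barrier has a hole.

    An empty list also returns True: with no barriers, nothing stops the threat.
    """
    return all(b.has_hole() for b in barriers)


def barriers_needing_attention(barriers: list[Barrier]) -> list[str]:
    """Leading-indicator check: flag holed barriers before the holes line up."""
    return [b.name for b in barriers if b.has_hole()]


# Hypothetical tenant-owned barriers against a bug / vulnerability in application code.
barriers = [
    Barrier("Peer code review", health=0.95, minimum=0.9),
    Barrier("Automated test coverage", health=0.7, minimum=0.8),
    Barrier("Penetration testing", health=1.0, minimum=1.0),
]

print(trajectory_exists(barriers))
print(barriers_needing_attention(barriers))
```

Here no trajectory exists yet, but the automated test coverage barrier is already flagged, which is exactly the point of a leading indicator: acting on one hole long before all of them line up.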

We also note that the Cloud providers are tuned in to this requirement. After all, they have a vested interest in helping tenants to have a strong handle on the risks that they are managing. Solutions such as Microsoft's Security Centre on Azure are a big step in this direction.

If you enjoyed this series on Cloud Adoption, you may like to download the accompanying free poster, check out our Cloud Platform Comparison (AWS vs Azure vs Google Cloud Platform) poster and blog series, or sign up to Azure Weekly, our free weekly newsletter round up of all things Azure.