Spinning up 16,000 A1 Virtual Machines on Azure Batch

Big Compute, like Big Data, has a different meaning for every organisation. For Big Data, this tends to be the point at which data can no longer be stored, queried, backed up, restored or processed easily on traditional database architectures. For Big Compute, it tends to be the point at which computation becomes so complex that it can no longer run on a non-distributed architecture within the organisation's pre-defined Service Level Agreement (SLA).

We've had a number of conversations over the past 5 years with organisations that have scaled their compute-intensive workloads (SAS, SQL Server, financial models, image processing) to the biggest box, with the highest number of cores and the largest amount of memory that their hardware vendor can supply, yet their application still creaks and slowly creeps towards exceeding their SLA. These organisations would like to move to the cloud in the hope that Microsoft can provide "a bigger box". This strategy will not work, as Microsoft only has access to the same vendor hardware as the customer does.

The secret is to re-architect your computational workload so that execution can be distributed across many different machines. The old saying "the first step is always the hardest" applies here too: redesigning your application to scale from 1 machine to 2 requires a fundamental shift in architecture and a large engineering effort to implement it. Once you have worked out how to scale to 2 machines, the effort required to scale to 3, 10 or 100 is small in comparison, and you should be able to scale out until you hit a hard limit (available network bandwidth and storage throughput being the two most common constraints). At that point, you may need further architectural adjustments to compensate.

Building distributed architectures is hard; it requires a root and branch understanding of your application (I hate to use the term "full stack", but this is probably one of the only real use cases for that term), from the shape of your data and the algorithms you use to process it, to network and storage I/O considerations and the user experience you are delivering. You also need to remember that, in Big Compute, time is money.

The success of the Big Data revolution can be directly attributed to Hadoop; the prescriptive MapReduce architecture forced people to think about their data in a different way from the traditional relational database mind-set. The constraints in Hadoop enabled faster distributed processing and new insights that were previously out of reach of traditional data processing architectures.

Azure Batch is to Big Compute, as Hadoop is to Big Data.

Since 2010 we've helped a number of organisations use Azure Cloud Services to build distributed computational grids for financial modelling, image processing and data processing. These architectures have been complex, multi-year endeavours. Azure Batch significantly lowers the barrier to entry, with a prescriptive, easy-to-follow architecture that allows you to distribute and schedule your computational workload.

One of the biggest challenges with adopting Azure is understanding your computational workload at sufficient granularity to choose the service best suited to host it.

- Azure Kubernetes Service (AKS) is perfect for hosting containerised applications that can be orchestrated via Kubernetes.

- Azure Compute Fleet allows the rapid provisioning of virtual machines, taking advantage of low (spot) pricing.

- Container Instances allows you to create containers on demand, without having to manage the underlying infrastructure.

- HDInsight (Hadoop) is perfect if your data processing algorithm can be expressed via one of the Hadoop query languages (Pig / Hive).

- Service Fabric excels at reliably hosting complex distributed services via its actor model.

- Batch is best suited to processing highly parallelizable algorithms, where a single "job" can be broken down into a number of tasks, each of which operates on a subset of data. This subset of data is distributed, along with the code to run the algorithm, to a Task Virtual Machine (TVM). When all tasks have completed, the individual results can, if necessary, be combined in a secondary step, but this scenario is not (yet) supported out of the box and requires some custom coding.
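The job, task and merge pattern described for Batch can be sketched locally in a few lines of Python. This is only an illustration of the shape of the workload, not the Batch API: the thread pool stands in for a pool of TVMs, and `split_into_tasks`, `run_task` and `run_job` are hypothetical names invented for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_tasks(data, task_count):
    """Divide the job's input into task_count subsets, one per task."""
    return [data[i::task_count] for i in range(task_count)]


def run_task(subset):
    """Hypothetical per-task algorithm: a sum of squares over the subset."""
    return sum(x * x for x in subset)


def run_job(data, task_count):
    """Run every task in parallel, then combine the results in a second step."""
    subsets = split_into_tasks(data, task_count)
    # Each worker thread stands in for one TVM in this local sketch.
    with ThreadPoolExecutor(max_workers=task_count) as pool:
        partial_results = list(pool.map(run_task, subsets))
    # The merge step is the custom code you would have to write yourself.
    return sum(partial_results)


total = run_job(list(range(1000)), task_count=10)
print(total)
```

The important property is that `run_task` only ever sees its own subset, so tasks never need to talk to each other; only the final merge touches every result.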

A great example of a parallelizable algorithm is running economic simulations in financial models; rather than running 1000 scenarios in serial, these scenarios can be divided up into 1000 tasks in Batch, which can be distributed across 1000 TVMs. This gives you a faster result for the same overall number of compute core hours (not including the time it takes to grow and shrink the TVM Pool).
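The trade-off can be made concrete with some simple arithmetic. The sketch below assumes single-core TVMs and a run time of half an hour per scenario; that figure is an assumption chosen for illustration, not a measurement. Like the paragraph above, it ignores the time taken to grow and shrink the pool.

```python
def serial_vs_parallel(scenario_count, hours_per_scenario, tvm_count):
    """Return (core_hours, serial_wall_hours, parallel_wall_hours).

    Assumes single-core TVMs and scenarios of equal length.
    """
    core_hours = scenario_count * hours_per_scenario
    serial_wall_hours = core_hours
    # Ceiling division: each TVM works through its share back to back.
    rounds = -(-scenario_count // tvm_count)
    parallel_wall_hours = rounds * hours_per_scenario
    return core_hours, serial_wall_hours, parallel_wall_hours


# 1000 scenarios at an assumed 0.5 hours each, spread across 1000 TVMs.
core_hours, serial, parallel = serial_vs_parallel(1000, 0.5, 1000)
print(f"core hours: {core_hours}, serial: {serial} h, parallel: {parallel} h")
```

Under these assumptions the work still consumes 500 core hours, but the wall-clock time drops from 500 hours to half an hour.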

Batch reached General Availability in 2015, and has a proven track record; it powers Azure Media Services audio & video encoding and media indexing. Media Services submits encoding tasks to Batch and takes advantage of Batch's auto-scaling rules to use virtual machines efficiently and to reduce idle capacity. Batch is also used by the wider Azure Team to run scale and availability tests against the underlying Azure Fabric.

If you combine Batch with Data Factory and HDInsight for automating input data production and Power BI for visualising results, you have an incredibly powerful end-to-end solution.

Earlier this year, as part of a proof of concept, endjin created a framework for performing scale, soak and performance tests against Microsoft Azure Batch Service. In this experiment we created a scale test to spin up a pool of 16,000 A1 (1 core, 1.75 GB RAM, 2x 500 IOPS) Task Virtual Machines.

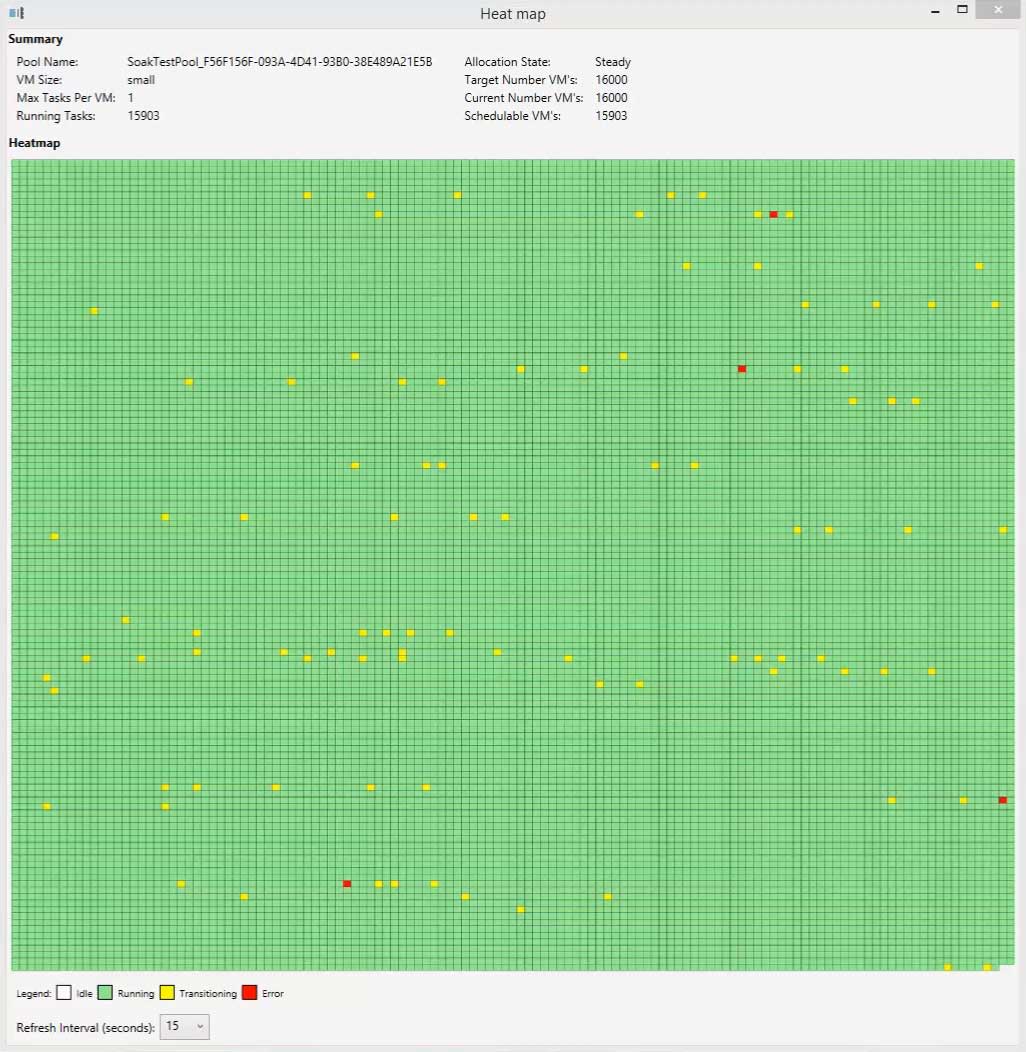

Microsoft provide, as part of their extensive code samples, a neat tool called Batch Explorer, which allows you to interact with the Batch Service to create, delete and monitor jobs. One of its nicest features is the heat map which allows you to visualize all of the running TVMs and their current state:

In the image above:

- Yellow blocks signify TVMs that are starting up (or shutting down) - they cannot do work in this state.

- White blocks indicate the TVM is idle and ready to accept work.

- Green blocks indicate the TVM is running a workload.

- Red blocks indicate the TVM is in a faulted state. The Azure Batch Fabric will try to heal these TVMs automatically.

When you are operating at this scale, errors are a certainty (a 1% error rate equates to 160 TVMs). Luckily, most errors are transitory in nature and the underlying retry logic will compensate (in the video below, you will see a number of nodes change from red to yellow and green as Batch compensates).
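To illustrate why transitory errors are tolerable, here is a generic retry loop. It is a sketch of the general technique only, not of how Batch implements retries internally; `TransientError`, `run_with_retries` and `make_flaky_task` are hypothetical names made up for this example.

```python
class TransientError(Exception):
    """Hypothetical error type standing in for a temporary node fault."""


def run_with_retries(task, max_attempts=3):
    """Call task() until it succeeds or max_attempts is exhausted."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except TransientError:
            if attempt == max_attempts:
                raise  # Give up: the fault was not transitory after all.


def make_flaky_task(failures_before_success):
    """Build a task that fails a fixed number of times, then succeeds."""
    remaining = [failures_before_success]

    def task():
        if remaining[0] > 0:
            remaining[0] -= 1
            raise TransientError("node temporarily unavailable")
        return "ok"

    return task


# At a 1% error rate, a 16,000 TVM pool should expect about this many faults.
expected_faulted = round(16_000 * 0.01)
result = run_with_retries(make_flaky_task(2), max_attempts=3)
print(expected_faulted, result)
```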

The experiment took 45 minutes; the video below has been compressed to 30 seconds. The experiment consumed 12,000 compute core hours at a cost of £660.00.
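These figures can be cross-checked with a few lines of arithmetic. The per-core-hour rate computed here is simply what the numbers above imply; it is not a published Azure price.

```python
tvm_count = 16_000        # A1 TVMs in the pool
cores_per_tvm = 1         # an A1 instance has a single core
duration_hours = 45 / 60  # the experiment ran for 45 minutes
total_cost_gbp = 660.00

core_hours = tvm_count * cores_per_tvm * duration_hours
# Implied rate, derived from the figures in this post.
cost_per_core_hour = total_cost_gbp / core_hours

print(f"{core_hours:,.0f} core hours at £{cost_per_core_hour:.3f} per core hour")
```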

In all, we conducted exhaustive tests on Azure Batch for a variety of real-world business scenarios, consuming over 1,000,000 compute core hours.

The confidence we gained from running these experiments led us to create a large-scale image matching solution on Azure Batch, which could perform 4.25 billion comparisons an hour with a relatively small pool of TVMs.

At Microsoft Build 2017, the Azure Batch Team invited Milliman to be part of their talk "Hollywood blockbusters to financial modelling - Using Azure Batch for large-scale Compute":

If you would like more information about Azure Batch, our Azure Batch Evaluation Framework, or our Azure Batch Evaluation Whitepaper which describes the risks and benefits of adopting Batch, please get in contact.

Keep up to date on developments in the Azure ecosystem by signing up for our free Azure Weekly newsletter; you'll receive a summary of the week's Azure-related news direct to your inbox every Sunday. You can also follow @azureweekly.endj.in on Bluesky for updates throughout the week.