Azure data services part 1: HDInsight

Last autumn, Richard Kerslake and I were lucky enough to land in the warmth of Barcelona for a Microsoft Analytics training event.

The sessions gave an introduction to Azure's HDInsight, Stream Analytics and Machine Learning services. I'm going to write up a quick summary of what I learned about each service, starting with HDInsight.

What it's for:

HDInsight is an Azure implementation of Hadoop. Hadoop is a big data framework which came to public notice as a technology used by search engine providers such as Yahoo and Google to manage their burgeoning data*.

The purpose of Hadoop is to let you process and analyse big data efficiently. It does this by distributing the data, carrying out processing where the data is, in parallel, then aggregating the results, rather than fetching data back to a central location for processing.
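
As a rough illustration of that idea (not Hadoop code, just a single-process Python sketch), the example below counts log levels separately within each partition and then merges the partial results. The `partitions` data and the function names are made up for the example; on a real cluster each partition would be processed in parallel on the node that holds it.

```python
from collections import Counter
from functools import reduce

# Hypothetical data, already split into blocks as if spread across three worker nodes.
partitions = [
    ["error disk full", "info job started"],
    ["error network down", "info job finished"],
    ["warn retrying", "error disk full"],
]


def map_partition(lines):
    """Process one partition 'where the data is': count the log level of each line."""
    return Counter(line.split()[0] for line in lines)


def merge_counts(left, right):
    """Aggregation step: combine two partial results into one."""
    return left + right


# Here the partitions are processed one after another; Hadoop would run these in parallel.
partial_counts = [map_partition(p) for p in partitions]

# Only the small partial results are brought together, not the raw data.
totals = reduce(merge_counts, partial_counts, Counter())
print(dict(totals))
```

The point of the design is that only the small per-partition counts travel across the network, never the raw log lines.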

Use cases include large-scale data processing of any kind, particularly when huge amounts of varied data are coming in quickly and the queries are complex. In other words, cases where the volume, velocity, or variety ('the 3 Vs') of the data makes processing and analysing it with SQL and a traditional RDBMS slow and inefficient.

For example, Hadoop could be used for the analysis of financial data, click stream data, server log data, social media data, and scientific data, or to manage the data for a very large-scale search or messaging service (such as Facebook messaging).

Microsoft have partnered with Hadoop distributor Hortonworks to provide HDInsight.

The core components of Hadoop are the Hadoop Distributed File System (HDFS), the resource coordinator YARN ('Yet Another Resource Negotiator'), and MapReduce, the system which carries out parallel processing of the data. Alongside these is a zoo of languages and components that make working with Hadoop easier, such as tools for mapping Hadoop data to a virtual table storage system (HBase), and tools for querying in Hadoop without writing MapReduce jobs by hand (Hive, Pig, and others). Three hands went up in a crowded room when we were asked who actually wrote raw MapReduce jobs.

What it consists of:

HDInsight comes in three flavours: Hadoop, HBase and Storm.

Apache HBase is a tabular NoSQL database that runs on top of HDFS, providing random, real-time access to the data stored there. Apache Storm is a stream analytics platform for processing real-time events (e.g. sensor data).

Sticking with the plainest version, a Hadoop HDInsight instance makes use of the following Azure components:

- A set of nodes, divided into head nodes and worker nodes. HDInsight clusters can be deployed on Windows or Linux.

- An Azure storage account used for HDFS. The Azure implementation of HDFS is backed by Blob storage.
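
To make the Blob storage point concrete: files in the cluster's storage are addressed with a `wasb://` (or `wasbs://` over TLS) URI that names the container and storage account. The helper below is only a sketch of that URI layout; the function name and the container, account and path values are hypothetical.

```python
def wasb_uri(container, account, path, secure=True):
    """Build the URI for a file held in Azure Blob storage, as seen from the cluster.

    The container, account and path values are supplied by the caller;
    nothing here contacts Azure.
    """
    scheme = "wasbs" if secure else "wasb"
    return f"{scheme}://{container}@{account}.blob.core.windows.net/{path.lstrip('/')}"


# Hypothetical names, for illustration only.
print(wasb_uri("mycontainer", "mystorageaccount", "/example/data/sample.log"))
```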

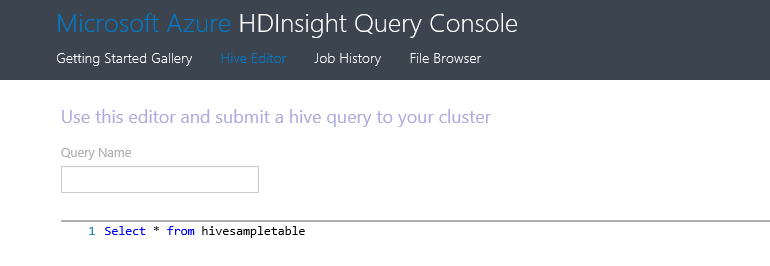

You can interact with an HDInsight cluster using Azure PowerShell, by remoting into the cluster, through an application, or through the Azure portal, which gives you access to a query editor for the Hive query language.

How you get data into it and out of it:

Data can be imported from storage endpoints such as Azure Blob Storage, Azure Table Storage and Azure SQL Database, or from other Azure services such as Stream Analytics.

The results of HDInsight processing can be saved to any of these endpoints, or consumed via the Power Query Excel add-in or Power BI.

How it's charged:

The main benefit of a managed cloud implementation of Hadoop such as HDInsight over an on-premises Hadoop installation is the time and money saved on hardware and maintenance. Billing starts once a cluster is created and stops when the cluster is deleted, and is charged per node per hour.
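
Because billing is per node per hour, deleting a cluster when it is not in use makes a big difference to the bill. The arithmetic below is a sketch only: the node count and the hourly rate are invented numbers, not Azure prices, and it assumes every node is charged at the same rate.

```python
def cluster_cost(node_count, hourly_rate_per_node, hours_running):
    """Cost of a cluster billed per node per hour, from creation to deletion."""
    return node_count * hourly_rate_per_node * hours_running


# Hypothetical figures: 4 nodes at 0.50 (in any currency) per node per hour.
NODES = 4
RATE = 0.50

# Cluster left running for a 30-day month.
always_on = cluster_cost(NODES, RATE, 24 * 30)

# Cluster created for a 2-hour job each day, then deleted.
on_demand = cluster_cost(NODES, RATE, 2 * 30)

print(always_on, on_demand)  # 1440.0 120.0
```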

Resources:

* Somewhat glossing over the history of Hadoop and the involvement of these two organisations! Derrick Harris's article 'The history of Hadoop' provides an interesting description of Hadoop's creation.

Next up, Stream Analytics.
