Azure data services part 2: Stream Analytics

This post is part of a series where I'm writing up my notes from a training session on Azure's data services. The previous post dealt with Azure's Hadoop implementation, HDInsight. This week, I'm going to write about Stream Analytics.

What it's for:

Stream Analytics is an Azure service for real-time event processing.

Use cases include real-time processing of sensor data (the archetypal example used in training sessions is a traffic management system), financial data (for example, for fraud detection), server logs, clickstream data, and social media activity.

What it consists of:

The Stream Analytics service consists of:

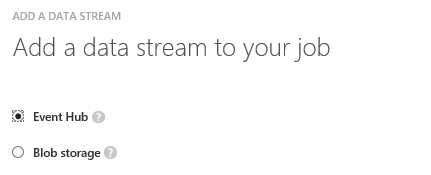

- Event Hubs, used to ingest data from producers (applications or devices)

- Stream Analytics jobs, which specify the query to be used on the ingested data. Queries are written using a SQL variant (Stream Analytics Query Language) which lets you specify time-based constraints

- A storage account used to store the monitoring data for Stream Analytics jobs

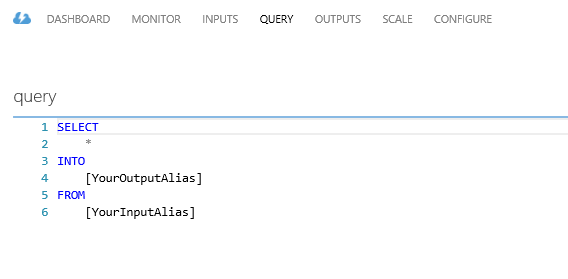

Queries are constructed using the Query Editor built into the Azure portal.

The Stream Analytics Query Editor.
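To give a feel for what a time-based constraint means, here is a minimal Python sketch of a fixed, non-overlapping ("tumbling") window count, the kind of aggregate a windowed query might compute. It is not the Stream Analytics Query Language itself, and it is not how the service is implemented; the function name, the event shape (seconds, key) and the junction names are all made up for illustration.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, key) over fixed, non-overlapping windows.

    `events` is an iterable of (event_time_in_seconds, key) pairs.
    This shape is an assumption for the sketch, not an Azure API.
    """
    counts = Counter()
    for event_time, key in events:
        # Round the event time down to the start of the window it falls in.
        window_start = (event_time // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


# Hypothetical traffic-sensor events: (seconds since start, junction).
events = [
    (1, "junction-a"),
    (4, "junction-a"),
    (9, "junction-b"),
    (12, "junction-a"),
    (19, "junction-b"),
    (21, "junction-a"),
]

result = tumbling_window_counts(events, window_seconds=10)
for (window_start, junction), count in sorted(result.items()):
    print(window_start, junction, count)
```

Each event lands in exactly one window, so the counts for a window can be emitted as soon as that window closes, which is what makes this style of query suitable for a live stream.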

How you get data into and out of it:

Live data is ingested through an Azure Event Hub. This can be augmented with slower-moving reference data from storage. Alternatively, blob storage can be used as a data stream.
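As a rough illustration of augmenting a live stream with reference data, the sketch below joins each incoming event to a slower-moving lookup table. It is plain Python under my own assumptions: the field names (`sensor_id`, `speed_kmh`, `road`) and the choice to drop unmatched events are hypothetical, and nothing here calls an Azure API.

```python
def enrich(events, reference):
    """Attach reference attributes to each event, matching on sensor_id.

    Events with no matching reference row are dropped (inner-join style);
    that is a choice made for this sketch, not a statement about the service.
    """
    for event in events:
        extra = reference.get(event["sensor_id"])
        if extra is not None:
            # Merge the event with its reference row into a new dict.
            yield {**event, **extra}


# Slower-moving reference data, e.g. loaded periodically from storage.
reference_data = {
    "s1": {"road": "A40"},
    "s2": {"road": "M4"},
}

# Fast-moving events, e.g. arriving from an event hub.
stream = [
    {"sensor_id": "s1", "speed_kmh": 52},
    {"sensor_id": "s3", "speed_kmh": 47},  # no reference row for s3
    {"sensor_id": "s2", "speed_kmh": 88},
]

enriched = list(enrich(stream, reference_data))
for row in enriched:
    print(row)
```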

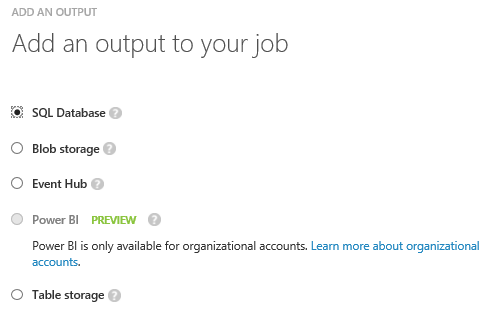

The results of a Stream Analytics job can be saved to storage - Azure Blob Storage, Azure Table Storage or Azure SQL Database - or passed on to an Event Hub for further processing by the service. They can also be exported to Power BI, if you have an organisational account.

How it's charged:

The service is charged by volume of data processed by the streaming job and the number of streaming units required to process it.
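The two charging dimensions can be put together in a back-of-the-envelope estimate like the one below. This is only a sketch: the function and parameter names are mine, the rates are placeholders rather than real Azure prices, and billing streaming units per hour is an assumption, so check the current pricing page before relying on any figure.

```python
def estimate_cost(gb_processed, streaming_units, hours,
                  price_per_gb, price_per_unit_hour):
    """Estimate a job's cost from data volume and streaming units.

    All rates are supplied by the caller; none are real Azure prices.
    Billing streaming units by the hour is an assumption of this sketch.
    """
    volume_cost = gb_processed * price_per_gb
    compute_cost = streaming_units * hours * price_per_unit_hour
    return volume_cost + compute_cost


# Placeholder rates, chosen only to make the arithmetic easy to follow.
cost = estimate_cost(
    gb_processed=10,
    streaming_units=3,
    hours=5,
    price_per_gb=1.0,
    price_per_unit_hour=2.0,
)
print(cost)
```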

Resources:

Sign up to Azure Weekly to receive Azure-related news and articles direct to your inbox, or follow @azureweekly on Twitter.