Year 1 as an endjin software engineering apprentice

Year one of my apprenticeship with endjin has gone incredibly quickly.

I came to endjin having completed the taught modules of a Computer Science conversion MSc (the project was completed this year).

When I look back on what I've learnt, it's not just technical facts, but also the skills to deal with common issues, a set of working practices, and an understanding of the tools we use.

Here's a quick run-down of the main things I've learnt over the past year, with the help of my patient and helpful colleagues.

The software engineer's toolbox

Before starting at endjin, my idea of the tools you needed to write and publish software consisted of: an IDE like Visual Studio, source control like TFS, and a web server.

In my first week here, I was introduced to the idea of a Continuous Integration Server (CIS), and why you might need one.

A CIS lets you fully or partially automate processes such as building (compiling) the codebase to check that it builds somewhere other than your own machine, running tests, generating packages, and deploying.

It integrates with other systems such as your source control, hosting platform, and even bug tracking system, and can react to events such as a push to a Git repo. We use the TeamCity CIS. The advantages of using a CIS over following these processes manually are the time and effort saved, and consistency, since we humans are very good at doing something a little bit differently each time.
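At its core, a CIS runs a fixed sequence of steps and stops at the first one that fails. The Python sketch below shows just that idea. It is not TeamCity's configuration format. The `Step` class, `run_pipeline` and the placeholder commands are all hypothetical.

```python
from __future__ import annotations

import subprocess
import sys
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    command: list[str]


def run_pipeline(steps: list[Step]) -> str | None:
    """Run steps in order, stopping at the first failure.

    Returns the name of the failed step, or None if every step succeeded.
    """
    for step in steps:
        print(f"==> {step.name}")
        result = subprocess.run(step.command)
        if result.returncode != 0:
            print(f"FAILED: {step.name} (exit code {result.returncode})")
            return step.name
    print("All steps passed")
    return None


if __name__ == "__main__":
    # Hypothetical placeholder commands; a real pipeline would call the
    # compiler, test runner, packager and deployment tools instead.
    py = sys.executable
    run_pipeline([
        Step("build", [py, "-c", "print('compiling...')"]),
        Step("test", [py, "-c", "print('running tests...')"]),
        Step("package", [py, "-c", "print('creating package...')"]),
    ])
```

Because every commit goes through the same steps in the same order, the consistency benefit described above comes for free.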

I spent some of the first part of the year getting familiar with the source control system Git and its support for frequent branching and merging, and wrote a series of blog posts on the topic:

- Using Git for .NET development – what's Git?

- Basic operations and tools

- Branching and pushing your changes

- Resolving merge conflicts

We use Git clients such as SmartGit and the Visual Studio Git tools, along with the merge tool SemanticMerge. We follow the GitFlow workflow, which defines a standard set of branches and rules for moving code between them.
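The core GitFlow rules fit in a small lookup table. This Python sketch encodes the branch types from the original GitFlow model: long-lived `master` and `develop` branches, plus `feature/`, `release/` and `hotfix/` supporting branches. Teams often rename these, so treat the prefixes as common defaults rather than a requirement. `gitflow_route` is a hypothetical helper.

```python
from __future__ import annotations

# GitFlow supporting branches: prefix -> (branch it starts from, branches it merges into).
# master holds released code; develop collects finished work for the next release.
GITFLOW_RULES: dict[str, tuple[str, list[str]]] = {
    "feature/": ("develop", ["develop"]),
    "release/": ("develop", ["master", "develop"]),
    "hotfix/": ("master", ["master", "develop"]),
}


def gitflow_route(branch: str) -> tuple[str, list[str]]:
    """Return (base branch, merge targets) for a GitFlow supporting branch."""
    for prefix, route in GITFLOW_RULES.items():
        if branch.startswith(prefix):
            return route
    raise ValueError(f"{branch!r} is not a feature/, release/ or hotfix/ branch")


if __name__ == "__main__":
    for name in ["feature/job-search", "release/1.2", "hotfix/login-crash"]:
        base, targets = gitflow_route(name)
        print(f"{name}: branch from {base}, merge into {' and '.join(targets)}")
```

Release and hotfix branches merge into both `master` and `develop`. This ensures a fix made for production isn't lost from the next release.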

This year has also involved getting very familiar with Visual Studio and learning the basic ReSharper commands that speed up routine tasks, in particular: extracting a method from a block of code; generating method stubs; generating classes and then moving them to their own files; navigating around the solution to types, usages and errors; and initialising a field through the constructor for Dependency Injection.

I've also used ReSharper's more advanced refactoring features, such as structural search and replace.

Some other tools in the toolbox:

- A diff tool (Beyond Compare)

- A nippy text editor (Sublime Text)

- An HTTP debugging tool which lets you see HTTP traffic to & from your machine (Fiddler)

- A REST API development tool (Postman)

- A data store management tool (Azure Management Studio)

The Agile methodology – organising work into short bursts with a usable outcome

endjin follows the Agile methodology, which means that we deliver projects iteratively and incrementally, building a usable version of the product, getting feedback, and repeating.

More than anything, we focus on establishing and delivering business value and a good end user experience.

We begin projects by working out user stories and mapping these onto features and individual tasks. Each of these is associated with a work item in our tracking system, YouTrack, and is (normally) worked on in its own Git feature branch.

I've worked with endjin's other developers to group work items into sprints - short bursts of work resulting in a usable outcome that can be demonstrated to the product owner to get their feedback, which informs later work. Working with other developers on a sprint is enjoyable, and a very different experience to working on a university project on your own.

The well-defined goals and short time-scale really focus your mind, and pair programming, which is encouraged under Agile, saves time by having another pair of eyes to pick up on simple issues, find solutions, and avoid pitfalls.

Accurate estimation is the hardest part of this process - you must factor in setting up your environment, and dealing with the environment or system issues that crop up unexpectedly from time to time.

Basic software architecture and best practices

Working on large codebases has helped me learn about the specialised uses of classes in a large .NET application, such as MVC controllers and models, domain objects, services, mappers, custom attributes, and library classes mapping to external service components (e.g. an Azure table). I've also learnt best practices for structuring a large application, such as moving functionality that isn't a core part of a web application out into an API layer.

Architecture is something I hope to focus on more in year 2.

Properly understanding how to use programming constructs such as lambda expressions and Generics, which are used very widely, featured heavily in the first part of the year.
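Here is a small reminder of why these two features pair so well, using a generic `group_by` in Python. It does roughly what LINQ's `GroupBy` gives you in C#. The type parameters let one function work for any element type, and the lambda passes in the behaviour that varies. `group_by` is written for illustration and is not taken from any library.

```python
from __future__ import annotations

from typing import Callable, Iterable, TypeVar

T = TypeVar("T")  # the element type
K = TypeVar("K")  # the key type


def group_by(items: Iterable[T], key: Callable[[T], K]) -> dict[K, list[T]]:
    """Group any kind of item by a key computed by the caller-supplied function."""
    groups: dict[K, list[T]] = {}
    for item in items:
        groups.setdefault(key(item), []).append(item)
    return groups


words = ["apple", "avocado", "banana", "blueberry", "cherry"]
# The lambda supplies the grouping rule inline; T and K are both str here.
by_first_letter = group_by(words, lambda w: w[0])
# {'a': ['apple', 'avocado'], 'b': ['banana', 'blueberry'], 'c': ['cherry']}

# The same generic function works for other types, e.g. ints grouped by parity (K is bool).
by_parity = group_by(range(6), lambda n: n % 2 == 0)
# {True: [0, 2, 4], False: [1, 3, 5]}
```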

Another interesting area was learning about best practices for front end development, and ways of integrating the client-side and server-side code.

The need to write code that runs quickly really comes to the fore here. It informs your decisions about how to pass data to and from the browser, and about where to process it.

For example, where possible, data processing for the initial page load should be carried out server side, and the resulting chunk of data injected directly into the JavaScript through the model. Any further requests for data, in response to user actions, can be mediated by an API layer, to keep the client side and server side code de-coupled.
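Here is a framework-neutral Python sketch of that pattern. The server serialises the data needed for the first render into the page, and any later requests go to the API. In ASP.NET MVC this would usually happen in a Razor view via the model. `render_page`, the `window.initialData` name, `/app.js` and the `/api/jobs` URL are all hypothetical.

```python
import json


def render_page(initial_data: dict) -> str:
    """Render a page with the data for the first render embedded as JSON."""
    # json.dumps leaves "<" untouched, so a value such as "</script>" could end the
    # script tag early. Escaping it as \u003c keeps the JSON valid and the markup safe.
    payload = json.dumps(initial_data).replace("<", "\\u003c")
    return (
        "<html><body><div id='app'></div>"
        f"<script>window.initialData = {payload};</script>"
        # Later data requests from app.js go to the API layer, e.g. fetch('/api/jobs?page=2').
        "<script src='/app.js'></script>"
        "</body></html>"
    )
```

With this approach, the browser can draw the first view without an extra round trip. The API stays the only channel for data needed after that.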

One big learning exercise this year was understanding dependency injection - a particularly tall order when there's debate among experts about how it's best carried out in the first place.

To summarise quickly, it's a way of keeping control and visibility of relationships between classes in an application, to ensure loose coupling and make code more testable.

Dependency injection goes hand in hand with the practice of programming to an interface. Rather than each class creating new instances of the other types it uses (its dependencies), a class's dependencies are injected into it at runtime, usually through its constructor. Interfaces, rather than interface implementations, are used as constructor parameters, to de-couple classes from their dependencies.

Injection takes place in a cascading process triggered by the retrieval of some top level class which nothing else depends on, early on in the lifecycle of the application. This is made possible by the use of DI containers, which register interfaces against interface implementations.

endjin have written an open source library for dependency injection, the composition framework. Using dependency injection makes testing easier because you can inject a mock implementation of an interface to control a dependency's behaviour, and focus testing on a particular part of the application.
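Here is a minimal sketch of constructor injection, a toy container, and a mock, written in Python rather than C#. It is not endjin's composition framework or the API of any real container. `IMessageSender`, `SmtpMessageSender`, `WelcomeService` and `Container` are hypothetical names, and an abstract base class stands in for a C# interface.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from typing import Callable
from unittest.mock import Mock


class IMessageSender(ABC):
    """Plays the role of a C# interface: consumers depend on this, not a concrete class."""

    @abstractmethod
    def send(self, to: str, body: str) -> None: ...


class SmtpMessageSender(IMessageSender):
    """A concrete implementation (stubbed here: it just prints)."""

    def send(self, to: str, body: str) -> None:
        print(f"SMTP -> {to}: {body}")


class WelcomeService:
    def __init__(self, sender: IMessageSender) -> None:
        # The dependency is injected through the constructor, typed as the
        # interface; WelcomeService never creates a sender itself.
        self._sender = sender

    def welcome(self, user: str) -> None:
        self._sender.send(user, f"Welcome, {user}!")


class Container:
    """A toy DI container that registers a factory against each interface/type."""

    def __init__(self) -> None:
        self._factories: dict[type, Callable[[Container], object]] = {}

    def register(self, key: type, factory: Callable[[Container], object]) -> None:
        self._factories[key] = factory

    def resolve(self, key: type) -> object:
        # Resolving a type runs its factory, which resolves that type's own
        # dependencies in turn, so one top-level call cascades down the graph.
        return self._factories[key](self)


# Composition root: wire everything up once, early in the application's lifecycle.
container = Container()
container.register(IMessageSender, lambda c: SmtpMessageSender())
container.register(WelcomeService, lambda c: WelcomeService(c.resolve(IMessageSender)))
app = container.resolve(WelcomeService)  # top-level object nothing else depends on

# In a test, inject a mock instead of the real sender and check the interaction.
mock_sender = Mock(spec=IMessageSender)
WelcomeService(mock_sender).welcome("sam")
mock_sender.send.assert_called_once_with("sam", "Welcome, sam!")
```

Only the composition root knows about `SmtpMessageSender`. Swapping in a different implementation, or a mock in tests, doesn't touch `WelcomeService` at all.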

I've learnt about asynchronous programming with .NET - luckily this has become much simpler recently with the introduction of .NET 4.5 and the await and async keywords, and much prompting from Visual Studio if best practice patterns are not followed.

The key messages drummed into me this year about async programming are these. Use it wherever possible. Use it consistently over an entire chain of methods, rather than mixing async and non-async code. And return a Task<T> or Task from async methods, never void, because an exception thrown in an async void method can't be caught by its caller.
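The same advice maps fairly directly onto Python's asyncio, which this small runnable illustration uses. A C# version would return Task<decimal> and use await in the same places. `fetch_price` is a hypothetical stand-in for an I/O call, and the prices are made up.

```python
from __future__ import annotations

import asyncio

PRICES = {"apple": 0.5, "pear": 0.75}


async def fetch_price(item: str) -> float:
    """Hypothetical stand-in for an I/O-bound call such as a web request."""
    await asyncio.sleep(0.01)  # simulate waiting on the network without blocking
    return PRICES[item]        # raises KeyError for an unknown item


async def basket_total(items: list[str]) -> float:
    # Async all the way: this function awaits its async callees instead of
    # blocking on them, and is itself awaitable (the C# equivalent returns Task<T>).
    prices = await asyncio.gather(*(fetch_price(i) for i in items))
    return sum(prices)


async def main() -> None:
    print(await basket_total(["apple", "pear"]))
    try:
        await basket_total(["apple", "mango"])
    except KeyError as missing:
        # The exception travels back up the awaited chain, so the caller can handle it.
        print(f"no price for {missing}")


if __name__ == "__main__":
    asyncio.run(main())
```

The closest Python analogue of C#'s `async void` is starting a task with `asyncio.create_task` and never awaiting it. An exception raised inside that task never reaches the code that started it, and asyncio can only log it.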

Tests aren't just an afterthought

I'd thought of tests as just a best practice for making sure bugs don't get introduced, but they turn out to be the driving force behind every application we write. We use a methodology known as behaviour-driven development (BDD). You start by writing specifications that define the behaviour users want from the application, then build the application against them, adding functionality until the tests pass.

The tests focus your efforts on building something that actually meets requirements. They also act as 'living documentation' for any future developers working on the project (including future you), and provide an ongoing way of checking that you've not broken any functionality.

We are big fans of the SpecFlow testing framework, which lets you write specifications/tests in human-readable language. This really makes you think about what you're trying to achieve. It also surfaces any issues with your plans (such as contradictory aims) before you start developing, in a form that can easily be discussed with product owners.
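SpecFlow binds each Given/When/Then line of a Gherkin specification to a C# step method via a regular expression. The toy Python runner below imitates that binding idea so the mechanism is visible. It is not SpecFlow's API, and the basket scenario, `step` decorator and `run_scenario` function are hypothetical.

```python
from __future__ import annotations

import re
from typing import Callable

# A specification in Gherkin-style Given/When/Then form (made-up example).
SCENARIO = """
Given a basket containing 2 apples
When the customer adds 3 apples
Then the basket contains 5 apples
"""

# Each binding pairs a regular expression with the function that implements the step.
STEP_BINDINGS: list[tuple[re.Pattern[str], Callable[..., None]]] = []


def step(pattern: str):
    """Decorator that binds a step function to matching specification lines."""
    def register(fn):
        STEP_BINDINGS.append((re.compile(pattern), fn))
        return fn
    return register


@step(r"a basket containing (\d+) apples")
def given_basket(ctx: dict, count: str) -> None:
    ctx["apples"] = int(count)


@step(r"the customer adds (\d+) apples")
def when_customer_adds(ctx: dict, count: str) -> None:
    ctx["apples"] += int(count)


@step(r"the basket contains (\d+) apples")
def then_basket_contains(ctx: dict, count: str) -> None:
    if ctx["apples"] != int(count):
        raise AssertionError(f"expected {count} apples, found {ctx['apples']}")


def run_scenario(text: str) -> dict:
    """Run each Given/When/Then line through its bound step; fail on unbound lines."""
    ctx: dict = {}
    for line in text.strip().splitlines():
        _keyword, _, rest = line.strip().partition(" ")
        for pattern, fn in STEP_BINDINGS:
            match = pattern.fullmatch(rest)
            if match:
                fn(ctx, *match.groups())
                break
        else:
            raise LookupError(f"no step binding for: {line.strip()}")
    return ctx


if __name__ == "__main__":
    print(run_scenario(SCENARIO))
```

The specification stays readable by a product owner, while the step bindings hold all the code.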

Using libraries

A lot of my university-based Computer Science training involved writing my own programs using only the standard packages offered by the framework.

Working as a developer, you quickly realise how much of your work involves consuming other libraries, including ones written by yourself or colleagues.

It would be an enormous waste of effort to write everything from scratch when you or other people have already built the functionality you need.

Using libraries requires a new set of skills:

- Assessing the existing libraries to find one which does what you need, follows currently accepted best practices, and seems likely to be supported into the future

- Installing and managing the packages and their dependencies using NuGet and the NuGet console

- Dealing with any package or reference version conflicts caused by installing a library

- Managing library updates

- Recognising when something you've written is going to be needed in future projects and should be converted to a library itself - this is another area I want to learn more about in year 2.

There is a place called package hell - and tricks for getting out of it

The downside of consuming libraries is 'package hell'… a place all developers must pass through at some time, to get to the sunny uplands of actually writing some code.

When each package can depend on other packages, and may require a specific range of versions of those packages, things can get a bit complex.

Here are a few tips for escaping:

Installing package A fails because another package, B, that it requires isn't present, and for some reason NuGet can't install B during the installation of A.

- Try individually installing the package B whose absence caused the problem, repeat patiently if there's a new complaint about another package

- If this doesn't work, try uninstalling packages and re-installing in a different order, with the packages that don't depend on any other packages first

The application won't build because it can't find the specific version of the package or assembly that it needs.

- Try updating the package using the package manager. If an update isn't shown in the GUI, try using the package manager console, specifying the version number if required

There are different versions of the same package or assembly, and the wrong one is being picked up somewhere in your application

- Use a binding redirect in the project, to make sure it chooses the correct version of the assembly

- Better still, update the application so that only one version of the package or reference is present for the entire solution. You can find out which package or reference is being used where in your application by right clicking on the reference in the references folder, and clicking Find code dependent on module

An inner circle of package hell is where you need to update a package A, which requires that some of the packages it uses (B, C and D) are updated. But this means that another package E, which uses the older version of D, no longer works.

- Check if an update for package E is available that lets it use the new version of package D

- Alternatively, use binding redirects

- If all else fails, find alternative libraries, or consider forking the troublesome library yourself if it's open source and essential to your application.
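The 'inner circle' above is really a question of whether the version ranges that different packages demand for the same dependency overlap. This Python sketch models that with simplified half-open ranges. Real NuGet ranges use interval notation such as `[1.0,2.0)` and have pre-release rules, which are ignored here. It picks the lowest matching version, mirroring NuGet's default preference for the lowest applicable version. The package names, versions, `Requirement` and `choose_version` are all made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional, Tuple

Version = Tuple[int, int, int]


def parse(text: str) -> Version:
    """Parse 'major.minor.patch' into a tuple so versions compare numerically."""
    major, minor, patch = (int(part) for part in text.split("."))
    return (major, minor, patch)


@dataclass(frozen=True)
class Requirement:
    """`package` needs `dependency` with minimum <= version < maximum (None = unbounded)."""
    package: str
    dependency: str
    minimum: Version
    maximum: Optional[Version] = None

    def allows(self, version: Version) -> bool:
        return self.minimum <= version and (self.maximum is None or version < self.maximum)


def choose_version(requirements: list[Requirement], available: list[str]) -> Optional[str]:
    """Lowest available version satisfying every requirement, or None if they conflict."""
    candidates = [v for v in available if all(r.allows(parse(v)) for r in requirements)]
    return min(candidates, key=parse, default=None)


if __name__ == "__main__":
    available_d = ["1.4.0", "1.9.2", "2.0.0", "2.1.0"]
    a_needs_d = Requirement("A", "D", parse("2.0.0"))                      # updated A needs D >= 2.0
    old_e_needs_d = Requirement("E", "D", parse("1.0.0"), parse("2.0.0"))  # old E needs D < 2.0
    new_e_needs_d = Requirement("E", "D", parse("2.0.0"))                  # updated E accepts D 2.x

    print(choose_version([a_needs_d, old_e_needs_d], available_d))  # None: the ranges don't overlap
    print(choose_version([a_needs_d, new_e_needs_d], available_d))  # 2.0.0
```

Updating E is what makes the ranges overlap again. A binding redirect works at a different level: it tells the .NET runtime which assembly version to load, rather than changing which package versions get installed.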

Other things that commonly go wrong and ways of fixing them

A lot of time is spent setting up the development environment, and dealing with issues in this environment.

For example, a solution not building when you're pretty sure it should:

- Delete the bin & obj folders, and re-build the solution. You might need to close Visual Studio to be able to do this. In extreme cases, literally switch the machine off and on again during this process

- Check the version of any SDKs required by the application - for example, some applications may require that you're using an older version of the Azure SDK.
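If you find yourself deleting bin and obj folders often, a small script saves some clicking. This is a hypothetical Python helper, not part of Visual Studio or any endjin tooling. It deletes folders recursively, so try it with `dry_run=True` first, and close Visual Studio beforehand so files aren't locked.

```python
from __future__ import annotations

import shutil
from pathlib import Path


def clean_build_output(solution_dir: str | Path, dry_run: bool = False) -> list[Path]:
    """Delete every bin/ and obj/ folder under solution_dir.

    Returns the folders that were deleted (or, with dry_run=True, would be).
    """
    root = Path(solution_dir)
    targets = sorted(
        p for p in root.rglob("*")
        if p.is_dir() and p.name.lower() in {"bin", "obj"}
    )
    # Skip folders nested inside another target (e.g. obj/bin): deleting the
    # outer folder removes them anyway.
    targets = [p for p in targets if not any(t in p.parents for t in targets)]
    if not dry_run:
        for folder in targets:
            shutil.rmtree(folder)  # raises PermissionError if a file is still locked
    return targets


if __name__ == "__main__":
    for folder in clean_build_output(".", dry_run=True):
        print(f"would delete {folder}")
```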

Bug investigation tactics

The joy of realising what's making it do that thing is the main reason I love this job. I've learnt to:

- Quickly try to reproduce the unexpected behaviour as experienced by users, to get an idea of where to focus the investigation and to understand what logically can and cannot be the cause, given the situations in which the unexpected behaviour occurs and the situations in which it does not. This might sometimes be enough to work out the cause of the problem

- If the application was behaving correctly beforehand, check source control to see exactly what has changed since it was last working

- Set up a test to prove the buggy behaviour, then another test that proves the correct behaviour (if you don't have one already). This lets you reproduce the undesired behaviour quickly and consistently, and deterministically prove that it is fixed in a given set of situations

- Step through every statement in the run up to the unexpected behaviour, and get a thorough understanding of the components involved in this part of the application, and the behaviour of any external services they interact with. Checking names and values of the data being passed around is often informative, even if they appear to be correct. If a simple run through doesn't show anything untoward, consider everything, and carry out experiments to rule out the options one by one

- If you're not seeing any exceptions that explain the unwanted behaviour, alter Visual Studio's debug settings to break when Common Language Runtime exceptions are thrown

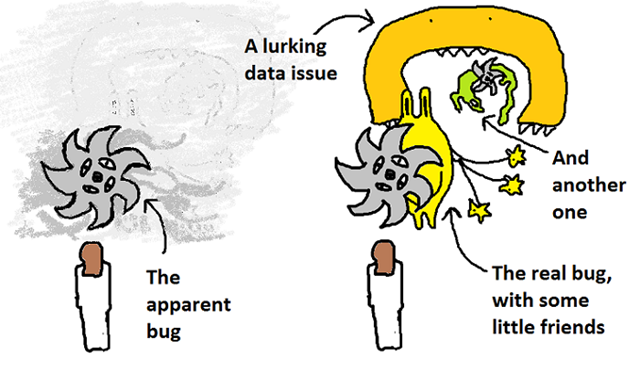

- Really confusing behaviour might be the result of several bugs, rather than one.
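For the "test to prove the bug" step, here's a minimal pytest-style sketch using a made-up pagination bug. Both versions of the function sit side by side here so the example runs. In a real codebase there would be a single function: the first test passes against the unfixed code and is then replaced, while the second fails until the fix goes in. `page_count_buggy` and `page_count` are hypothetical.

```python
def page_count_buggy(total_items: int, page_size: int) -> int:
    # Floor division silently drops a final, partially filled page.
    return total_items // page_size


def page_count(total_items: int, page_size: int) -> int:
    # Ceiling division: any remainder needs one more page.
    return (total_items + page_size - 1) // page_size


def test_reproduces_reported_bug() -> None:
    # Pins down what users saw: 11 results at 5 per page, but only 2 pages.
    assert page_count_buggy(11, 5) == 2


def test_page_count_includes_partial_page() -> None:
    # Specifies the correct behaviour, including the edge cases around it.
    assert page_count(11, 5) == 3
    assert page_count(10, 5) == 2
    assert page_count(0, 5) == 0


if __name__ == "__main__":
    test_reproduces_reported_bug()
    test_page_count_includes_partial_page()
    print("both tests pass")
```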

Pictorial representation of the year's best bug

Azure 101

I had some limited experience of AWS when I came to endjin, but Azure was a new world. There's quite a big gap for a newcomer between the generic concepts of IaaS, PaaS, SaaS and the multitude of Azure products and services, many of which address specific needs that occur when building distributed applications, and affect the architecture of these applications.

For example, to host a website or web service on Azure, you have broadly three choices, on a spectrum of control vs ease of management and agility:

- Custom Windows or Linux VMs (IaaS), with a web server and other applications of your choice installed

- Cloud Services, a PaaS service which provides Azure-managed VMs where you can run Web Roles (services hosted in IIS which respond to HTTP requests) and Worker Roles for background tasks. Worker Roles can scale out by adding instances without taking resources from the Web Roles. Applications written to run on a cloud service will usually make use of other Azure-managed services such as Azure Storage

- Azure Websites, a PaaS hosting service which provides built-in auto-scaling and load balancing. It also offers 'WebJobs', the ability to carry out background processing using a .bat, .sh or other script, or any .NET application compiled with the WebJobs SDK, in the same VM as the website, running either continuously or on a trigger. As with Cloud Services, Azure Websites will usually make use of other Azure-managed services. There is an equivalent service for running backends for mobile apps, Mobile Services.

This is just scratching the surface of a larger 'ecosystem' encompassing:

- Specialised tools that integrate with your applications such as Media Services, CDN, Azure Cache and Redis Cache, Notification Hubs (push notifications), Service Bus (messaging) and Event Hubs (event ingestion), and Application Insights (telemetry)

- Tools for storage, including SQL Database, NoSQL table storage, and the document database DocumentDB

- Tools for data processing and analytics such as HDInsight, the Hadoop implementation from Azure and Hortonworks

- Identity and access management tools

- Services for automation and backup such as Azure Automation

- Networking services such as Virtual Networks and Traffic Manager

- Services for hybrid integration such as Backup, Site Recovery, BizTalk and ExpressRoute

- Visual Studio Online (the SaaS bit!)

For the first half of the year my encounters with Azure were mostly storage related, in particular using Azure Blob Storage and Azure's NoSQL key-attribute Table Storage, which were quite a change from the RDBMS SQL databases I'd used before.

I've mostly been working on web applications, where efficient data access is the key concern informing data structures. It's been interesting trying to stop seeing every data structure as an RDBMS table, and instead see documents and metadata.

In particular, I've learnt to use the endjin storage library that wraps the Azure Storage SDK, simplifying the process of setting up and using a repository consisting of blobs (usually JSON objects) and tables containing metadata for these blobs.
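The blob-plus-metadata pattern can be sketched without any Azure dependency. This is an in-memory Python model of the idea, not the endjin storage library or the Azure Storage SDK. Dictionaries stand in for a blob container and a table, and `InMemoryDocumentRepository`, `save`, `load` and `query_partition` are hypothetical names.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field


@dataclass
class InMemoryDocumentRepository:
    """Toy model of a repository: JSON documents in 'blobs' plus a metadata 'table'.

    As in Azure Table Storage, each metadata entity is identified by a
    PartitionKey and a RowKey; here the partition is the document type and
    the row key is the document id. Dictionaries stand in for both stores.
    """

    blobs: dict = field(default_factory=dict)  # blob name -> JSON text
    table: dict = field(default_factory=dict)  # (PartitionKey, RowKey) -> entity

    def save(self, doc_type: str, doc_id: str, document: dict, **metadata: str) -> None:
        blob_name = f"{doc_type}/{doc_id}.json"
        self.blobs[blob_name] = json.dumps(document)
        # The entity holds small, queryable properties plus a pointer to the blob.
        self.table[(doc_type, doc_id)] = {
            "PartitionKey": doc_type,
            "RowKey": doc_id,
            "BlobName": blob_name,
            **metadata,
        }

    def load(self, doc_type: str, doc_id: str) -> dict:
        # A lookup by PartitionKey + RowKey (a "point query") is the cheapest
        # kind of query in Table Storage.
        entity = self.table[(doc_type, doc_id)]
        return json.loads(self.blobs[entity["BlobName"]])

    def query_partition(self, doc_type: str, **filters: str) -> list:
        # Filter on metadata within one partition, without reading any blobs.
        return [
            entity
            for (partition, _), entity in self.table.items()
            if partition == doc_type
            and all(entity.get(name) == value for name, value in filters.items())
        ]


if __name__ == "__main__":
    repo = InMemoryDocumentRepository()
    repo.save("job", "42", {"title": "Apprentice", "skills": ["C#", "Azure"]},
              Location="London", Status="Open")
    repo.save("job", "43", {"title": "Senior engineer"}, Location="London", Status="Closed")
    print([e["RowKey"] for e in repo.query_partition("job", Status="Open")])
    print(repo.load("job", "42")["title"])
```

The design choice is to keep the table rows small and queryable, and to read the full JSON document only once you know which one you need.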

Towards the end of the year, I've been introduced to a wider range of Azure products, beginning with a trip to Barcelona for a data and analytics training event which covered HDInsight, Stream Analytics and Machine Learning. Recently I've been putting Azure Machine Learning to use to help automate the repetitive parts of generating our weekly Azure newsletter (the Azure Weekly :) )

Luckily for me, Azure is one of endjin's areas of expertise. Howard, Matthew & designer Paul have recently made a downloadable Azure Technology Selector poster to guide people through the many options available.

Difficult fun with data

I received a huge amount of support from endjin to complete my MSc project, which was to build and analyse a web information extraction system. I was completing the final part of the MSc through distance learning, after moving to the bright lights of London, so endjin's show and tell sessions were a good motivator.

Putting our tools to work, I scheduled work in sprints using YouTrack, and used GitHub for source control. Crucially, endjin gave me paid time at work to complete the project, without which I probably wouldn't have managed.

Web information extraction (IE) is the task of retrieving information about relationships between entities from structured (tabular), semi-structured or unstructured (free text) sources on the web. IE can be seen as part of a wider movement to automate the gathering and sharing of human knowledge.

It spans the fields of ontology, scraping, natural language processing and machine learning. The project involved a period of reading up on information extraction systems over the years. These ranged from wrappers aimed at extraction from a single known source, to systems that use machine learning to extract knowledge from unseen sources without the need for manually labelled training data, such as the Turing Centre's TextRunner and later Open IE systems.

This was accompanied by a crash course in machine learning and its application to the rather complex task of getting facts from semi-structured text. I used the NUML library for machine learning, and the .NET wrappers for Stanford's part of speech tagging and named entity recognition packages.
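To make "relationships between entities" concrete, here's a toy, single-pattern extractor in Python. It is much closer to the early hand-written approaches than to TextRunner-style open IE, which learns relation patterns rather than hard-coding them. It also skips the part-of-speech tagging and named entity recognition that a real pipeline would use. The pattern and `extract_capital_relations` are made up for illustration.

```python
import re

# One hand-written pattern: "<Entity> is the capital (city) of <Entity>", where an
# entity is approximated as a run of capitalised words.
ENTITY = r"[A-Z][a-z]+(?: [A-Z][a-z]+)*"
CAPITAL_OF = re.compile(rf"\b({ENTITY}) is the capital (?:city )?of ({ENTITY})")


def extract_capital_relations(text: str) -> list:
    """Return (subject, relation, object) triples found in free text."""
    return [(m.group(1), "capital_of", m.group(2)) for m in CAPITAL_OF.finditer(text)]


if __name__ == "__main__":
    text = ("Madrid is the capital of Spain. Many visitors say Lisbon is the capital city "
            "of Portugal, and Barcelona is the capital of Catalonia.")
    for triple in extract_capital_relations(text):
        print(triple)
```

Every new relation needs another hand-written pattern like this one. That limitation is what pushed the field towards systems that learn their extraction patterns.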

Thinking like a developer

One of the hardest tasks of the year was to train myself to ask people for help quickly if I'm stuck rather than just having 'ten more minutes' (which turns into hours) trying to work it out.

From my super-effective colleagues, I've learnt the benefits of a calm and rational approach. The most effective attitude for software development seems to be an unemotional one. For example, try not to take a persistent problem personally when you have a deadline to meet; your computer is not actually trying to kill you.

Someone who can carry on plugging away at the issue with the same patience as the machine giving them baffling error messages will be more likely to fix the problem in time.

I can't help anthropomorphising, so instead I try to think of this process as communicating with an intelligent alien which is trying to help, across vast cultural and linguistic barriers.

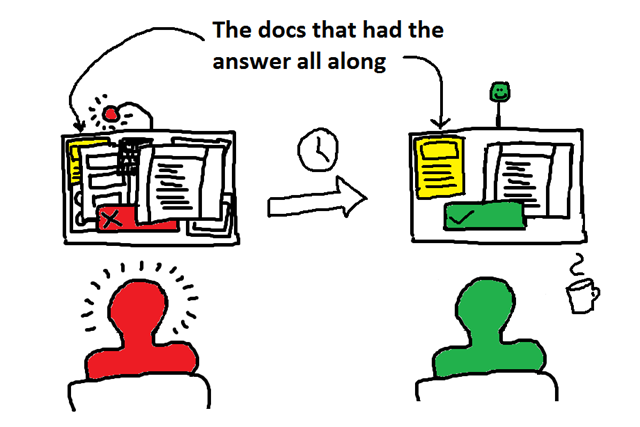

The answer is often in the error message you first got back or in the docs that you had open all along…

Working as a developer gives you a lot of responsibility - for the end user's ability to do things which are important to them using the application (like apply for a job), and for the data that belongs to the client and end user.

I find this very daunting, but the same principle applies – a calm, rational attitude is the best way of working safely, combined with tests which prove that the application is behaving correctly.

On to Year 2, and beyond

This year I'm looking forward to deepening my knowledge of C#, the .NET framework and Azure, as well as keeping up with the changes to these technologies – it would help if things stayed still while you tried to learn them but that is not the case!

At least it puts you on a more level footing with experienced developers. I want to learn more about best practices for architecting solutions, and the intersection between architecture and the choice of Azure services, and to carry on playing with Azure's data processing technologies such as the fabulous machine learning service.

I'd like to become more confident as a developer – at the moment there's still quite a lot of the fear that comes from a lack of knowledge combined with an awareness of the power you hold as a developer.

Experience is probably the key to feeling more secure.

A year in, and I continue to be fascinated and concerned by the use of technology to automate increasingly complex human behaviours, to glean new knowledge from the huge amounts of data being amassed by search engines and other applications, and to join people around the world.

As a longer term aim, I would like to find ways of applying technology to generate and share knowledge, and help people form connections for the good of society.

If you're interested in joining endjin, whether that's as an apprentice or as a senior software engineer, please get in touch with us at hello@endjin.com