Bye bye Azure Functions, Hello Azure Container Apps: Migration of the existing test environment

In this series of posts, we've been looking at the process we went through to migrate APIs that support one of our internal applications from Azure Functions to Azure Container Apps.

Part one covers the background to the project, and parts two, three and four go into detail about the changes that were needed to get the code running on ACA.

This part of the series is a bit of an anomaly. As we mentioned in part three, our application was hosted in Azure's UK South region. At the time we were going through this process, ACA wasn't available in UK South. We decided to press on anyway, but this meant we needed to migrate other resources - App Configuration, Key Vault and storage - across to North Europe, where we ended up deploying our ACA instance.

Step 1: Analysis

The first thing we did was review the resources that make up our application (ignoring the Function Apps and their supporting storage accounts) and determine what requirements we had for each. The resources are as follows:

- An App Insights resource

- An App Configuration resource

- A Key Vault resource

- A storage account in which Key Vault diagnostic data is stored

- A B2C Tenant

- A Static Web App

- A storage account in which application data is stored, using blob storage only

We were quickly able to split our resources into four categories:

- Resources that can be deleted and redeployed into the new region with either no data loss, or acceptable data loss
  - App Insights (accepting we would lose historical data)
  - App Configuration
  - Key Vault and its supporting storage account

- Resources that can be moved to the new region via the portal/az cli/PowerShell
  - The B2C Tenant

- Resources that are either region free, or whose region is irrelevant
  - The Static Web App

- Resources that can't be moved, and whose data must be preserved
  - The storage account in which application data is stored

We also identified a further issue: we store data relating to user claims, allowing us to determine who has access to what. The ability to manage these claims is locked down using AAD. One of the APIs has the ability to manage claims, meaning that access had been granted using its Managed Identity. Unfortunately, we're using System Assigned Managed Identities, meaning that when we delete the Function App and replace it with a Container App, the Managed Identity will be different.
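To illustrate the kind of fix-up this implies, here's a minimal sketch in Python (our notebook used PowerShell). The record shape, the `principalId` field name and the IDs are all assumptions made up for illustration rather than the real claims schema. The idea is simply to swap each old identity ID for its replacement and leave every other record untouched.

```python
from typing import Dict, List


def remap_principal_ids(claims: List[dict], id_map: Dict[str, str]) -> List[dict]:
    """Return a copy of the claims with old identity IDs swapped for new ones.

    Records whose ID is not in id_map (e.g. ordinary users) are copied unchanged.
    """
    return [
        {**claim, "principalId": id_map.get(claim["principalId"], claim["principalId"])}
        for claim in claims
    ]


# Hypothetical data: one claim held by the old Function App identity, one by a user.
claims = [
    {"principalId": "old-function-app-id", "permission": "claims/manage"},
    {"principalId": "some-user-id", "permission": "claims/read"},
]
# Hypothetical mapping from the deleted identity to the Container App's identity.
id_map = {"old-function-app-id": "new-container-app-id"}

updated = remap_principal_ids(claims, id_map)
print(updated)
```

Building a new list rather than editing records in place means the original data is still available for comparison if something looks wrong afterwards.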

Step 2: Exploration, experimentation, and the right tool for the job

The next step was to work out exactly how to make the changes above and, ideally, put together a script that we would be able to use to migrate our production environment when the time came.

The challenge with this type of activity is that you need a means to capture notes, explain the steps you're working on and include snippets of script or code to make the necessary changes. Up until recently, this has normally meant keeping some kind of shared document with the notes in and referring out to scripts stored alongside it. However, recent years have seen the introduction of notebooks to the .NET ecosystem, with Polyglot Notebooks (formerly known as .NET Interactive Notebooks) now supporting a variety of languages.

Notebooks allow you to integrate code (and its output) and explanatory text into a single document. The text is normally written in Markdown, allowing for formatting, embedding of imagery and so on.

This makes them an outstanding tool for our task. We could work in a notebook to map out the steps in the migration, detail manual steps where required, and experiment with snippets of PowerShell to automate steps where possible. We could easily capture common error scenarios and their resolutions, reorder steps as we learned more about the process and so on.

I'd come across notebooks before, and my colleagues have used them extensively in other projects, but this was my first experience using one in anger and it's easy to see how they could be used in all sorts of scenarios.

There's a Visual Studio Code extension for Polyglot Notebooks, allowing you to create, edit and execute them from inside the editor. It supports both the older .ipynb and the experimental .dib format; in our experience, the .dib format is preferable - it is more full-featured for our use cases and it's a lot easier to work with in diff tools, which makes PR reviews more pleasant.

Step 3: Mapping out the migration process

The next step was to map out the steps of the migration. This list evolved as we went through the process, but ended up as follows:

- Create a temporary resource group & storage accounts for migration

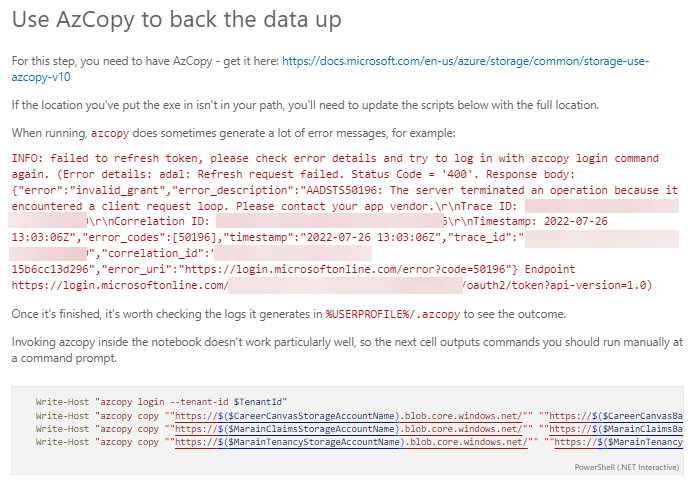

Because we wanted to recreate storage accounts with the same name in a different region, we'd need to delete the existing account first. As with most Azure resources, storage accounts don't support renames so this meant creating a new temporary account to hold the data while we carried out the delete & recreate step. - Use AzCopy to back the data up

The go-to tool for copying data between storage accounts is AzCopy. We would use this to move data from the source storage accounts to the temporary ones, then (in step 5) back into the newly created ones. - Move the B2C tenant into the temporary resource group The B2C tenant was the only thing we could move, and it made more sense to do this than to redeploy it; when our deployment pipeline was originally built, automating deployment of a B2C tenant and its configuration was challenging so we don't currently have everything needed in script form. This is a gap we'll be plugging in the near future.

- Delete the old environment resources and resource group

- Deploy the new environment

This is done by simply rerunning our deployment script retargetted at the North Europe region instead of UK South. - Move the B2C tenant into the new resource group

- Use AzCopy to restore the data

- Update Claims data with the new managed identity ids As mentioned above, the fact that our system assigned managed identities would be recreated meant they'd end up with new Ids. We would need to modify the claims data accordingly.

- Verify new environment

Run through testing on the newly redeployed environment to ensure that everything works as expected and all data is in place. - Clear down temporary resource group & storage accounts

Finally, once we're happy all is well, we could delete the temporary resources we created in step 1.

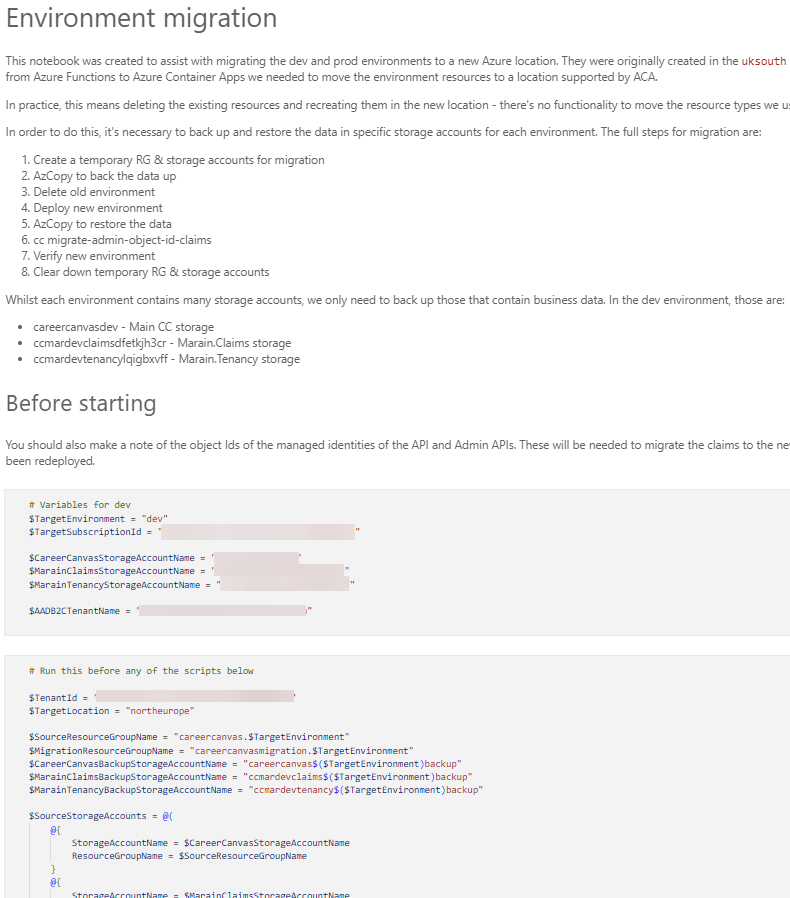
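The backup and restore steps are both plain AzCopy copies with the source and destination swapped. Here's a minimal sketch in Python (our notebook used PowerShell) that only assembles the command line. The account and container names are hypothetical, the URL form assumes the standard public-cloud blob endpoint, and authentication (a SAS token appended to each URL, or a prior `azcopy login`) is deliberately left out.

```python
from typing import List


def blob_container_url(account: str, container: str) -> str:
    """Build the blob endpoint URL for a container (public-cloud endpoint assumed)."""
    return f"https://{account}.blob.core.windows.net/{container}"


def azcopy_copy_command(src_account: str, dst_account: str, container: str) -> List[str]:
    """Assemble an `azcopy copy ... --recursive` command as an argument list."""
    return [
        "azcopy",
        "copy",
        blob_container_url(src_account, container),
        blob_container_url(dst_account, container),
        "--recursive",  # copy everything under the container, not just one blob
    ]


# Hypothetical names: "appdata" is the real account, "appdatatemp" the temporary one.
backup = azcopy_copy_command("appdata", "appdatatemp", "claims")
restore = azcopy_copy_command("appdatatemp", "appdata", "claims")

print(" ".join(backup))
print(" ".join(restore))
```

Returning the command as a list means it could be handed straight to something like `subprocess.run(backup, check=True)` without worrying about shell quoting.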

Step 4: Putting together the notebook

Our final notebook was relatively simple. It contained an introductory section with background information and an explanation of the process, followed by some PowerShell cells to set up variables that will be used elsewhere. They also run the Connect-AzAccount command to authenticate the user executing the script.

After this, we have a section of the notebook for each of the steps listed above, containing the necessary code and notes to enable anyone picking up the notebook to understand and execute that step. Even the simple steps - e.g. deletion of resource groups - were scripted to avoid any need to jump in and out of the Azure portal.

For example, here's what the section for the second step looks like:

This section should give you an idea of how useful the notebook approach can be. We've been able to capture the script needed, plus useful information about how to prepare, execute and validate that it's run correctly as well as details of a potential error scenario we've encountered and investigated.

Step 5: Execution

Once we'd put the notebook together, our intention was to use it to migrate the dev environment, capturing any additional learning as we went.

There's not a lot to report on this; the data migration took a little longer than we initially expected, primarily because our data is stored as a large number of small blobs. We added some extra detail to the notebook during the process, but the testing and experimentation we'd already done meant that each individual operation was smooth and the end-to-end process worked well.

Summary

Polyglot Notebooks have been an excellent addition to the .NET toolbox. As well as the more common experimentation scenarios, there are two areas where I think they really shine.

The first is operational support; building your runbooks as Polyglot Notebooks for common support tasks is a huge step up from just having a library of scripts. The ability to enrich the code with well-formatted explanatory content containing links, images, tables, etc. is a significant improvement on comments, and the approach of splitting code between cells that carry out steps in the process makes it possible to build far more flexible tools for support staff.

The second is sample code. Here again, the ability to intersperse snippets of code with well-formatted explanatory text is invaluable. We've recently started an internal project to update our open source libraries with these types of examples - you can see the first examples in our Corvus.Extensions repository.

There are plenty of other great use cases for Polyglot Notebooks - for example, I haven't even touched on the visualisation capabilities in this post. So, if you haven't spent any time with them yet I strongly recommend you download the Visual Studio Code extension and check out the samples in the GitHub repository.

Footnote

Three weeks after we put together this notebook, but thankfully before we migrated the production environment, Azure Container Apps was made available in the UK South region. This meant that the majority of steps above were redundant; we were able to simply run our deployment scripts to provision the new resources then remove the old Function Apps once everything was running successfully.

We haven't bothered migrating our development environment back as there isn't any particular need. However, we were a little surprised to note that ACA is more expensive in UK South than in North Europe:

| | UK South (£/sec) | North Europe (£/sec) | Difference % |
|---|---|---|---|
| vCPU (Active usage) | 0.0000294 | 0.0000208 | -29 |
| vCPU (Idle usage) | 0.0000035 | 0.0000026 | -26 |
| Memory (/GiB) (Active usage) | 0.0000035 | 0.0000026 | -26 |
| Memory (/GiB) (Idle usage) | 0.0000035 | 0.0000026 | -26 |

Looking at the stats, vCPU and memory costs in both active and idle modes are between 26% and 29% cheaper in North Europe than they are in UK South - so this is clearly something worth thinking about if you're planning to host on Azure Container Apps.
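The difference column is just the relative change from the UK South price, rounded to a whole percent. A few lines of Python are enough to check it, using the per-second vCPU prices from the table above (the function and variable names are only illustrative):

```python
def percent_difference(uk_south: float, north_europe: float) -> int:
    """Relative price change from UK South to North Europe, as a whole percent."""
    return round((north_europe - uk_south) / uk_south * 100)


# Per-second prices (£) copied from the table above: (UK South, North Europe).
prices = {
    "vCPU (Active usage)": (0.0000294, 0.0000208),
    "vCPU (Idle usage)": (0.0000035, 0.0000026),
}

for meter, (uk_south, north_europe) in prices.items():
    print(f"{meter}: {percent_difference(uk_south, north_europe)}%")
# prints:
# vCPU (Active usage): -29%
# vCPU (Idle usage): -26%
```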