Adventures in Dapr: Episode 4 - Containerising with Visual Studio

At the end of the previous episode we had migrated the last of the infrastructure services by switching from using Mosquitto to Azure Storage Queues. We also dabbled with the Dapr programming model to tweak the behaviour of the Simulation app. In this episode we will leave behind our self-hosted Dapr environment and look at what is needed to containerise the whole solution, whilst maintaining our ability to run it locally and keep using the Azure platform services we've migrated to.

The sample already provides a Kubernetes-based deployment, but we're going to concentrate on a simple container-based solution that can integrate nicely with a low friction dev inner-loop. To do this we're going to use Visual Studio and its Container Tools as, in my opinion, it currently has slicker support for multi-app debugging than Visual Studio Code.

Specifically, this is the plan:

- Get the sample running in Visual Studio

- Build container images for each of our services

- See what changes are needed for the Dapr component configuration

- Find a way to share the Dapr component configuration across containers

- Use Docker Compose to get everything running and connected

- Test whether everything still works!

As before you can follow along with these changes via the episode's branch in the supporting GitHub repo.

Create a new Visual Studio solution from existing projects

This is much easier to walk through now that we have the dotnet CLI. Open a terminal and navigate to your copy of the adventures-in-dapr repo:

- Create a new solution file:

PS:> cd src PS:> dotnet new solution -n dapr-traffic-control - Add all the projects to the solution (NOTE:

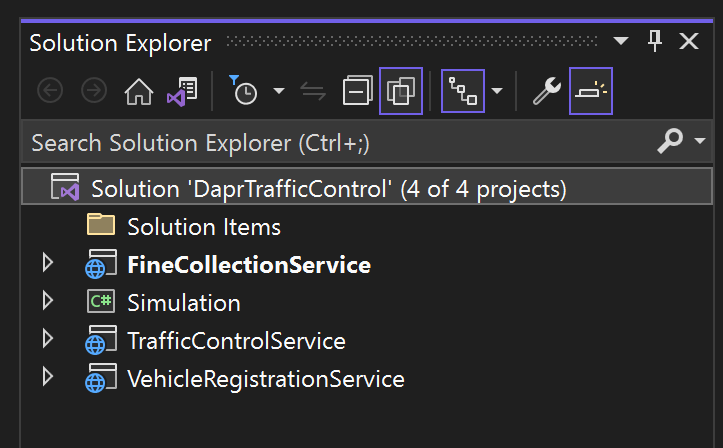

gciis an alias forGet-ChildItem):PS:> gci -recurse *.csproj | % { dotnet sln add $_ } - Once all the projects are added, opening the solution in Visual Studio should look like this:

- Finally, before we change anything, ensure the solution builds successfully
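If you are not using PowerShell, the project-discovery step can be scripted in other ways. The following is a minimal cross-platform sketch in Python, assuming it is run from the `src` folder with the `dotnet` CLI on the PATH; it relies on `dotnet sln add` accepting several project paths in a single call. The helper names `find_projects` and `build_sln_add_command` are hypothetical.

```python
from pathlib import Path


def find_projects(root: Path) -> list[Path]:
    """Recursively find every .csproj file under root, sorted for a stable order."""
    return sorted(root.rglob("*.csproj"))


def build_sln_add_command(solution: str, projects: list[Path]) -> list[str]:
    """Build a single `dotnet sln <solution> add <project> ...` command line."""
    return ["dotnet", "sln", solution, "add", *(str(p) for p in projects)]
```

For example, after creating the solution file as above, `subprocess.run(build_sln_add_command("dapr-traffic-control.sln", find_projects(Path("."))), check=True)` would add every project in one invocation rather than one call per project.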

Enabling a Visual Studio Solution to use Docker Compose

With the solution set up, we can move on to configuring Visual Studio to build the projects as containerised applications.

- Ensure you have the Visual Studio 'Container Development Tools' feature installed

- Ensure Docker is running

- Enable the 'Container Orchestrator' feature by right-clicking on one of the projects and selecting 'Add' --> 'Container Orchestrator Support ...'

- Choose 'Docker Compose':

- We'll be running the services in Linux-based containers:

- You should now have a new 'docker-compose' project in the solution:

- Viewing the generated docker-compose.yml file, you should see an entry for the project you added:

version: '3.4'

services:
  trafficcontrolservice:
    image: ${DOCKER_REGISTRY-}trafficcontrolservice
    build:
      context: .
      dockerfile: TrafficControlService/Dockerfile

- This step will also have updated the project file with some additional properties and a reference to the Microsoft.VisualStudio.Azure.Containers.Tools.Targets package

- Repeat the above steps for the 3 remaining projects and your docker-compose.yml file should look something like this:

version: '3.4'

services:
  trafficcontrolservice:
    image: ${DOCKER_REGISTRY-}trafficcontrolservice
    build:
      context: .
      dockerfile: TrafficControlService/Dockerfile

  vehicleregistrationservice:
    image: ${DOCKER_REGISTRY-}vehicleregistrationservice
    build:
      context: .
      dockerfile: VehicleRegistrationService/Dockerfile

  finecollectionservice:
    image: ${DOCKER_REGISTRY-}finecollectionservice
    build:
      context: .
      dockerfile: FineCollectionService/Dockerfile

  simulation:
    image: ${DOCKER_REGISTRY-}simulation
    build:
      context: .
      dockerfile: Simulation/Dockerfile

- Switch Visual Studio to use container-based hosting/debugging:

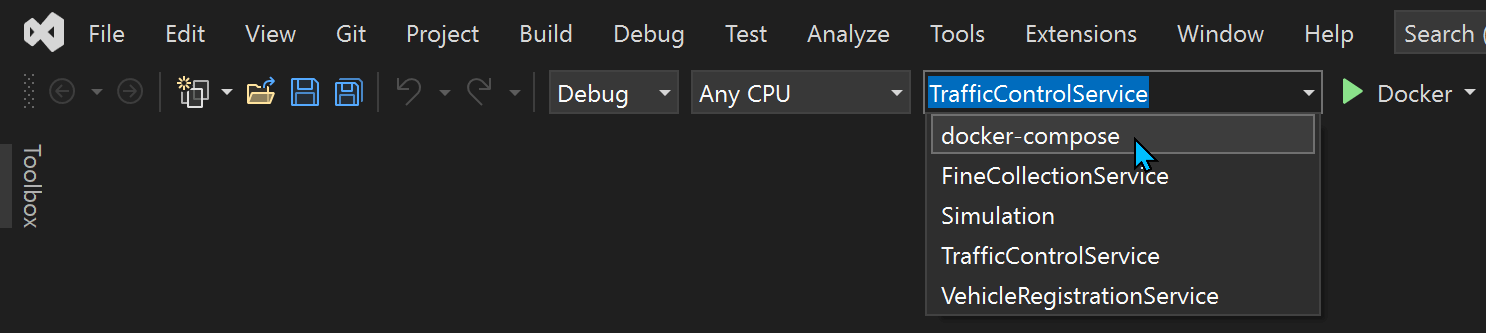

- Change the startup project to be 'docker-compose':

You should now see some activity in the 'Container Tools' Output window as Visual Studio tries to build each of the containers that it will use when you Run or Debug the solution. Unfortunately, you will see that this fails with errors similar to those shown below:

Let's fix those errors!

Fixing Dockerfile Context Path Errors

As mentioned earlier, the sample already supports running the services in Kubernetes, so there are existing Dockerfiles in the repository. The errors we see are due to the Docker build process being unable to find the associated Visual Studio project files where it expects them:

Step 3/8 : COPY VehicleRegistrationService.csproj ./

COPY failed: file not found in build context or excluded by .dockerignore: stat VehicleRegistrationService.csproj: file does not exist

When building a container image you need a 'build context' directory: its contents are transferred from the machine running the docker build command to the Docker engine that actually builds the image, and the source paths in COPY instructions are resolved relative to it.

The existing Dockerfiles were written expecting the context directory to be the one they reside in, which is the usual convention when running docker build from a project folder. In our case, however, the image build process is being orchestrated by the docker-compose.yml file, and the keen-eyed amongst you may have already noticed that each service in that file has a context property.

Note that the context value of '.' is resolved relative to the compose file rather than the Dockerfile, and the dockerfile path is in turn resolved relative to that context. We could fix this by updating either the docker-compose.yml file or each Dockerfile, but since we don't want these changes to break the Kubernetes use-case, let's fix the compose file:

version: '3.4'

services:
  trafficcontrolservice:
    image: ${DOCKER_REGISTRY-}trafficcontrolservice
    build:
      context: ./TrafficControlService
      dockerfile: dockerfile

  vehicleregistrationservice:
    image: ${DOCKER_REGISTRY-}vehicleregistrationservice
    build:
      context: ./VehicleRegistrationService
      dockerfile: Dockerfile

  finecollectionservice:
    image: ${DOCKER_REGISTRY-}finecollectionservice
    build:
      context: ./FineCollectionService
      dockerfile: Dockerfile

  simulation:
    image: ${DOCKER_REGISTRY-}simulation
    build:
      context: ./Simulation
      dockerfile: dockerfile

We will also take the opportunity to fix the dockerfile references to accommodate the inconsistent casing of those filenames across the projects - this way we avoid any issues when running under Linux with its case-sensitive filesystem.
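Errors like the `COPY failed` one above can be caught before a build by checking each `COPY`/`ADD` source against the intended context directory. The following is a simplified sketch under stated assumptions, not a full Dockerfile parser: it only understands single-line, shell-form instructions, skips multi-stage `--from=` copies and URL sources, and ignores `.dockerignore`. The function name `missing_copy_sources` is hypothetical. Because it uses the host filesystem, it will only flag casing mismatches when run on a case-sensitive filesystem such as Linux.

```python
import glob
import os
import shlex


def missing_copy_sources(context_dir: str, dockerfile_path: str) -> list[str]:
    """Return COPY/ADD sources from the Dockerfile that do not exist in context_dir.

    Simplifications (this is not a full Dockerfile parser):
    - only single-line, shell-form instructions are checked (JSON form is skipped)
    - `--from=` copies read from another build stage, so they are skipped
    - URL sources and .dockerignore rules are ignored
    """
    missing: list[str] = []
    with open(dockerfile_path, encoding="utf-8") as dockerfile:
        for raw_line in dockerfile:
            line = raw_line.strip()
            if not line or line.startswith("#"):
                continue
            # Check the instruction name first so other lines are never tokenised.
            if line.split(maxsplit=1)[0].upper() not in ("COPY", "ADD"):
                continue
            args = shlex.split(line)[1:]
            if not args or args[0].startswith("[") or any(a.startswith("--from") for a in args):
                continue
            # Drop remaining flags such as --chown=...; the last path is the destination.
            paths = [a for a in args if not a.startswith("--")]
            for source in paths[:-1]:
                if source.startswith(("http://", "https://")):
                    continue
                # glob copes with wildcard sources (e.g. *.csproj) as well as literal paths.
                if not glob.glob(os.path.join(context_dir, source)):
                    missing.append(source)
    return missing
```

Running it with the compose file's context (for example `./VehicleRegistrationService`) and the matching Dockerfile should return an empty list once the context paths are correct.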

As soon as you save the changes to the docker-compose.yml file, you should see the 'Container Tools' Output window spring into life and attempt to rebuild the container images. You should eventually be greeted with the following messages indicating that the containers have been successfully built:

Creating network "dockercompose6632545919491133585_default" with the default driver

Creating Simulation ...

Creating FineCollectionService ...

Creating TrafficControlService ...

Creating VehicleRegistrationService ...

Creating Simulation ... done

Creating TrafficControlService ... done

Creating FineCollectionService ... done

Creating VehicleRegistrationService ... done

Done! Docker containers are ready.

We can now use Visual Studio's multiple project launch feature to start each of the services in their respective containers via the usual 'Start Debugging' or 'Start Without Debugging' options.

If things are set up correctly then you should see logging similar to that shown below in the 'Container Tools' Output window:

========== Launching ==========

docker ps --filter "status=running" --filter "label=com.docker.compose.service" --filter "name=^/FineCollectionService$" --format {{.ID}} -n 1

54c300e96af1

docker ps --filter "status=running" --filter "label=com.docker.compose.service" --filter "name=^/Simulation$" --format {{.ID}} -n 1

68bbaad8fc96

docker ps --filter "status=running" --filter "label=com.docker.compose.service" --filter "name=^/TrafficControlService$" --format {{.ID}} -n 1

a22b36d915ef

docker ps --filter "status=running" --filter "label=com.docker.compose.service" --filter "name=^/VehicleRegistrationService$" --format {{.ID}} -n 1

c47ea2a19475

docker exec -i -e ASPNETCORE_HTTPS_PORT="49155" 54c300e96af1 sh -c "cd "/app"; "dotnet" --additionalProbingPath /root/.nuget/packages "/app/bin/Debug/net6.0/FineCollectionService.dll" | tee /dev/console"

docker exec -i 68bbaad8fc96 sh -c "cd "/app"; "dotnet" --additionalProbingPath /root/.nuget/packages "/app/bin/Debug/net6.0/Simulation.dll" | tee /dev/console"

docker exec -i -e ASPNETCORE_HTTPS_PORT="49153" a22b36d915ef sh -c "cd "/app"; "dotnet" --additionalProbingPath /root/.nuget/packages "/app/bin/Debug/net6.0/TrafficControlService.dll" | tee /dev/console"

docker exec -i -e ASPNETCORE_HTTPS_PORT="49157" c47ea2a19475 sh -c "cd "/app"; "dotnet" --additionalProbingPath /root/.nuget/packages "/app/bin/Debug/net6.0/VehicleRegistrationService.dll" | tee /dev/console"

However, soon afterwards you will see Dapr-related errors from the 'Simulation' console application:

Camera 3 error: One or more errors occurred. (Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.) inner: Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.

Camera 1 error: One or more errors occurred. (Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.) inner: Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.

Camera 2 error: One or more errors occurred. (Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.) inner: Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.

Whilst we have the services running as containers, they no longer have access to (or indeed know anything about) the self-hosted Dapr runtime that we have been using in previous episodes.

Adding Dapr Runtime Support to Docker Compose

When running in self-hosted mode we relied on a number of shared services that were already containerised, either as part of the Dapr runtime environment or as ancillary services used by the application:

- Dapr Placement service - this is responsible for keeping track of Dapr Actors

- Dapr State Store - in this case a Redis instance

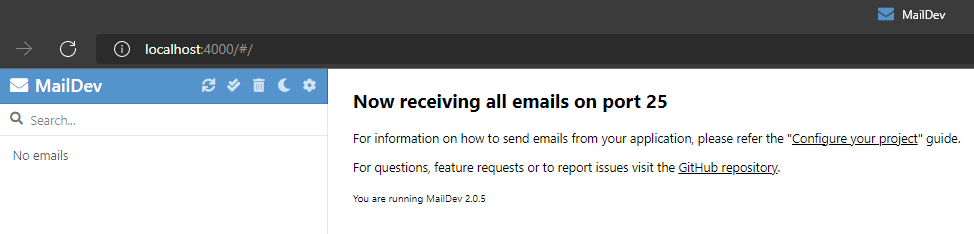

- Email server - using the maildev container

- Distributed tracing system - using Zipkin

Since the above are already containerised they can be easily added to our Docker Compose environment by adding the following services to docker-compose.yml:

dtc-redis:
  image: redis:6-alpine

dtc-maildev:
  image: maildev/maildev:latest
  environment:
    - MAILDEV_SMTP_PORT=25
    - MAILDEV_WEB_PORT=80
  ports:
    - "4000:80" # allows us to access the web console

dtc-zipkin:
  image: openzipkin/zipkin-slim
  ports:
    - "19411:9411" # allows us to access the web console

dapr-placement:
  image: "daprio/dapr"
  command: ["./placement", "-port", "50000"]

Adding Dapr Sidecar Support to Docker Compose

When running Dapr-aware services in a containerised environment the Dapr runtime is made available to each service via the 'Sidecar' pattern. Each service will require its own sidecar container with the following requirements:

- Accessible by its associated service via localhost

- Configuration details about its associated service (e.g. its unique application ID, the port it runs on, how to find the Dapr placement service etc.)

- Dapr component configuration

We can define these sidecars as additional services in the docker-compose.yml file, using the 'daprio/daprd' image and similar command-line arguments as used when launching the services in self-hosted mode.

trafficcontrolservice-dapr:
  image: "daprio/daprd:edge"
  command: [
    "./daprd",
    "-app-id", "trafficcontrolservice",
    "-app-port", "6000",
    "-placement-host-address", "dapr-placement:50000", # Dapr's placement service can be reached via the docker DNS entry
    "-dapr-http-port", "3600",
    "-dapr-grpc-port", "60000",
    "-components-path", "/components",
    "-config", "/config/config.yaml"]
  depends_on:
    - trafficcontrolservice
  network_mode: "service:trafficcontrolservice"

Whilst much of the above configuration is merely replicating what we had in self-hosted mode, the following elements warrant further discussion:

- -placement-host-address: Docker Compose will create a default network and connect all the services to it. This allows containers to address each other via their service name, and Docker will take care of resolving those names to the correct IP address on that network.

- A dependency on trafficcontrolservice ensures that Docker Compose starts the sidecar after its partner service (note that this only controls start order; it does not wait for the service to be ready).

- network_mode is the key to making this a sidecar: it attaches the container to the network context of its partner service, rather than treating it as a regular service that would otherwise be independently addressable. This is also what allows the service and its sidecar to reach each other via localhost.

- -components-path & -config: These options need to point to the Dapr component definition files and the main Dapr configuration file. This is our next challenge: how to make those configuration files available to the sidecar containers.

Whilst we could use a host-based volume to make our local files available to the containers, this feels like a bit of a cheat as it is not a solution that would support running this containerised deployment elsewhere, in the cloud for example. The goal of this containerisation work is to have a deployment configuration that we can use anywhere that supports running Docker Compose workloads.

So the question remains: how can we make these files available to all the containers that need them?

Sharing Configuration Files Across Containers

Let's discuss a couple of approaches we could take for achieving this:

- Include the required configuration files as part of the services' container image

- Include those files into a separate container that the other services can access

Option 1 is arguably the simplest, but the main downside in my opinion is that any configuration changes will require all service images to be rebuilt. It would also make it rather too easy for the services to be running with inconsistent configuration.

Option 2 sounds like an ideal solution, but how can we achieve that? The answer is to use containerised volume mounts.

Whilst most container images we build are designed to run a particular process it is not a requirement. In this case we will build a container that includes the required configuration files in its filesystem, but rather than executing a long-running process it will export parts of its filesystem as volumes. These volumes will then be addressable by our other services, even though the underlying container will not be in a 'running' state.

These are often referred to as 'volume-only' containers.

To do this we will write a new Dockerfile that will reside in the dapr folder that contains the files we need:

FROM tianon/true

COPY components/email.yaml.compose /components/email.yaml

COPY components/statestore.yaml.compose /components/statestore.yaml

COPY components/secrets-file.yaml.compose /components/secrets-file.yaml

COPY components/entrycam.yaml /components

COPY components/exitcam.yaml /components

COPY components/pubsub.yaml /components

COPY components/secrets-envvars.yaml /components

COPY components/secrets-keyvault.yaml /components

COPY components/secrets.json /components

COPY config/config.yaml.compose /config/config.yaml

COPY config/ratelimit-config.yaml.compose /config/ratelimit-config.yaml

VOLUME /components

VOLUME /config

- The tianon/true base image contains a tiny program that simply terminates with a success exit code, which is perfect for our needs

- We copy the files we need into two directories, /components and /config, to keep the two distinct sets of configuration separate

- Finally we use the VOLUME instruction to expose those parts of the image's filesystem as volumes that will be addressable by other services

You may have noticed that some of the files we're referencing don't exist yet and have a .compose extension; these new files will contain the different hostnames associated with the services when running in containers. The .compose extension ensures that these additions do not break things when running in self-hosted mode: in that scenario the Dapr runtime attempts to load every .yaml file it finds in the components folder, which would otherwise result in duplicate component definitions.

Note how we use the COPY commands in the Dockerfile to rename the .compose files back to the required .yaml extension.
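The renaming convention boils down to a simple rule: a file ending in `.compose` is copied under its name minus that suffix, and its self-hosted counterpart is left out of the image. A minimal sketch of that rule, which could be used to regenerate the `COPY` lines if more components are added later; the function name `compose_copy_lines` is hypothetical and the paths mirror the Dockerfile above (relative to `src/dapr`).

```python
COMPOSE_SUFFIX = ".compose"


def compose_copy_lines(files: list[str]) -> list[str]:
    """Map files relative to src/dapr (e.g. 'components/email.yaml.compose') to COPY lines.

    - 'x.yaml.compose' is copied to '/<dir>/x.yaml' (the container variant wins)
    - 'x.yaml' is skipped when 'x.yaml.compose' also exists (self-hosted variant)
    - anything else is copied unchanged
    """
    available = set(files)
    lines = []
    for path in sorted(files):
        directory, _, name = path.rpartition("/")
        if name.endswith(COMPOSE_SUFFIX):
            target = name[: -len(COMPOSE_SUFFIX)]
        elif path + COMPOSE_SUFFIX in available:
            continue  # replaced by its .compose counterpart
        else:
            target = name
        dest = f"/{directory}/{target}" if directory else f"/{target}"
        lines.append(f"COPY {path} {dest}")
    return lines
```

Spelling out each destination file name also avoids depending on `/components` already existing as a directory by the time the later `COPY` lines run.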

Updating Dapr Component Configuration Files

There are 3 component definition files that need to be updated, so we start by making copies of them:

- src/dapr/components/email.yaml --> src/dapr/components/email.yaml.compose

- src/dapr/components/statestore.yaml --> src/dapr/components/statestore.yaml.compose

- src/dapr/components/secrets-file.yaml --> src/dapr/components/secrets-file.yaml.compose

The email configuration must reference the correct host and port details used by the container:

...
spec:
  type: bindings.smtp
  version: v1
  metadata:
  - name: host
    value: dtc-maildev # the name of the Docker Compose service running the 'maildev' container
  - name: port
    value: 25
...

Similarly the state store configuration needs the host details updated so it resolves to the Redis service in the compose file:

...
spec:
  type: state.redis
  version: v1
  metadata:
  - name: redisHost
    value: dtc-redis:6379 # the name of the Docker Compose service running the 'redis' container
...

You may recall that whilst we moved some secrets to Key Vault in episode 2 there are still some secrets being referenced from the original secrets.json file. This file is referenced by the secrets-file.yaml component definition using a relative path, which will not be valid when using our 'volume-only' container; therefore we need to update the secretsFile property in this component definition:

...
spec:
  type: secretstores.local.file
  version: v1
  metadata:
  - name: secretsFile
    value: /components/secrets.json
...

Updating Dapr Runtime Configuration Files

There is also a single configuration file that specifies settings used by the Dapr runtime; in this sample it is used to configure the distributed tracing by telling the runtime how to connect to the Zipkin service used for this purpose. Once again the hostname details for this service are different when running in our Docker Compose configuration.

Start by creating a copy of the configuration file:

src/dapr/config/config.yaml --> src/dapr/config/config.yaml.compose

Then update the endpointAddress property in the new file so that it references the hostname used by the Zipkin service defined in our Docker Compose file:

...
spec:
  tracing:
    samplingRate: "1"
    zipkin:
      endpointAddress: "http://dtc-zipkin:9411/api/v2/spans"
...

We now have all the configuration files needed by the new Dockerfile, so we can add this 'volume-only' container as a new service to our docker-compose.yml file:

services:
  ...
  dapr-config:
    build:
      context: ./dapr
  ...

Similar to the application services we added at the start, this entry will build the Dockerfile in the src/dapr folder and start an instance of it along with all the other services - the difference here being that the container instance will terminate immediately.

You might be wondering how this service will be of any use given that it terminates as soon as it starts?

Using Docker Compose to Share Volumes Between Containers

The answer to this question is the use of the volumes_from property - this allows volumes defined in one service to be mounted into another.

The current version of the Docker Compose file format (v3.9 at the time of writing) makes little mention of the volumes_from syntax; however, it still works, and I've been unable to replicate this use-case with the named volume syntax covered in the latest documentation.

The configuration files we copied into the dapr-config container above are required by the Dapr sidecars, however, it's easier to demonstrate how volumes_from works using one of our application containers. (This is because the container image used by the sidecars has been optimised for size and as such does not contain an interactive shell).

First let's take a look at the filesystem of the Simulation service - Docker Compose allows us to launch a subset of the specified services:

PS:> docker-compose up -d simulation

Creating network "src_default" with the default driver

Creating src_simulation_1 ... done

Next we connect to the running simulation container, launch a shell and run the ls command to get a directory listing:

PS:> docker exec -it src_simulation_1 /bin/sh

# ls -l

total 2836

drwxr-xr-x 1 root root 4096 Oct 23 22:30 .

drwxr-xr-x 1 root root 4096 Oct 23 22:30 ..

-rwxr-xr-x 1 root root 0 Oct 23 22:30 .dockerenv

-rwxr--r-- 1 root root 283136 Jul 7 22:41 Dapr.Client.dll

-rwxr--r-- 1 root root 306688 Sep 23 2020 Google.Api.CommonProtos.dll

-rwxr--r-- 1 root root 390248 Feb 18 2021 Google.Protobuf.dll

-rwxr--r-- 1 root root 52720 Sep 10 2020 Grpc.Core.Api.dll

-rwxr--r-- 1 root root 95216 Oct 5 2020 Grpc.Net.Client.dll

-rwxr--r-- 1 root root 18416 Oct 5 2020 Grpc.Net.Common.dll

-rwxr--r-- 1 root root 48720 Jan 22 2020 Microsoft.Extensions.Logging.Abstractions.dll

-rwxr-xr-x 1 root root 142840 Oct 23 15:43 Simulation

-rw-r--r-- 1 root root 18473 Oct 23 15:43 Simulation.deps.json

-rw-r--r-- 1 root root 15872 Oct 23 15:43 Simulation.dll

-rw-r--r-- 1 root root 13180 Oct 23 15:43 Simulation.pdb

-rw-r--r-- 1 root root 242 Oct 23 15:43 Simulation.runtimeconfig.json

-rwxr--r-- 1 root root 16896 Mar 14 2017 System.Diagnostics.Tracer.dll

-rwxr--r-- 1 root root 144760 Apr 28 2021 System.Net.Mqtt.dll

-rwxr--r-- 1 root root 1253328 Dec 24 2019 System.Reactive.dll

drwxr-xr-x 2 root root 4096 Oct 4 00:00 bin

drwxr-xr-x 2 root root 4096 Sep 3 12:10 boot

drwxr-xr-x 5 root root 340 Oct 23 22:30 dev

drwxr-xr-x 1 root root 4096 Oct 23 22:30 etc

drwxr-xr-x 2 root root 4096 Sep 3 12:10 home

drwxr-xr-x 8 root root 4096 Oct 4 00:00 lib

drwxr-xr-x 2 root root 4096 Oct 4 00:00 lib64

drwxr-xr-x 2 root root 4096 Oct 4 00:00 media

drwxr-xr-x 2 root root 4096 Oct 4 00:00 mnt

drwxr-xr-x 2 root root 4096 Oct 4 00:00 opt

dr-xr-xr-x 264 root root 0 Oct 23 22:30 proc

drwx------ 2 root root 4096 Oct 4 00:00 root

drwxr-xr-x 3 root root 4096 Oct 4 00:00 run

drwxr-xr-x 2 root root 4096 Oct 4 00:00 sbin

drwxr-xr-x 2 root root 4096 Oct 4 00:00 srv

dr-xr-xr-x 11 root root 0 Oct 23 22:30 sys

drwxrwxrwt 1 root root 4096 Oct 23 22:30 tmp

drwxr-xr-x 1 root root 4096 Oct 4 00:00 usr

drwxr-xr-x 1 root root 4096 Oct 4 00:00 var

We can see the DLLs that the application requires to run, but there are no config or components directories. Type exit to close the interactive session.

Next we make a temporary change to the simulation service in the compose file and add the volumes_from configuration:

simulation:
  image: ${DOCKER_REGISTRY-}simulation
  build:
    context: ./Simulation
    dockerfile: dockerfile
  volumes_from:
    - dapr-config

If we repeat the steps above we should see the Simulation container recreated due to its definition having been updated:

PS:> docker-compose up -d simulation

Creating src_dapr-config_1 ... done

Recreating src_simulation_1 ... done

In addition to the simulation container we also see that Docker Compose started the dapr-config service, as it was able to infer the dependency from the volumes_from property.
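The ordering Docker Compose derives here can be pictured as a topological sort over a dependency graph in which both `depends_on` and `volumes_from` contribute edges. The following sketch models services as plain dictionaries (an assumption: it does not parse the real compose file, it assumes the short list form of `depends_on`, and Compose itself starts independent services in parallel) and uses Python's standard `graphlib` module, available from Python 3.9. The function name `start_order` is hypothetical.

```python
from graphlib import TopologicalSorter


def start_order(services: dict[str, dict]) -> list[str]:
    """Return one valid start order, treating depends_on and volumes_from as dependencies."""
    graph: dict[str, set[str]] = {}
    for name, spec in services.items():
        deps = set(spec.get("depends_on", []))
        for source in spec.get("volumes_from", []):
            if source.startswith("container:"):
                continue  # refers to a container outside this Compose project
            # Strip an optional access-mode suffix such as 'dapr-config:ro'.
            deps.add(source.split(":")[0])
        graph[name] = deps
    return list(TopologicalSorter(graph).static_order())
```

For instance, with `{"simulation": {"volumes_from": ["dapr-config"]}, "dapr-config": {}}` the returned order places `dapr-config` before `simulation`, matching what we saw above.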

Connecting back into this new instance of the simulation container, we should be able to see that the configuration folders & files defined in the dapr-config container are now available in the simulation container:

PS:> docker exec -it src_simulation_1 /bin/sh

# ls -la /config

total 16

drwxr-xr-x 2 root root 4096 Oct 23 22:39 .

drwxr-xr-x 1 root root 4096 Oct 23 22:39 ..

-rwxr-xr-x 1 root root 197 Oct 22 2021 config.yaml

# ls -la /components

total 44

drwxr-xr-x 2 root root 4096 Oct 23 22:39 .

drwxr-xr-x 1 root root 4096 Oct 23 22:39 ..

-rwxr-xr-x 1 root root 535 Sep 1 10:54 email.yaml

-rwxr-xr-x 1 root root 459 Sep 1 11:01 entrycam.yaml

-rwxr-xr-x 1 root root 457 Sep 1 11:02 exitcam.yaml

-rwxr-xr-x 1 root root 374 May 13 07:17 pubsub.yaml

-rwxr-xr-x 1 root root 275 Jul 21 22:47 secrets-envvars.yaml

-rwxr-xr-x 1 root root 358 Sep 1 16:34 secrets-file.yaml

-rwxr-xr-x 1 root root 666 Sep 1 16:39 secrets-keyvault.yaml

-rwxr-xr-x 1 root root 221 Dec 20 2021 secrets.json

Now we understand the behaviour of volumes_from, it should be clear how we will use this to make the Dapr configuration files available to each of the services' Dapr sidecars.

First though we should revert the changes we made to the simulation service by removing the volumes_from configuration:

simulation:
  image: ${DOCKER_REGISTRY-}simulation
  build:
    context: ./Simulation
    dockerfile: dockerfile

Note that the volumes are genuinely shared between the containers that mount them, rather than copied into each one. For example, if the Simulation container made a change in the /config directory, that change would be written to the shared volume and would be visible to every other container using volumes_from with dapr-config, not just the Simulation container. If you want to stop a container from modifying the shared configuration, volumes_from supports an access-mode suffix (for example - dapr-config:ro) that mounts the volumes read-only.

How to Set Up the Dapr Sidecars with Docker Compose

As mentioned above each of the services that rely on Dapr need to have their own sidecar container that can act on their behalf in the wider Dapr fabric - in our case, thanks to our changes to the Simulation app in the last episode, this means all 4 services will need a sidecar.

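Now that we have a solution for making the Dapr configuration files available to our sidecar containers, we need to add the rest of the sidecars to docker-compose.yml. The trafficcontrolservice-dapr sidecar we defined earlier also needs the same volumes_from entry (- dapr-config) so that it can find its component and configuration files: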

vehicleregistrationservice-dapr:
  image: "daprio/daprd:edge"
  command: [
    "./daprd",
    "-app-id", "vehicleregistrationservice",
    "-app-port", "6002",
    "-placement-host-address", "dapr-placement:50000",
    "-dapr-http-port", "3602",
    "-dapr-grpc-port", "60002",
    "-components-path", "/components",
    "-config", "/config/config.yaml"]
  volumes_from:
    - dapr-config
  depends_on:
    - vehicleregistrationservice
  network_mode: "service:vehicleregistrationservice"

finecollectionservice-dapr:
  image: "daprio/daprd:edge"
  command: [
    "./daprd",
    "-app-id", "finecollectionservice",
    "-app-port", "6001",
    "-placement-host-address", "dapr-placement:50000",
    "-dapr-http-port", "3601",
    "-dapr-grpc-port", "60001",
    "-components-path", "/components",
    "-config", "/config/config.yaml"]
  volumes_from:
    - dapr-config
  depends_on:
    - finecollectionservice
  network_mode: "service:finecollectionservice"

simulation-dapr:
  image: "daprio/daprd:edge"
  command: [
    "./daprd",
    "-app-id", "simulation",
    "-placement-host-address", "dapr-placement:50000",
    "-dapr-http-port", "3603",
    "-dapr-grpc-port", "60003",
    "-components-path", "/components",
    "-config", "/config/config.yaml"]
  volumes_from:
    - dapr-config
  depends_on:
    - simulation
  network_mode: "service:simulation"
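Apart from the app ID and port numbers, the four sidecar definitions are identical, which makes them easy to get subtly wrong when copying and pasting (for example, forgetting `volumes_from`). A hedged sketch of generating them in Python and rendering them as JSON for comparison with the hand-written YAML; the function names `sidecar` and `render` are hypothetical, it assumes each Compose service name equals its Dapr app ID (as in this sample), and the port numbers are the ones used above (the Simulation console app has no `-app-port`).

```python
import json
from typing import Optional


def sidecar(app_id: str, http_port: int, grpc_port: int, app_port: Optional[int] = None) -> dict:
    """Build a daprd sidecar service definition mirroring the docker-compose.yml entries."""
    command = ["./daprd", "-app-id", app_id]
    if app_port is not None:
        command += ["-app-port", str(app_port)]
    command += [
        "-placement-host-address", "dapr-placement:50000",
        "-dapr-http-port", str(http_port),
        "-dapr-grpc-port", str(grpc_port),
        "-components-path", "/components",
        "-config", "/config/config.yaml",
    ]
    return {
        "image": "daprio/daprd:edge",
        "command": command,
        "volumes_from": ["dapr-config"],
        "depends_on": [app_id],
        # Share the partner service's network context so the pair can talk via localhost.
        "network_mode": f"service:{app_id}",
    }


SIDECARS = {
    f"{app_id}-dapr": sidecar(app_id, http, grpc, app)
    for app_id, app, http, grpc in [
        ("trafficcontrolservice", 6000, 3600, 60000),
        ("finecollectionservice", 6001, 3601, 60001),
        ("vehicleregistrationservice", 6002, 3602, 60002),
        ("simulation", None, 3603, 60003),
    ]
}


def render() -> str:
    """Serialise the generated sidecars for side-by-side comparison with the compose file."""
    return json.dumps(SIDECARS, indent=2)
```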

With those additions to the docker-compose.yml file, we can reset & relaunch Docker Compose:

PS:> docker-compose down

Stopping src_simulation_1 ... done

Stopping src_vehicleregistrationservice_1 ... done

Stopping src_finecollectionservice_1 ... done

Stopping src_dtc-redis_1 ... done

Stopping src_dtc-zipkin_1 ... done

Stopping src_dapr-placement_1 ... done

Stopping src_dtc-maildev_1 ... done

Removing network src_default

PS:> docker-compose up -d

Creating network "src_default" with the default driver

Creating src_simulation_1 ... done

Creating src_vehicleregistrationservice_1 ... done

Creating src_dtc-maildev_1 ... done

Creating src_dtc-redis_1 ... done

Creating src_trafficcontrolservice_1 ... done

Creating src_finecollectionservice_1 ... done

Creating src_dapr-placement_1 ... done

Creating src_dtc-zipkin_1 ... done

Creating src_dapr-config_1 ... done

Creating src_simulation-dapr_1 ... done

Creating src_vehicleregistrationservice-dapr_1 ... done

Creating src_finecollectionservice-dapr_1 ... done

Creating src_trafficcontrolservice-dapr_1 ... done

We still haven't quite replicated everything that we set up for our self-hosted scenario, so let's check the logs of the Simulation app to see how happy it is:

PS:> docker logs src_simulation_1

...

Camera 3 error: One or more errors occurred. (Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.) inner: Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.

Camera 1 error: One or more errors occurred. (Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.) inner: Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.

Camera 2 error: One or more errors occurred. (Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.) inner: Binding operation failed: the Dapr endpoint indicated a failure. See InnerException for details.

...

This confirms that the Dapr configuration is still not quite right - looking at the sidecar logs ought to shed more light on the problem:

PS:> docker logs src_simulation-dapr_1

time="2022-10-23T23:41:06.36416533Z" level=info msg="starting Dapr Runtime -- version edge -- commit ac8e621bfd17ec1eae41b81c3d6bec76c84831d9" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:41:06.365120101Z" level=info msg="log level set to: info" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:41:06.368351664Z" level=info msg="metrics server started on :9090/" app_id=simulation instance=83401ec73265 scope=dapr.metrics type=log ver=edge

time="2022-10-23T23:41:06.377062959Z" level=info msg="standalone mode configured" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:41:06.379451909Z" level=info msg="app id: simulation" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:41:06.3805338Z" level=info msg="mTLS is disabled. Skipping certificate request and tls validation" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:41:06.415200246Z" level=info msg="local service entry announced: simulation -> 172.22.0.2:45121" app_id=simulation instance=83401ec73265 scope=dapr.contrib type=log ver=edge

time="2022-10-23T23:41:06.415759528Z" level=info msg="Initialized name resolution to mdns" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:41:06.416077599Z" level=info msg="loading components" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:41:06.424146759Z" level=warning msg="A non-YAML Component file secrets.json was detected, it will not be loaded" app_id=simulation instance=83401ec73265 scope=dapr.runtime.components type=log ver=edge

time="2022-10-23T23:41:06.441586583Z" level=warning msg="component entrycam references a secret store that isn't loaded: trafficcontrol-secrets-kv" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:41:06.442463653Z" level=warning msg="component exitcam references a secret store that isn't loaded: trafficcontrol-secrets-kv" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:41:06.442943095Z" level=info msg="component loaded. name: trafficcontrol-secrets-envvar, type: secretstores.local.env/v1" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:41:06.45119282Z" level=info msg="waiting for all outstanding components to be processed" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:41:06.452273253Z" level=info msg="component loaded. name: trafficcontrol-secrets-kv, type: secretstores.azure.keyvault/v1" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:45:56.886434475Z" level=error msg="error getting secret: ChainedTokenCredential: failed to acquire a token.\nAttempted credentials:\n\tManagedIdentityCredential: Get \"http://169.254.169.254/metadata/identity/oauth2/token?api-version=2018-02-01&resource=https%3A%2F%2Fvault.azure.net\": dial tcp 169.254.169.254:80: i/o timeout" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:46:01.887700946Z" level=warning msg="error processing component, daprd process will exit gracefully" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:46:01.887775352Z" level=info msg="dapr shutting down." app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:46:01.887790045Z" level=info msg="Stopping PubSub subscribers and input bindings" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:46:01.887801942Z" level=info msg="Stopping Dapr APIs" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:46:01.887821403Z" level=info msg="Waiting 5s to finish outstanding operations" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:46:06.888776042Z" level=info msg="Shutting down all remaining components" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

time="2022-10-23T23:46:06.88891572Z" level=fatal msg="process component trafficcontrol-secrets-kv error: [INIT_COMPONENT_FAILURE]: initialization error occurred for entrycam (bindings.azure.storagequeues/v1): init timeout for component entrycam exceeded after 5s" app_id=simulation instance=83401ec73265 scope=dapr.runtime type=log ver=edge

There are quite a few warnings in the above, but let's start with the level=fatal message and work back from there:

process component trafficcontrol-secrets-kv error: [INIT_COMPONENT_FAILURE]: initialization error occurred for entrycam (bindings.azure.storagequeues/v1): init timeout for component entrycam exceeded after 5s

Searching the logs for other references to the trafficcontrol-secrets-kv component we find:

component loaded. name: trafficcontrol-secrets-kv, type: secretstores.azure.keyvault/v1

Finally, looking for other Key Vault-related messages, we find:

error getting secret: ChainedTokenCredential: failed to acquire a token.\nAttempted credentials:\n\tManagedIdentityCredential: Get \"http://169.254.169.254/metadata/identity/oauth2/token?api-version=2018-02-01&resource=https%3A%2F%2Fvault.azure.net\": dial tcp 169.254.169.254:80: i/o timeout
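Sifting through output like this by eye is tedious. As a rough aid, here is a minimal Python sketch that parses Dapr's logfmt-style lines (`key=value` or `key="quoted value"`) and filters them by level or by message text, mirroring the search we just did by hand. The helper names `parse_logfmt` and `find_entries` are hypothetical. The parser only handles the quoting seen in the log above and is not a complete logfmt implementation.

```python
import re

# key=value pairs; values are either bare tokens or double-quoted strings
# that may contain backslash escapes such as \" (as in the Dapr log above).
PAIR = re.compile(r'(\w+)=("(?:[^"\\]|\\.)*"|\S+)')


def parse_logfmt(line):
    """Parse one logfmt line into a dict, stripping the surrounding quotes."""
    fields = {}
    for key, value in PAIR.findall(line):
        if value.startswith('"') and value.endswith('"'):
            # Drop the quotes and unescape \" ; other escapes (\n, \t) stay literal.
            value = value[1:-1].replace('\\"', '"')
        fields[key] = value
    return fields


def find_entries(lines, level=None, contains=None):
    """Yield parsed entries matching an optional level and message substring."""
    for line in lines:
        entry = parse_logfmt(line)
        if not entry:
            continue  # skip blank or non-logfmt lines
        if level and entry.get("level") != level:
            continue
        if contains and contains not in entry.get("msg", ""):
            continue
        yield entry
```

For example, you could save a sidecar's output to a file with `docker logs src_simulation-dapr_1 > simulation-dapr.log 2>&1`. The file name is arbitrary. Iterating `find_entries(open("simulation-dapr.log"), level="fatal")` would then surface the fatal message directly.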

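From these messages we can determine that there is an issue authenticating to the Key Vault. The only credential attempted was ManagedIdentityCredential, which suggests that no client credentials reached the component. Managed identity relies on the Azure Instance Metadata Service endpoint (169.254.169.254), which isn't reachable from a local container, hence the timeout. Recall from Episode 2 that we moved some secrets from the JSON file into Azure Key Vault and configured the Dapr component in secrets-keyvault.yaml to expect Azure credentials to be available via environment variables: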

...
  - name: azureTenantId
    secretKeyRef:
      name: AZURE_TENANT_ID
  - name: azureClientId
    secretKeyRef:
      name: AZURE_CLIENT_ID
  - name: azureClientSecret
    secretKeyRef:
      name: AZURE_CLIENT_SECRET
auth:
  secretStore: trafficcontrol-secrets-envvar
...
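To make the indirection in this fragment concrete, the following Python sketch models how each `secretKeyRef.name` is resolved through the `trafficcontrol-secrets-envvar` store. That store reads environment variables from the sidecar's own process. This is a model of the idea, not Dapr's implementation, and the metadata list and `resolve_metadata` helper are hypothetical. The point it illustrates is that a variable left unset inside the container leaves the Key Vault component without client credentials. That would be consistent with the managed identity fallback seen in the log.

```python
import os

# Hypothetical, simplified model of the component fragment above: each
# metadata entry names the environment variable that holds its value.
KEYVAULT_METADATA = [
    {"name": "azureTenantId", "secretKeyRef": {"name": "AZURE_TENANT_ID"}},
    {"name": "azureClientId", "secretKeyRef": {"name": "AZURE_CLIENT_ID"}},
    {"name": "azureClientSecret", "secretKeyRef": {"name": "AZURE_CLIENT_SECRET"}},
]


def resolve_metadata(metadata, env=os.environ):
    """Return (resolved values, env var names that are unset or empty)."""
    resolved, missing = {}, []
    for item in metadata:
        var = item["secretKeyRef"]["name"]
        value = env.get(var)
        if value:
            resolved[item["name"]] = value
        else:
            missing.append(var)
    return resolved, missing
```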

Whilst we have all the component configuration details in place, thus far we've made no reference to these environment variables in the docker-compose.yml file - let's fix that now.

Using Environment Variables with Docker Compose

Docker Compose files can contain references to environment variables. When docker-compose up runs, it fills these in from the shell environment of the machine it is running on. This allows us to mimic the way the environment variables were used in self-hosted mode.

The following fragment ensures the required environment variables are defined inside the container, using the values from the environment variables set on our local machine:

environment:
  - AZURE_TENANT_ID=${AZURE_TENANT_ID}
  - AZURE_CLIENT_ID=${AZURE_CLIENT_ID}
  - AZURE_CLIENT_SECRET=${AZURE_CLIENT_SECRET}
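Compose's interpolation can be approximated in a few lines of Python. This helps when reasoning about what a container will actually receive. The sketch handles only the `${NAME}` form used in the fragment above. Compose also supports `$NAME`, `$$` escaping and defaults such as `${NAME:-default}`. Like Compose, the sketch substitutes an empty string for an unset variable. Unlike Compose, it returns the missing names instead of printing a warning. `interpolate` is a hypothetical helper.

```python
import os
import re

# Simplified: only the ${NAME} form is recognised.
VAR = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def interpolate(text, env=os.environ):
    """Substitute ${NAME} from env; unset names become "" and are reported."""
    missing = []

    def repl(match):
        name = match.group(1)
        if name not in env:
            missing.append(name)
            return ""  # Compose also defaults an unset variable to a blank string
        return env[name]

    return VAR.sub(repl, text), missing
```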

We will need to add those environment variables to the following services:

- trafficcontrolservice-dapr

- finecollectionservice-dapr

- simulation-dapr
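If you prefer to script the change, here is a hedged sketch that adds the three entries to each sidecar service. It treats the compose file as a plain Python dict, as a YAML loader would return it, so that no extra packages are needed. `add_azure_env` is a hypothetical helper. It assumes the list form of `environment` used above, not the mapping form that Compose also accepts.

```python
# Hypothetical helper operating on the compose file modelled as a plain dict.
AZURE_ENV = [
    "AZURE_TENANT_ID=${AZURE_TENANT_ID}",
    "AZURE_CLIENT_ID=${AZURE_CLIENT_ID}",
    "AZURE_CLIENT_SECRET=${AZURE_CLIENT_SECRET}",
]
SIDECARS = ["trafficcontrolservice-dapr", "finecollectionservice-dapr", "simulation-dapr"]


def add_azure_env(compose, services=SIDECARS, entries=AZURE_ENV):
    """Append any missing entries to each listed service's list-style environment."""
    for name in services:
        env = compose["services"][name].setdefault("environment", [])
        for entry in entries:
            if entry not in env:  # keep the edit idempotent
                env.append(entry)
    return compose
```

In practice you would load and save docker-compose.yml with a YAML library such as PyYAML. Be aware that a round trip through PyYAML drops comments.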

Note how it's the Dapr sidecar containers that need the configuration, rather than the applications themselves.

With those final changes to the docker-compose.yml file, let's try testing it outside of Visual Studio.

Running Docker Compose

Before starting Docker Compose, we need to ensure that the environment variables required by docker-compose.yml are set up correctly in our terminal session. To do this we can use the same script we used when running in self-hosted mode. It takes care of creating an Azure Active Directory Service Principal, or of rotating its secret if it already exists.

For this episode, the script has been updated to improve the secret rotation logic. It now also provides an option to skip the ARM deployment:

PS:> ./src/bicep/deploy.ps1 -ResourcePrefix <prefix> -Location <location> -SkipProvision

When the above completes, the required environment variables will have been set, which we can double-check as follows:

PS:> gci env:/AZURE_*

Name                   Value
----                   -----
AZURE_CLIENT_ID        <some-guid>
AZURE_CLIENT_OBJECTID  <some-guid>
AZURE_CLIENT_SECRET    <some-strong-password>
AZURE_TENANT_ID        <some-guid>
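An empty or mangled value only surfaces later, as an authentication failure inside a sidecar. A quick pre-flight check before `docker-compose up` can therefore save a debugging round trip. This Python sketch assumes the tenant and client IDs are GUIDs, as in the listing above, and that the secret merely needs to be non-empty. `check_azure_env` is a hypothetical helper. AZURE_CLIENT_OBJECTID is not checked because docker-compose.yml does not reference it.

```python
import os
import uuid

REQUIRED_GUIDS = ["AZURE_TENANT_ID", "AZURE_CLIENT_ID"]
REQUIRED_SECRETS = ["AZURE_CLIENT_SECRET"]


def check_azure_env(env=os.environ):
    """Return a list of problems; an empty list means the variables look usable."""
    problems = []
    for name in REQUIRED_GUIDS:
        try:
            uuid.UUID(env.get(name, ""))  # raises ValueError for "" or non-GUIDs
        except ValueError:
            problems.append(f"{name} is missing or not a GUID")
    for name in REQUIRED_SECRETS:
        if not env.get(name):
            problems.append(f"{name} is missing or empty")
    return problems
```

A wrapper script could call `check_azure_env()` and refuse to continue unless it returns an empty list.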

Now we're ready to launch Docker Compose:

PS:> cd src

PS:> docker-compose up -d

Creating network "src_default" with the default driver

Creating src_simulation_1 ... done

Creating src_finecollectionservice_1 ... done

Creating src_dtc-redis_1 ... done

Creating src_dapr-placement_1 ... done

Creating src_dapr-config_1 ... done

Creating src_dtc-zipkin_1 ... done

Creating src_dtc-maildev_1 ... done

Creating src_vehicleregistrationservice_1 ... done

Creating src_trafficcontrolservice_1 ... done

Creating src_simulation-dapr_1 ... done

Creating src_finecollectionservice-dapr_1 ... done

Creating src_trafficcontrolservice-dapr_1 ... done

Creating src_vehicleregistrationservice-dapr_1 ... done

If everything is working correctly, we should see emails start arriving in the mail server's web UI.

However, we don't, so what's wrong? We'll start by reviewing the logs of each service to isolate where the problem is.

First up is the simulation app:

PS:> docker logs src_simulation_1

Start camera 1 simulation.

Start camera 2 simulation.

Start camera 3 simulation.

Simulated ENTRY of vehicle with license-number XK-411-P in lane 3

Simulated ENTRY of vehicle with license-number LK-48-GX in lane 1

Simulated ENTRY of vehicle with license-number 7-LHX-06 in lane 2

Simulated EXIT of vehicle with license-number LK-48-GX in lane 3

Simulated ENTRY of vehicle with license-number KL-809-L in lane 1

Simulated EXIT of vehicle with license-number XK-411-P in lane 1

Simulated ENTRY of vehicle with license-number 47-HJS-2 in lane 3

...

This looks healthy.

Sometimes you may see some errors at the start before the entry & exit messages start flowing.

Next up is the traffic control service:

PS:> docker logs src_trafficcontrolservice_1

info: TrafficControlService.Controllers.TrafficController[0]

ENTRY detected in lane 3 at 11:59:59 of vehicle with license-number XK-411-P.

info: TrafficControlService.Controllers.TrafficController[0]

ENTRY detected in lane 1 at 12:00:00 of vehicle with license-number LK-48-GX.

info: TrafficControlService.Controllers.TrafficController[0]

EXIT detected in lane 1 at 12:00:08 of vehicle with license-number XK-411-P.

info: TrafficControlService.Controllers.TrafficController[0]

ENTRY detected in lane 2 at 12:00:01 of vehicle with license-number 7-LHX-06.

info: TrafficControlService.Controllers.TrafficController[0]

ENTRY detected in lane 1 at 12:00:07 of vehicle with license-number KL-809-L.

info: TrafficControlService.Controllers.TrafficController[0]

EXIT detected in lane 3 at 12:00:10 of vehicle with license-number 7-LHX-06.

info: TrafficControlService.Controllers.TrafficController[0]

EXIT detected in lane 1 at 12:00:13 of vehicle with license-number KL-809-L.

info: TrafficControlService.Controllers.TrafficController[0]

ENTRY detected in lane 3 at 12:00:10 of vehicle with license-number 47-HJS-2.

We should be able to see the matching license numbers as the entry & exit messages are processed. We should also occasionally see some messages to signify catching a speeding car, for example:

Speeding violation detected (22 KMh) of vehicle with license-number 4-KXD-04.

If you see such messages being logged and no errors, we can assume that the traffic control service is happy and move on to the next step.
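A quick way to confirm that entries and exits really are being matched up is to pair the log lines by license number yourself. This Python sketch assumes the exact message format shown in the traffic control log above. `pair_events` is a hypothetical helper, and it ignores vehicles that have entered but not yet exited.

```python
import re

# Matches messages such as:
# "ENTRY detected in lane 3 at 11:59:59 of vehicle with license-number XK-411-P."
EVENT = re.compile(
    r"(?P<kind>ENTRY|EXIT) detected in lane (?P<lane>\d+) at (?P<time>[\d:]+) "
    r"of vehicle with license-number (?P<plate>[A-Z0-9-]+)\."
)


def pair_events(lines):
    """Return {plate: (entry_time, exit_time)} for vehicles seen entering and exiting."""
    entries, pairs = {}, {}
    for line in lines:
        m = EVENT.search(line)
        if not m:
            continue  # skip the "info: ..." category lines
        if m["kind"] == "ENTRY":
            entries[m["plate"]] = m["time"]
        elif m["plate"] in entries:
            pairs[m["plate"]] = (entries.pop(m["plate"]), m["time"])
    return pairs
```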

With speeding tickets being issued, we should see requests being processed by the fine collection service:

PS:> docker logs src_finecollectionservice_1

fail: Microsoft.AspNetCore.Diagnostics.DeveloperExceptionPageMiddleware[1]

An unhandled exception has occurred while executing the request.

System.AggregateException: One or more errors occurred. (Secret operation failed: the Dapr endpoint indicated a failure. See InnerException for details.)

---> Dapr.DaprException: Secret operation failed: the Dapr endpoint indicated a failure. See InnerException for details.

---> Grpc.Core.RpcException: Status(StatusCode="InvalidArgument", Detail="failed finding secret store with key kubernetes")

at Dapr.Client.DaprClientGrpc.GetSecretAsync(String storeName, String key, IReadOnlyDictionary`2 metadata, CancellationToken cancellationToken)

--- End of inner exception stack trace ---

at Dapr.Client.DaprClientGrpc.GetSecretAsync(String storeName, String key, IReadOnlyDictionary`2 metadata, CancellationToken cancellationToken)

--- End of inner exception stack trace ---

at System.Threading.Tasks.Task.ThrowIfExceptional(Boolean includeTaskCanceledExceptions)

at System.Threading.Tasks.Task`1.GetResultCore(Boolean waitCompletionNotification)

at System.Threading.Tasks.Task`1.get_Result()

at FineCollectionService.Controllers.CollectionController..ctor(ILogger`1 logger, IFineCalculator fineCalculator, VehicleRegistrationService vehicleRegistrationService, DaprClient daprClient) in /pp/Controllers/CollectionController.cs:line 27

at lambda_method8(Closure , IServiceProvider , Object[] )

at Microsoft.AspNetCore.Mvc.Controllers.ControllerActivatorProvider.<>c__DisplayClass7_0.<CreateActivator>b__0(ControllerContext controllerContext)

at Microsoft.AspNetCore.Mvc.Controllers.ControllerFactoryProvider.<>c__DisplayClass6_0.<CreateControllerFactory>g__CreateController|0(ControllerContext controllerContext)

at Microsoft.AspNetCore.Mvc.Infrastructure.ControllerActionInvoker.Next(State& next, Scope& scope, Object& state, Boolean& isCompleted)

at Microsoft.AspNetCore.Mvc.Infrastructure.ControllerActionInvoker.InvokeInnerFilterAsync()

--- End of stack trace from previous location ---

at Microsoft.AspNetCore.Mvc.Infrastructure.ResourceInvoker.<InvokeFilterPipelineAsync>g__Awaited|20_0(ResourceInvoker invoker, Task lastTask, State next, Scope scope, Object state, Boolean isComplted)

at Microsoft.AspNetCore.Mvc.Infrastructure.ResourceInvoker.<InvokeAsync>g__Awaited|17_0(ResourceInvoker invoker, Task task, IDisposable scope)

at Microsoft.AspNetCore.Mvc.Infrastructure.ResourceInvoker.<InvokeAsync>g__Awaited|17_0(ResourceInvoker invoker, Task task, IDisposable scope)

at Microsoft.AspNetCore.Routing.EndpointMiddleware.<Invoke>g__AwaitRequestTask|6_0(Endpoint endpoint, Task requestTask, ILogger logger)

at Dapr.CloudEventsMiddleware.ProcessBodyAsync(HttpContext httpContext, String charSet)

at Microsoft.AspNetCore.Diagnostics.DeveloperExceptionPageMiddleware.Invoke(HttpContext context)

Well, that doesn't look too clever! Amongst the plentiful stack trace we can see this message:

---> Dapr.DaprException: Secret operation failed: the Dapr endpoint indicated a failure. See InnerException for details.

---> Grpc.Core.RpcException: Status(StatusCode="InvalidArgument", Detail="failed finding secret store with key kubernetes")

Another option for diagnosing the system is to use the Dapr telemetry & instrumentation that is hooked up to Zipkin, which has its own web dashboard.

In the search bar at the top, we can add a couple of filters, serviceName=finecollectionservice and tagQuery=error, to show us the distributed transactions associated with any errors:

Clicking the 'Show' button will display the full trace:

From this we can see a bunch of Kubernetes events that have this error:

failed finding secret store with key kubernetes
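The same query can be run against Zipkin's HTTP API, which is handy for scripting. This Python sketch makes three assumptions. First, Zipkin is published on `localhost:9411`, its default port, so check the mapping in docker-compose.yml. Second, the UI's `tagQuery` filter corresponds to the v2 API's `annotationQuery` parameter. Third, the failure text is carried in each span's `error` tag, which is Zipkin's convention for recording errors but may vary with the exporter. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumption: Zipkin is reachable on its default port.
ZIPKIN = "http://localhost:9411"


def traces_url(service, annotation_query="error", limit=10, base=ZIPKIN):
    """Build a Zipkin v2 API query roughly equivalent to the UI filters above."""
    params = {"serviceName": service, "annotationQuery": annotation_query, "limit": limit}
    return f"{base}/api/v2/traces?{urllib.parse.urlencode(params)}"


def error_messages(traces):
    """Collect distinct 'error' tag values from a list of traces (each a list of spans)."""
    return sorted({
        span["tags"]["error"]
        for trace in traces
        for span in trace
        if "error" in span.get("tags", {})
    })


def fetch_traces(url):
    """GET the traces JSON from Zipkin."""
    with urllib.request.urlopen(url) as response:
        return json.load(response)
```

Something like `error_messages(fetch_traces(traces_url("finecollectionservice")))` would then list the distinct error messages.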

So it seems the Fine Collection Service is trying to look up a secret from Kubernetes, which is rather odd given that our container orchestrator is Docker Compose!
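Before reaching for the debugger, you can confirm that the sidecar simply has no store by that name. One way is to call the Dapr secrets API directly, which takes the store name as part of the path: `GET /v1.0/secrets/<store>/<key>`. This Python sketch assumes the sidecar's HTTP port is reachable on `localhost:3500`, the Dapr default. Inside Docker Compose that generally means running it from the application's network namespace or publishing the port. The helper names are hypothetical.

```python
import json
import urllib.error
import urllib.parse
import urllib.request

# Assumption: the Dapr sidecar's HTTP API is reachable on its default port.
DAPR_HTTP = "http://localhost:3500"


def secret_url(store, key, base=DAPR_HTTP):
    """Build the Dapr secrets API URL: GET /v1.0/secrets/<store>/<key>."""
    return f"{base}/v1.0/secrets/{urllib.parse.quote(store)}/{urllib.parse.quote(key)}"


def get_secret(store, key, base=DAPR_HTTP):
    """Return the secret as a dict, or raise with the sidecar's error body."""
    try:
        with urllib.request.urlopen(secret_url(store, key, base)) as response:
            return json.load(response)
    except urllib.error.HTTPError as err:
        raise RuntimeError(f"{err.code}: {err.read().decode()}") from err
```

Calling `get_secret("kubernetes", "some-key")`, where the key is a placeholder, would be expected to fail with the sidecar's error body. A store that appears among the sidecar's loaded components would at least be found.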

So the time has come to open Visual Studio and debug the Fine Collection Service to get to the bottom of what's happening, which is where we'll start in the next episode!

Review

This was quite a long post, but we've accomplished a lot:

- Containerised the solution and set up Visual Studio to support that

- Used Docker Compose to set up several connected containers

- Discovered how we can use Docker Compose to enable the Sidecar pattern

- Looked at how Docker Volumes can be used to share file-based configuration across multiple containers

- Used the native Docker command-line tools to troubleshoot our containerised .NET services

All that means that in the next episode we can dive straight into debugging this final issue and experience Visual Studio's container-based inner dev-loop first hand.