Running Azure Functions in Docker on a Raspberry Pi 4

At our endjin team meet up this week, we were all presented with Raspberry Pi 4b's and told to go away and think of something good to do with them. I first bought a Raspberry Pi back in 2012 and have to admit, beyond installing XBMC and playing around with it, I haven't done a great deal. The most recent iteration of the Pi is a completely different beast – 4GB of RAM instead of the original's 512MB, a 1.5GHz quad core processor instead of a 700MHz single core, on board wifi, dual HDMI outputs – the list goes on, and the vastly improved spec really opens up a lot of new options for how it can be used.

As I've been doing a lot with Azure Functions recently, I thought it would be interesting to get a .NET Core Azure Function running on the Pi. Here's how I did it.

You will need…

- A Raspberry Pi. Obviously. I used a Pi 4; if you have a 3 then the instructions below should still work, although the setup steps for the Pi may vary.

- On your development machine:

  - Docker Desktop

  - Your .NET Core dev tool of choice

  - An SSH client. You can SSH from the standard Windows command prompt or PowerShell; alternatively, you can use a tool such as Windows Terminal or PuTTY.

Part 1 – Get the Pi ready for action

You'll need your Raspberry Pi up and running. There are plenty of docs on how to do this; if you've just taken your brand new Pi 4 out of the box, you can follow these steps to get ready. Note that if you're connecting your Pi to your network via ethernet, you will be able to get away without plugging it into a screen at any point; I had to connect mine to Wifi, which meant it was easier to start off connected to a screen and keyboard, so this is what the instructions below cover.

- Download the Raspbian image from here. I used the "Raspbian Buster with desktop" image.

- Follow the instructions here to get your image onto the SD card.

- Boot the Pi using your newly created SD card. It will take you through the first time setup process, including connecting to Wifi.

- Enable SSH. This is done from the main menu by opening the "Raspberry Pi Configuration" tool from the Preferences submenu. When the tool opens, choose the "Interfaces" tab and set SSH to enabled (in my install it's the second option in the list).

- Once you've done this, you will start to get warnings about changing your password, which you should do.

- It's also a good idea to change the hostname of the device, which is done from the System tab of the same Raspberry Pi Configuration tool you used to enable SSH.

Note - If you want to do a fully headless setup, this page tells you how. Once it's up and running and you've connected via SSH, you can access the terminal equivalent of the configuration tool via the command sudo raspi-config.

At this point you are free to disconnect the monitor and keyboard and move back to your main computer, as the rest of the steps can be executed at the terminal via SSH. Before you do that, you'll want to know the IP address of your Pi - you can either get this from your router, or you can start a terminal session on the Pi and execute ifconfig to see the IP address of the device. If you're connected via wireless then ifconfig wlan0 will show you your address; if you're hooked up via the on-board ethernet connection it's ifconfig eth0. Use this address to connect via your SSH client of choice - the username is pi and, if you didn't change the password, the default value is raspberry. To connect from a standard Windows command prompt, use

ssh pi@<put the IP address/hostname of the Pi here>

The next step is to install Docker on the Pi. From the command line, execute

curl -fsSL get.docker.com -o get-docker.sh && sh get-docker.sh

This uses cURL to download the Docker installation script and then executes it on your Pi. Once it's done, you should be able to execute

docker --version

to verify that Docker is installed.

In order to check that the basics are working, you can spin up Docker's "hello world" container by executing

docker run hello-world

This should pull and execute the hello-world container, which will tell you that all is well.

When I first did this, I got the following error:

docker: Got permission denied while trying to connect to the Docker daemon socket at unix:///var/run/docker.sock: Post http://%2Fvar%2Frun%2Fdocker.sock/v1.40/containers/create: dial unix /var/run/docker.sock: connect: permission denied.

See 'docker run --help'.

This is caused by my current user not having sufficient permissions to access the socket used to communicate with the Docker engine. You can get round this by executing

sudo docker run hello-world

which executes the command with superuser privileges, but what you really want to do is to add the current user to the docker security group which will give you the access you need. Assuming you're logged in as the default user "pi", this is done by executing

sudo usermod -a -G docker pi

usermod is the Linux command for modifying a user account. -G specifies the supplementary group to put the user in (in this case, "docker"), and -a tells usermod to append that group to the user's existing groups rather than replace them. The last argument is the user to modify. The whole thing is executed using sudo, as this command requires elevated privileges.

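That done, log out and back in (or close and reopen your SSH session) so that the new group membership takes effect. You should then be able to run the hello-world container without the need for sudo.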
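Group changes only apply to new login sessions, so if you want to check whether your current session has picked up the docker group, something like the following should do it. This is only a sketch: session_has_group is a made-up helper name, and it relies on nothing more than the standard id command.

```shell
# Succeeds if the current login session already carries the given group.
# session_has_group is a hypothetical helper, not part of Docker.
session_has_group() {
    id -nG | tr ' ' '\n' | grep -qxF "$1"
}

if session_has_group docker; then
    echo "docker group is active in this session"
else
    echo "docker group not active yet; log out and back in"
fi
```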

With this initial setup done, you can move onto the next step.

Part 2 – Build and containerize the function

The next step is to put together a simple functions app, then create a Docker container ready to run on the Pi.

Firstly, we're going to build an Azure functions app. It'll contain a single HTTP-triggered function as that leaves us with no dependencies on any other services (e.g. Azure Storage).

We're then going to use Docker to build the function using the .NET Core CLI tools, and package it up with the Azure Functions runtime into a container. To create this container, we take a base image – a pre-built container that already contains the Azure Functions runtime and everything it needs to run – and simply inject our compiled function into the correct location on the file system, resulting in a new image that we'll run on the Raspberry Pi. Using a base image makes our process super-simple, as we don't have to worry about making sure the image contains all the dependencies necessary for the Functions runtime to work.

So let's have a look at that in more detail.

The function code can be found on GitHub. What it does isn't hugely important – it's a simple "Hello, World" function, with next to no processing. The interesting parts are the two Docker-related files.

The .dockerignore file simply tells Docker to ignore the local.settings.json file when moving files around. This is important as when the function runs in the container, configuration will be set via another mechanism – typically via environment variables.
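For reference, a .dockerignore that does what's described above needs only a single line. The sketch below writes one into a scratch folder (demo_dir is just an illustrative name) rather than touching your project; the real file sits alongside the Dockerfile in the repository.

```shell
# Create a throwaway folder and write a one-line .dockerignore into it.
demo_dir=$(mktemp -d)
printf 'local.settings.json\n' > "$demo_dir/.dockerignore"

# Show the result.
cat "$demo_dir/.dockerignore"
```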

The Dockerfile is where the magic happens.

This is the file that Docker will use to build our container and is split into two parts.

The first part – lines 1 to 6 – builds the app. It references a Docker image that contains the .NET Core SDK (and therefore the .NET Core CLI), published by Microsoft and available on Docker Hub. Line 3 copies our function app's source code into the container, and the lines that follow execute the commands needed to build and publish it to a known location in that container's file system.

The second part – lines 8 to 12 – creates our container. Line 8 references the Azure Functions base image that we'll be adding to. You'll notice that it's tagged 2.0-arm32v7. Since this is the container that will be run on the Raspberry Pi, it's necessary to use a build that matches our target processor architecture, which is 32-bit ARM. As well as builds for AMD64, Nanoserver and IoT Edge, there are similar base images for the other Functions worker runtimes – Node, Python and PowerShell – which gives us a lot of options for target environments and languages. However, in this case, we're sticking with .NET Core.

Lines 9 and 10 set a couple of standard environment variables. These can be overridden when the container is started if required, but it makes sense to set some known default values here. Note also that there are various other environment variables set up in the base image which you would normally not need to change.

Finally, line 12 copies the published files from our first image into the required location in the second image. The resulting image will be what we run on the Pi.
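The Dockerfile itself lives in the GitHub repository rather than in this post. As a rough guide, the sketch below writes out a Dockerfile shaped like the one described, so that the line numbers mentioned above line up, and prints it with line numbers. The image names, the installer-env stage name, the paths and the AzureWebJobsScriptRoot setting are assumptions based on the standard Azure Functions Docker template; only the 2.0-arm32v7 tag and the console logging variable come from the text, so check the repository for the real thing.

```shell
# Write a sketch Dockerfile into a scratch folder (demo_dir is illustrative).
# Image names, stage name and paths below are assumptions, not the
# author's confirmed file.
demo_dir=$(mktemp -d)
cat > "$demo_dir/Dockerfile" <<'EOF'
FROM microsoft/dotnet:2.2-sdk AS installer-env

COPY . /src/dotnet-function-app
RUN cd /src/dotnet-function-app && \
    mkdir -p /home/site/wwwroot && \
    dotnet publish *.csproj --output /home/site/wwwroot

FROM mcr.microsoft.com/azure-functions/dotnet:2.0-arm32v7
ENV AzureWebJobsScriptRoot=/home/site/wwwroot \
    AzureFunctionsJobHost__Logging__Console__IsEnabled=true

COPY --from=installer-env ["/home/site/wwwroot", "/home/site/wwwroot"]
EOF

# Print it with line numbers (blank lines included) so the references
# in the text can be followed.
nl -ba "$demo_dir/Dockerfile"
```

Naming the first stage and copying from it by name is what keeps the SDK out of the final image: only the published output crosses over into the ARM runtime image.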

So, without further ado, open a command prompt, navigate to the folder containing the Dockerfile and execute the command

docker build -t azure-func-hello-world .

(note the full stop at the end; this is not a typo).

The -t parameter allows you to specify the name of the image. The full stop sets the "context" for the build to the current directory.

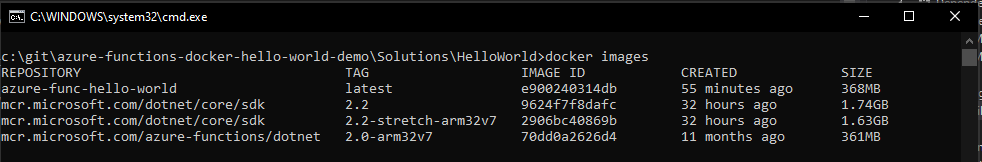

The first time you run this command it will take quite a while; Docker will need to download the two images we're referencing in the Dockerfile, and these are fairly large downloads. However, this will only happen the first time – if you rebuild, or containerize other functions that depend on the same images, they won't be downloaded again. Once the process has finished, you can run docker images and you should see something like this:

As you can see, as well as our two referenced images we also have our azure-func-hello-world image. Since it's targeting ARM-powered hardware you won't be able to run it locally; you can however have a look at its metadata using the command

docker image inspect azure-func-hello-world

Amongst other things, the output contains the list of environment variables that will be available by default to code running in the container, and confirms that the target OS and architecture are Linux and ARM respectively.
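If you'd rather not scroll through the full JSON, docker image inspect accepts a --format template that pulls out individual fields. The helpers below are a sketch: the function names and the DOCKER variable are made up for illustration (setting DOCKER=echo gives you a dry run that just prints the command), while the template fields are the standard ones from the inspect output.

```shell
# DOCKER is a hypothetical override so the helpers can be dry-run.
DOCKER="${DOCKER:-docker}"

# Print "<os>/<architecture>" for an image.
image_platform() {
    "$DOCKER" image inspect --format '{{.Os}}/{{.Architecture}}' "$1"
}

# Print the image's default environment variables, one per line.
image_env() {
    "$DOCKER" image inspect --format '{{range .Config.Env}}{{println .}}{{end}}' "$1"
}
```

With the image built, image_platform azure-func-hello-world should report the Linux and ARM target, and image_env azure-func-hello-world lists the defaults.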

So, with the container built, the final step is to get it up and running on the Raspberry Pi.

Part 3 – Hello, Raspberry World

The first step here is to get the image moved onto the Raspberry Pi. You have a couple of choices here. Firstly you could upload the image to Docker Hub, and then pull it down from the Hub onto the Pi. However, with images being quite large, this can take time so a faster way is to export the image to a tar archive and then import it onto the Pi. The simplest way to do this is via SCP, but if that doesn't work for some reason - or if your home network is too sluggish - it can be done via a USB drive.

Transferring via SCP

Since you've enabled SSH on your Raspberry Pi, you can use Secure Copy (SCP) to move the file from your dev machine to the Pi. An scp client is built into Windows 10, and macOS and most Linux distributions ship with one too; if you're using an older version of Windows you'll need an alternative SCP client such as WinSCP.

First, on your dev machine, export the image from Docker using the command

docker image save azure-func-hello-world -o c:\Temp\azure-func-hello-world.tar

(This assumes you have a Temp folder in the root of your C: drive - obviously you can change the target path to whatever suits you best.)

This exports the image to the specified location. Then, from the Windows command prompt or Windows Terminal, you can execute

scp c:\Temp\azure-func-hello-world.tar pi@<put the IP address/hostname of the Pi here>:~/

You'll be asked for the password and then the file will be transferred across to the home directory of the Pi user.

Then, back in your terminal session on the Pi, you can load the image into Docker using

docker load -i ~/azure-func-hello-world.tar

Once this completes, executing docker images on the Pi should show you that the image has been loaded and is ready for use. You can delete the tar file at this point if you like, as it's no longer needed - use

rm ~/azure-func-hello-world.tar

to get rid of it.
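If you want some reassurance that a large tar file survived the trip before you delete it, you can compare checksums. The following is a sketch for the Pi side, with verify_sha256 being a made-up helper name; it assumes you've worked out the SHA-256 digest on your dev machine first (on Windows, certutil -hashfile with the SHA256 algorithm or PowerShell's Get-FileHash will give you one).

```shell
# Succeeds when the file's SHA-256 digest matches the expected hex string.
# $1 = path to the file, $2 = expected digest (upper or lower case hex).
# verify_sha256 is a hypothetical helper name.
verify_sha256() {
    actual=$(sha256sum "$1" | cut -d ' ' -f 1)
    expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
    [ "$actual" = "$expected" ]
}
```

You'd call it as verify_sha256 ~/azure-func-hello-world.tar followed by the digest from your dev machine, and only load or delete the file if it succeeds.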

Transferring via a USB drive

Assuming your USB drive is plugged into your dev machine and is available as D:, you can export the image to it using the command

docker image save azure-func-hello-world -o d:\azure-func-hello-world.tar

You can then move the USB drive to the Pi, where you will need to mount it in order to access the file. If you're using the Raspbian desktop, you'll get a prompt asking you to enter your password to grant permission to mount it, and it will then be mounted automatically. If you're using the terminal, either locally or via SSH, you'll need to mount it manually.

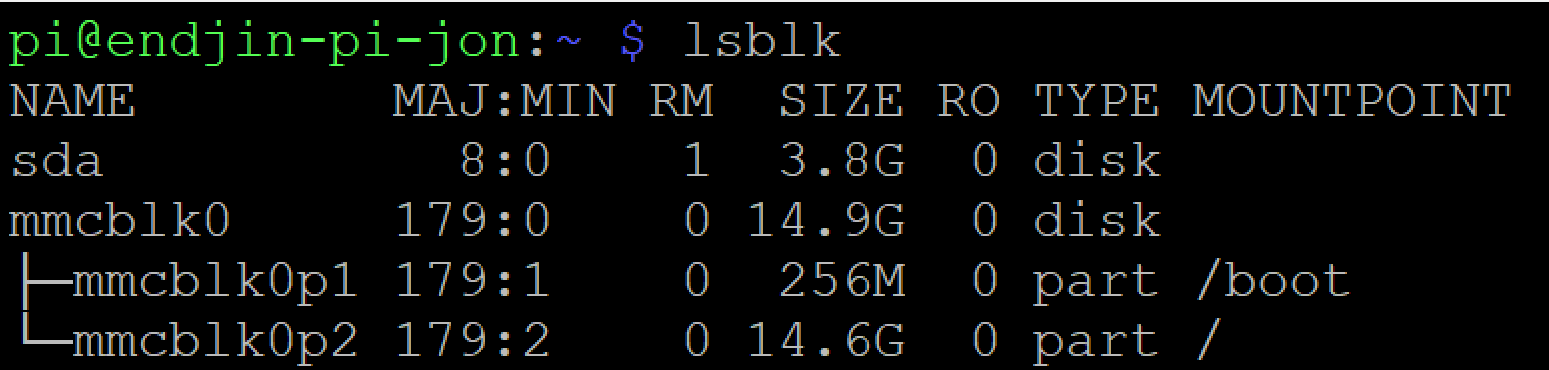

Assuming it's the first USB drive you've plugged in, it will likely be called sda; you can confirm this by executing lsblk, which will give you output that looks like this:

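This tells us that we have two disks available, one of which is split into two partitions, both of which are mounted. The unmounted disk, sda, is the USB drive. (If lsblk lists a partition such as sda1 beneath your drive, mount that partition rather than the whole disk.) You can mount it by executing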

sudo mount /dev/sda /mnt

Then, with the device mounted, you can import the image into Docker:

docker load -i /mnt/azure-func-hello-world.tar

Once this completes, executing docker images on the Pi should show you that the image has been loaded and is ready for use.

Running the image

To run the image you execute

docker run -p 8080:80 azure-func-hello-world

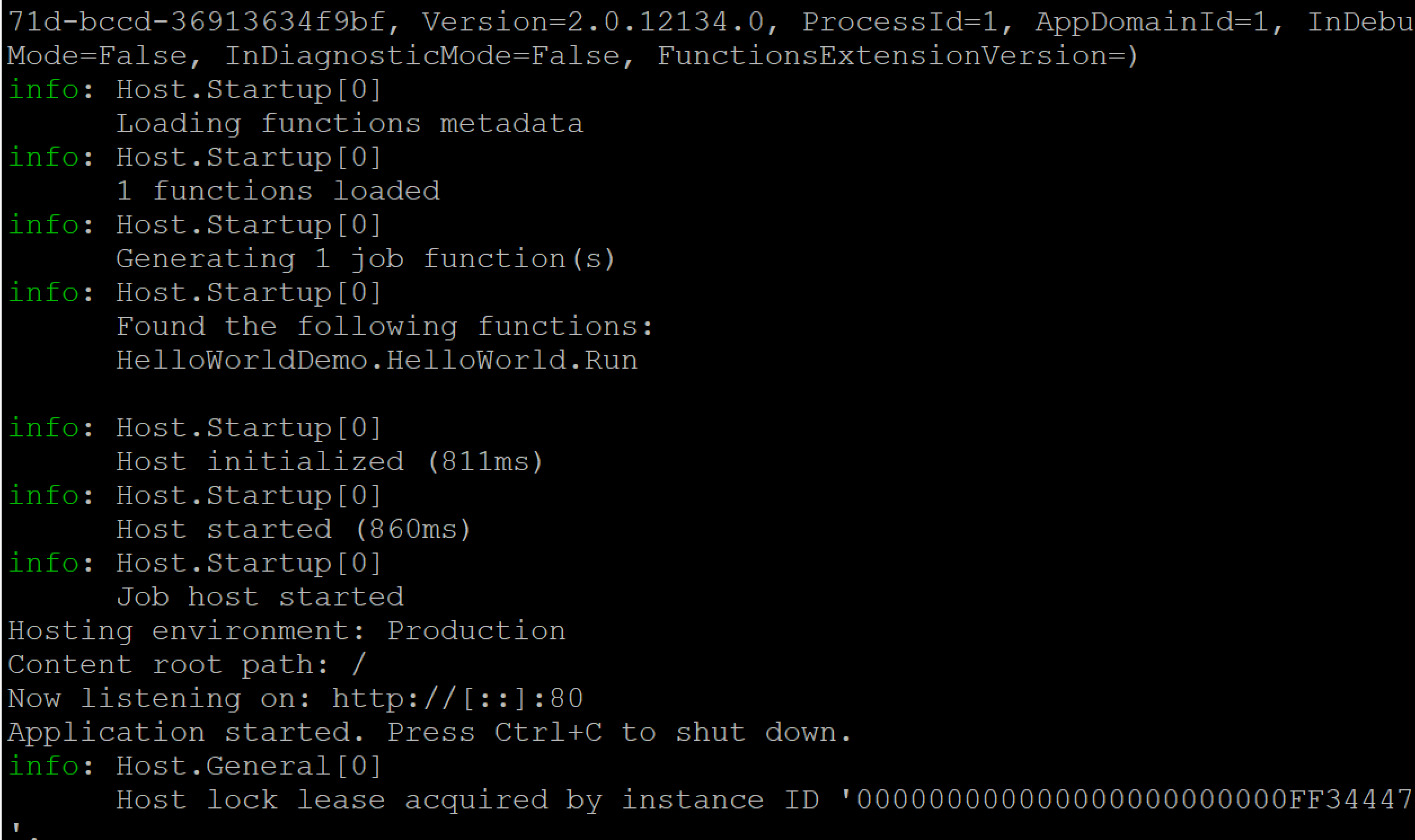

You may have noticed if you looked closely at the docker image inspect output earlier that there's an environment variable which enables console logging. As a result, you should see the familiar console output of the function starting up.

Once it's started up, you should be able to hit the endpoint by executing

curl http://localhost:8080/hello

This will hit the endpoint and write out the response to the console. The Pi has no firewall running by default, so you should also be able to hit the endpoint from a browser, Postman, or the tool of your choice running elsewhere on your network. If you can set up port forwarding on your router, then you're one firewall rule away from making it externally accessible too.
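The functions host can take a little while to start on the Pi, so if you're scripting any of this you may want to wait for the endpoint to respond before calling it. Here's a minimal sketch using curl; wait_for_url is a made-up helper name, and the one-second polling interval is an arbitrary choice.

```shell
# Poll a URL until it answers with a successful HTTP status, or give up.
# $1 = URL, $2 = maximum number of attempts (roughly one per second).
# wait_for_url is a hypothetical helper name.
wait_for_url() {
    attempts=0
    while [ "$attempts" -lt "$2" ]; do
        if curl -fsS -o /dev/null "$1" 2>/dev/null; then
            return 0
        fi
        attempts=$((attempts + 1))
        sleep 1
    done
    return 1
}
```

For example, wait_for_url http://localhost:8080/hello 60 returns as soon as the function responds, or fails after about a minute.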

Take a moment to celebrate; you now have an Azure function, written in C# on the dev environment of your choice, and running on your Raspberry Pi.

The command to run the image is largely self-explanatory; the only point of note is the -p parameter, which tells Docker to publish the container's ports to the host, making them accessible outside the container. In the above command, we're publishing the container's port 80 - on which the functions runtime listens - on port 8080 of our host system, which means Docker will route requests that the Pi receives on port 8080 into the container to be handled by our function listening on port 80. You can use any host port number you like as long as it's not already in use.

There are a couple of variants on the above command that are of interest:

Firstly, you can use the -e parameter to supply environment variables. In this example, we supply a value for AzureFunctionsJobHost__Logging__Console__IsEnabled which results in logging to console being disabled.

docker run -p 8080:80 -e AzureFunctionsJobHost__Logging__Console__IsEnabled=false azure-func-hello-world

Run this command and you will see that you receive only a few log messages to confirm that the functions host has started successfully.
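Once you have more than a couple of settings, repeating -e gets unwieldy, and docker run's --env-file option lets you keep them in a file instead. A rough sketch follows; the env_file path, the run_with_env_file name and the DOCKER variable (set it to echo for a dry run) are all just for illustration.

```shell
# DOCKER is a hypothetical override so the helper can be dry-run.
DOCKER="${DOCKER:-docker}"

# Write the settings to a file, one NAME=value pair per line.
env_file=$(mktemp)
cat > "$env_file" <<'EOF'
AzureFunctionsJobHost__Logging__Console__IsEnabled=false
EOF

# Start the function with every setting from the given file applied.
run_with_env_file() {
    "$DOCKER" run -p 8080:80 --env-file "$1" azure-func-hello-world
}
```

Calling run_with_env_file "$env_file" then behaves like the -e version above.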

Secondly, you can tell docker to run the container in the background (referred to as "detached mode") using the -d parameter.

docker run -p 8080:80 -d azure-func-hello-world

When you execute this you'll be given the ID of the resulting container and then returned to the command prompt to continue working; running docker ps will show you that your container is up and running. A detached container is managed by the Docker daemon rather than by your shell, so it carries on running after your SSH session ends, until it terminates itself, you stop it, or the Docker daemon exits. If you also add the --rm parameter, Docker will remove the container automatically once it exits, which saves you cleaning up stopped containers by hand:

docker run -p 8080:80 -d --rm azure-func-hello-world

You can interact with a detached container using docker container commands.
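To give a flavour of that, here's a sketch of the commands you're most likely to want, wrapped in small helpers. Giving the container a name with --name saves you copying IDs around; the name hello-func, the function names and the DOCKER variable (set it to echo for a dry run) are all made up for this example.

```shell
# DOCKER is a hypothetical override so the helpers can be dry-run.
DOCKER="${DOCKER:-docker}"

# Start the function detached, under a fixed container name.
start_func() {
    "$DOCKER" run -d --name hello-func -p 8080:80 azure-func-hello-world
}

# Show the last few lines of the function host's console output.
func_logs() {
    "$DOCKER" logs --tail 20 hello-func
}

# Stop the container and remove it so the name can be reused.
stop_func() {
    "$DOCKER" stop hello-func && "$DOCKER" rm hello-func
}
```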

It's also possible to configure docker to automatically run images on startup; you can read more about this here.
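One way to get that behaviour is a restart policy on the container. The sketch below uses docker run's --restart option with the unless-stopped policy, assuming the Docker service itself starts at boot; run_func_on_boot and the DOCKER variable (set it to echo for a dry run) are made-up names. Note that Docker won't let you combine --restart with --rm.

```shell
# DOCKER is a hypothetical override so the helper can be dry-run.
DOCKER="${DOCKER:-docker}"

# Start the function detached and have Docker bring it back up whenever
# the daemon starts, unless the container was stopped on purpose.
run_func_on_boot() {
    "$DOCKER" run -d --restart unless-stopped -p 8080:80 azure-func-hello-world
}
```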

Hopefully this post has got you through the basics of getting your functions running on your Raspberry Pi. If you'd like some further inspiration about what else you could do with your containerized functions, I suggest having a look at this post by Dan Clarke in which he explains how to set up a Kubernetes cluster on multiple Raspberry Pis, which will probably start making you wonder if it might actually be just possible to run some production functions on these tiny bits of kit...