Long Running Functions in Azure Data Factory

Azure Functions are powerful and convenient extension points for your Azure Data Factory pipelines. Put your custom processing logic behind an HTTP-triggered Azure Function and you are good to go. Unfortunately, many people read the Azure documentation and assume they can merrily run a Function for up to 10 minutes on a Consumption plan, or with no timeout at all on an App Service plan.

This assumption is flawed for two reasons:

- Processing times vary, whether because of variations in the data being processed or simply because the code runs on multi-tenant infrastructure. Unless your workload finishes well below the documented threshold, chances are that every now and again it will hit the maximum timeout. You may be tempted to switch to an App Service plan, but that does not help, because of the second reason.

- The advertised 10 minute timeout, or the unlimited timeout for App Service plans, DOES NOT apply to HTTP-triggered functions. The actual timeout is 230 seconds. Why? Because the Azure Load Balancer imposes this limit, and we have no control over it.

Fortunately, there is a simple solution. Rather than the client waiting for an operation to complete, the server can respond with a 202 Accepted status code along with information that the client can use to determine if and when the operation has completed.

This sounds like a great idea, but we seem to have taken a simple operation and added a fair amount of complexity. Firstly, our service now needs to expose a second endpoint that clients can use to check on progress, which in turn requires state to be persisted across calls. We need some sort of queuing that allows our processing to run asynchronously, and we need to think about how we report error conditions to the client. The client itself now needs to implement some sort of polling so that it can respond when processing is complete.
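To make those moving parts concrete, here is a minimal in-memory sketch of the pattern in plain Python. It is not Durable Functions code: the function names, the `statusUri` field and the dictionary used as a state store are all assumptions made for illustration, and a real service would need durable storage and a proper queue.

```python
import threading
import time
import uuid

# In-memory stand-in for persisted state (assumption: a real service uses durable storage).
_jobs = {}
_lock = threading.Lock()


def _run(job_id, seconds_to_wait):
    """Background worker: simulate the long-running operation, then record the outcome."""
    try:
        time.sleep(seconds_to_wait)
        outcome = {"state": "Completed", "output": f"waited {seconds_to_wait}s"}
    except Exception as exc:  # errors are reported through the status endpoint
        outcome = {"state": "Failed", "output": str(exc)}
    with _lock:
        _jobs[job_id] = outcome


def start_job(seconds_to_wait):
    """'Start' endpoint: hand the work to a background thread and answer 202 immediately."""
    job_id = uuid.uuid4().hex
    with _lock:
        _jobs[job_id] = {"state": "Running", "output": None}
    threading.Thread(target=_run, args=(job_id, seconds_to_wait), daemon=True).start()
    return 202, {"id": job_id, "statusUri": f"/status/{job_id}"}


def get_status(job_id):
    """'Status' endpoint: 202 while running, 200 when done, 500 on failure, 404 if unknown."""
    with _lock:
        job = _jobs.get(job_id)
    if job is None:
        return 404, {"error": "unknown job"}
    code = {"Running": 202, "Completed": 200, "Failed": 500}[job["state"]]
    return code, dict(job)
```

Even this toy version needs a state store, a background worker, an error path and a second endpoint, which is exactly the plumbing we would rather not write by hand.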

The good news is that the Microsoft Azure Functions team and the Data Factory team have thought about all of this. Durable Functions enable us to easily build asynchronous APIs by managing the complexities of status endpoints and state management. Azure Data Factory automatically supports polling HTTP endpoints that return 202 status codes. So let's put this all together with a trivial example.

Durable Functions

The examples below are for Durable Functions 2.0.

Start by creating an HTTP-triggered function to start the processing. To keep things simple, we will pass a small JSON payload containing a secondsToWait value, which will be used to simulate a long-running operation.

Note the IDurableOrchestrationClient argument, which is used to start the orchestration and create the response.

Next add an orchestrator function - this function coordinates work to be done by other durable functions.

The orchestrator function doesn't do any of the processing itself; instead, it delegates work via IDurableOrchestrationContext.CallActivityAsync(...).

Finally, we'll add an activity function to do the actual processing. Note that this function is still subject to the function timeout dictated by the hosting plan. Where possible, consider decomposing the work into multiple steps coordinated by the orchestrator function, so that no single step runs into the timeout limit. Durable Functions supports a range of application patterns that you should familiarize yourself with.
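The idea of decomposing work can be sketched in plain Python. This is only an illustration of the splitting logic, not Durable Functions code: `split_into_steps` and `orchestrate` are hypothetical helpers, and in a real orchestrator each step would be a CallActivityAsync call inside deterministic orchestrator code.

```python
def split_into_steps(total_seconds, max_step_seconds):
    """Split one long wait into chunks that each fit under a per-activity limit."""
    if total_seconds < 0 or max_step_seconds <= 0:
        raise ValueError("total_seconds must be >= 0 and max_step_seconds must be > 0")
    steps = []
    remaining = total_seconds
    while remaining > 0:
        step = min(remaining, max_step_seconds)
        steps.append(step)
        remaining -= step
    return steps


def orchestrate(total_seconds, max_step_seconds, run_activity):
    """Stand-in for an orchestrator: delegate each chunk to an activity callable, in order."""
    return [run_activity(step) for step in split_into_steps(total_seconds, max_step_seconds)]
```

For example, a 25 second job with a 10 second per-step limit becomes three activity calls of 10, 10 and 5 seconds, none of which comes close to the limit on its own.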

Publish your function and trigger it by sending a POST request. You should receive a response which looks like this:
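As an illustration of that response shape, the sketch below builds a body with the fields that a Durable Functions check-status response carries (id, statusQueryGetUri, sendEventPostUri, terminatePostUri, purgeHistoryDeleteUri) and pulls out the one we care about. The host name and instance ID are placeholders, real URIs also carry query parameters such as an access key, and the helper name is hypothetical.

```python
import json

# Illustrative body only: host and instance ID are placeholders, query strings are omitted.
_BASE = "https://example.invalid/runtime/webhooks/durabletask/instances/abc123"
SAMPLE_BODY = json.dumps({
    "id": "abc123",
    "statusQueryGetUri": _BASE,
    "sendEventPostUri": _BASE + "/raiseEvent/{eventName}",
    "terminatePostUri": _BASE + "/terminate?reason={text}",
    "purgeHistoryDeleteUri": _BASE,
})


def status_url_from_start_response(body_text):
    """Return the statusQueryGetUri from the JSON body of the start response."""
    payload = json.loads(body_text)
    if "statusQueryGetUri" not in payload:
        raise ValueError("response does not contain statusQueryGetUri")
    return payload["statusQueryGetUri"]


status_url = status_url_from_start_response(SAMPLE_BODY)
```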

The statusQueryGetUri returns the status of the long-running orchestration instance. If you follow this link, you will receive a runtimeStatus that describes the state of the orchestration instance, along with some other useful information.

If you pay close attention, you may also notice that the HTTP status code of this response changes depending on the state of the orchestration.

| Status | HTTP Status Code |
|---|---|
| Pending or Running | 202 Accepted |
| Completed | 200 OK |
| Failed or Terminated | 500 Internal Server Error |

Bear this in mind as this is the key for how the next part works.

Azure Data Factory

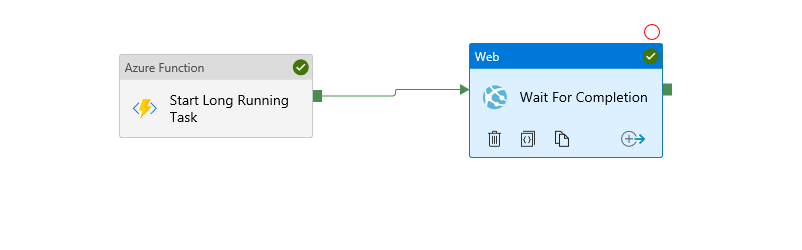

Create an Azure Function linked service and point it to your deployed function app. Create a new pipeline and add an Azure Function activity which will call the asynchronous Function.

This function will simply return the payload containing the statusQueryGetUri seen above.

Next we need to instruct Data Factory to wait until the long running operation has finished. Do this by adding a web activity:

The important piece to note is the url property, which should be set to @activity('Start Long Running Task').output.statusQueryGetUri. When the pipeline runs, the web activity retrieves the status of the operation and continues to poll the endpoint for as long as the HTTP status code is 202 Accepted. When the operation completes, the status URL returns either 200 OK, indicating that the activity was successful, or 500 Internal Server Error, which causes the activity to fail.
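The polling behaviour described above can be sketched in a few lines of Python. This is a model of the logic only, not how Data Factory is implemented: the function name, the polling interval and the poll limit are assumptions, and the HTTP call is injected as a callable so the loop can be exercised without a network.

```python
import time


def poll_until_done(fetch_status, status_url, interval_seconds=5.0, max_polls=100,
                    sleep=time.sleep):
    """Poll status_url until it stops answering 202 Accepted.

    fetch_status is any callable that takes a URL and returns (http_status_code, body).
    """
    for _ in range(max_polls):
        code, body = fetch_status(status_url)
        if code == 202:      # still pending or running: wait and ask again
            sleep(interval_seconds)
            continue
        if code == 200:      # finished successfully
            return body
        # anything else (for example 500) is treated as a failed operation
        raise RuntimeError(f"operation failed with HTTP {code}: {body}")
    raise TimeoutError(f"still running after {max_polls} polls")


# Example with a fake endpoint that answers 202 twice and then 200.
_responses = iter([
    (202, {"runtimeStatus": "Running"}),
    (202, {"runtimeStatus": "Running"}),
    (200, {"runtimeStatus": "Completed"}),
])
result = poll_until_done(lambda url: next(_responses),
                         "https://example.invalid/status",
                         sleep=lambda seconds: None)
```

The web activity saves us from writing and hosting a loop like this ourselves.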

The pipeline should look like this:

Run the pipeline and verify that it behaves as expected. You will notice that the web activity waits until the asynchronous function has completed, which allows you to trigger dependent ADF activities only after the asynchronous operation has finished.

Summary

Durable Functions are a great way to implement custom long-running data processing steps within Azure Data Factory without falling foul of the 230 second timeout on HTTP-triggered Functions. The Data Factory web activity has built-in support for polling APIs that return 202 status codes, making it trivial to integrate asynchronous APIs into your data pipelines.