"But it works on my cloud!" - are your developers still making the same mistakes in a world of DevOps and PaaS services?

I guarantee that any of you who have ever worked as, or with, a software engineer will have heard the phrase "it works on my machine!" before.

This statement is so infamous that there's even a certificate for it, that can be handed out to those who have defiantly claimed that the feature that they've built, the task they've completed or the bug that they've fixed is "done" - despite the fact that it doesn't actually work beyond the realms of their local development environment.

Those who find themselves uttering the words "but it works on..." have typically either not tested their code well enough, or at least not tested it under the same conditions that it will eventually be deployed into.

Even if every last edge case has been accounted for when testing an application flow, a failure to fully appreciate what the code is actually doing outside of the specific algorithm or business logic will invariably result in some kind of deployment issue when it's time to release into a production environment.

These scenarios often boil down to an "environment issue" - a difference in configuration between the development environment and the target build/test/release/production environment that causes application code to fail.

Differences in operating systems or framework versions between a development machine and a production server can cause problems, as can differences in permissions - especially if you're used to running as a local admin on your own machine.

Differences in infrastructure topology (single machine vs. load-balanced servers) or network configuration (HTTP vs. HTTPS, firewall settings etc.) will also no doubt cause problems if not accounted for when designing and developing the code.

Various ways to mitigate these issues have been adopted over the years (e.g. developing on representative virtual machines to mimic production environments as closely as possible, or gated check-ins that only allow code to be committed to mainline branches once it has passed all forms of unit and integration testing), but essentially these are just there to support the requirement that a software engineer needs a bigger-picture view than what's going on inside their own IDE.

If you've ever worked in operations or application support, you'll no doubt have a strong opinion on this!

But the cloud changes everything, right?

It's hard to believe that there's already a generation of software engineers out there who have only experienced a cloud-enabled world. The days of having to manually configure physical hosting environments are now long gone, but that doesn't mean that deployment issues are a thing of the past.

On-demand platform services and infrastructure, available through web-based portals, REST APIs and configurable templates, have given rise to new concepts in recent years, including infrastructure as code and the whole DevOps movement. Developers are no longer able to "throw it over the fence" to another team to deploy, as expectations and demands for fully automated, repeatable, testable build and release pipelines increase with the speed and agility that the cloud offers.

The early days of Azure

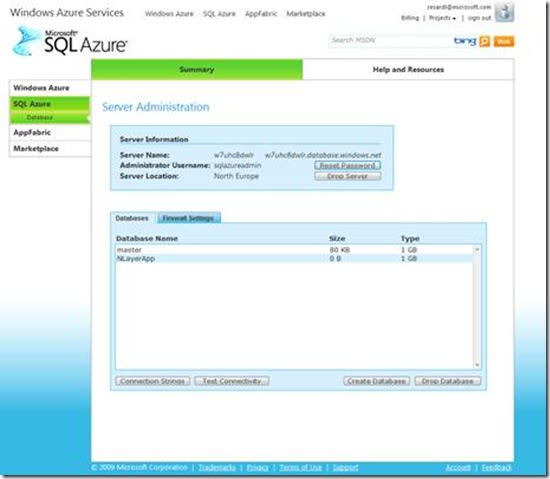

I was lucky enough to work on one of the first commercial Azure projects in the UK back in 2009 - a time when the Azure management portal had only 4 services available (Web Roles, Worker Roles, Storage and SQL Azure)!

In those early days we used emulators to run Azure services on local machines - a sort of sandboxed VM with a wrapper on top of SQL Server providing persistent storage. Deployment issues were rife at that point as the emulators (as good as they were) were just a pseudo-environment. The differences between on-premises SQL Server and early versions of SQL Azure were one big area that immediately brings back bad memories!

Nowadays, we build and deploy directly into real cloud services in the first instance, so "it works on my machine" may not be appropriate any more. But, even in a full PaaS solution, where underlying infrastructure is abstracted so far away that you don't actually know (or care) what kind, flavour or size of machine is actually running your code, we still typically have some notion of a "development environment".

This may be because (in our case) we're doing work for clients - we might develop a project in an endjin Azure subscription before handing over ARM templates and scripts to automatically deploy into a client's subscription. Or within an organisation (especially if there's an Enterprise Agreement in place) there may be siloed subscriptions for different teams, away from the subscription that contains the production services and infrastructure.

Either way, there's just as much opportunity to fall into the trap of an "environment issue" today as there always has been. Ironically, as cloud services become more and more sophisticated, the complexity of PaaS solutions has increased.

What was a simple application build and deploy has evolved into configuring and deploying infrastructure again (albeit PaaS infrastructure). We're now able to build sophisticated cloud applications, which means we're responsible for things like authorisation, access & encryption policies and networking.

All of which only increases the potential for "environment issues". We've definitely encountered our fair share over the years in our use of Azure, the majority of which could be grouped into the following categories:

1. Permission denied

Permissions can be applied in Azure at granular levels - through Role Based Access Control and through Azure Resource Manager Policies. You may be a Global Admin in your own MSDN subscription or siloed dev sandbox, and everything might run fine from a local PowerShell script, but the Service Principal running your build and release process, or the application itself, may not have permission to access or create the necessary resources in a different subscription.

Requests to provision infrastructure may also be denied by policies that restrict the size, scale or even the location of the requested services, typically to control the costs incurred in running them.
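One way to catch this earlier is a pre-flight check that compares the actions a deployment needs against what the deploying identity has been granted, before anything is provisioned. The sketch below is a simplified model and not the Azure SDK: the function names, the list of required actions and the granted patterns are all hypothetical, and wildcard matching is approximated with Python's `fnmatch`.

```python
from fnmatch import fnmatchcase


def is_allowed(action, granted, excluded=()):
    """Return True if `action` matches a granted pattern and no excluded one."""
    action = action.lower()

    def matches(patterns):
        return any(fnmatchcase(action, p.lower()) for p in patterns)

    return matches(granted) and not matches(excluded)


def missing_permissions(required, granted, excluded=()):
    """List the required actions that would be refused, in their original order."""
    return [a for a in required if not is_allowed(a, granted, excluded)]


# Hypothetical values for illustration: what the release needs to do,
# and what the pipeline's identity has been granted in the target subscription.
REQUIRED_ACTIONS = [
    "Microsoft.Storage/storageAccounts/write",
    "Microsoft.KeyVault/vaults/read",
]
PIPELINE_GRANTED = ["Microsoft.Storage/*"]

gaps = missing_permissions(REQUIRED_ACTIONS, PIPELINE_GRANTED)
if gaps:
    print("Deployment would fail, missing:", ", ".join(gaps))
```

Running the same check with the permissions you hold in your own sandbox and then with those of the release identity makes the difference between the two visible before the first failed deployment.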

2. Manual configuration missing

Tweaking settings and configuration is easy to do when it's just a few clicks in a web-based portal to get something working. Finding the associated PowerShell cmdlet, or translating the change into an ARM template, typically takes longer, especially if it needs to be parameterised with different Dev/Test/Prod values. As a result it can easily get forgotten, or added to a to-do list that doesn't get done before it has already caused someone else a problem.

And, even with your biggest DevOps hat on, not everything can or should be automated. Passwords, tokens or other secrets might need to be manually added to enterprise key management tools such as Azure Key Vault by people with specific role-based permissions in order to adhere to security policies.
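A forgotten setting is easier to spot if the build checks that every token in a template has a value for every environment. The following is a minimal sketch under stated assumptions: the `{{token}}` placeholder syntax, the template and the environment values are all made up for illustration, and are not ARM template syntax.

```python
import re

# Hypothetical placeholder syntax: {{tokenName}}
TOKEN = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def missing_tokens(template, environments):
    """Map each environment name to the tokens it has no value for."""
    required = set(TOKEN.findall(template))
    gaps = {}
    for name, values in environments.items():
        missing = sorted(required - set(values))
        if missing:
            gaps[name] = missing
    return gaps


TEMPLATE = '{"storageName": "{{storageName}}", "sku": "{{sku}}", "region": "{{region}}"}'

ENVIRONMENTS = {
    "dev": {"storageName": "examplestoredev", "sku": "Standard_LRS", "region": "northeurope"},
    # "sku" was set by hand in the portal for dev and never added here.
    "prod": {"storageName": "examplestoreprod", "region": "northeurope"},
}

print(missing_tokens(TEMPLATE, ENVIRONMENTS))  # -> {'prod': ['sku']}
```

Secrets that have to be entered manually would stay out of a file like this; the check only confirms that the non-secret values exist for each environment.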

3. Differing feature support

Not all platform services are created equal - each Azure region has its own set of supported resources and associated API versions. This is a moving target as features and services get rolled out through incremental onboarding. You may be deploying into North Europe but your client, or target user base, might be based in the US.

We're lucky enough in the UK to have two regions close by - North and West Europe - but support for features is different between the two. At the time of writing, Azure Data Factory is supported in North Europe but not West Europe, and Automation Account support is the opposite.
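The same kind of check can be applied to region support. The sketch below hard-codes the two differences mentioned above as a lookup table purely for illustration; the table and function names are hypothetical, and in practice the data would need to come from the platform itself because it changes over time.

```python
# Illustrative snapshot only, taken from the example above; not a live data source.
SUPPORTED = {
    "North Europe": {"Data Factory"},
    "West Europe": {"Automation Account"},
}


def unsupported(required, region, supported=SUPPORTED):
    """Return the required services that the given region does not offer."""
    return sorted(set(required) - supported.get(region, set()))


def regions_supporting(required, supported=SUPPORTED):
    """Return the regions that offer every required service."""
    return sorted(r for r in supported if not unsupported(required, r, supported))


solution_needs = ["Data Factory", "Automation Account"]
for region in SUPPORTED:
    print(region, "is missing:", unsupported(solution_needs, region))
print("Regions that can host everything:", regions_supporting(solution_needs))
```

With this snapshot, a solution needing both services cannot be hosted in a single one of the two regions, which is exactly the kind of constraint worth discovering before the first deployment into a client's chosen region.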

So, nothing's changed then?

What's (not) surprising is that the categories of issues above are still the same in the cloud as they always have been - regardless of the platforms, the technologies and the specifics of the applications themselves. Whilst there may be new tooling to attempt to avoid them in a PaaS world, this really comes down to a solid definition of "done", that is understood and adhered to by the entire team.

You could argue that with the focus in the software industry on DevOps, the need for understanding the whole of a solution - not only the application code, but its dependencies, security constraints, networking configuration and any other non-functional requirements - is only ever-increasing for the development team.

The shift towards infrastructure-as-code that cloud PaaS services enable provides, or even demands, an opportunity to break away from the negative connotations of the "rockstar developer" and to help foster an industry of highly capable, multi-disciplined teams.

But, and excuse the cliché, with great power comes great responsibility - the definition of done applies more and more to a single DevOps role, meaning a focus on quality and professionalism in that role is critical to success with a PaaS model.

It's a commonly held view that the later a bug is found in the software development lifecycle, the more costly it is to fix.

So, to avoid killing the real benefits of the cloud through the re-work and duplicated effort that poor-quality deliveries cause, getting things done properly first time around is always the best approach - and this holds as true now, in a cloud-first world, as it always has.